Brands invest in Answer Engine Optimization (AEO) and Generative Engine Optimization (GEO) to influence how they appear in AI-generated answers. These practices address external visibility in tools like ChatGPT, Perplexity, and Gemini. They answer a real question: when someone asks an AI assistant about your category, does your brand show up?

But visibility is only half the story. Once companies build their own AI products—copilots, assistants, agents—they face a different problem. What happens inside those products? How do users actually interact with them? Where do they succeed or fail? External visibility tools were never designed to answer these questions.

The rise of AI visibility tools

Search is becoming conversational. People ask questions instead of typing keywords. They expect direct answers instead of ten blue links. This shift creates a new challenge for brands: how do you appear in an AI's response when there are no traditional search rankings?

AEO focuses on structuring content so AI systems can reference it accurately. This means clear headings, structured data, and authoritative sources. The goal is to be cited when users ask questions related to your domain.

GEO extends this concept across multiple generative AI platforms. It optimizes for how different AI engines synthesize and present information. Both practices emerged because brands realized traditional SEO strategies do not guarantee visibility in AI answers.

The value here is straightforward. If your competitors appear in AI responses and you do not, you lose mindshare. Visibility tools track citations, monitor brand mentions, and measure share of voice across AI platforms. They operate externally, watching how third-party systems represent you.

What AEO and GEO actually measure

AEO and GEO tools focus on external AI engines. They track whether your content gets cited. They monitor how often your brand appears. They measure your presence relative to competitors.
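To make "presence relative to competitors" concrete, here is a minimal share-of-voice sketch. It assumes you have already collected a sample of AI-generated answers for your category prompts. The brand names, the sample answers, and the naive case-insensitive substring matching are illustrative assumptions; commercial AEO and GEO tools use their own collection and matching methods.

```python
from collections import Counter


def share_of_voice(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Return each brand's share of all brand mentions across sampled AI answers.

    Counts at most one mention per brand per answer (presence, not frequency),
    using naive case-insensitive substring matching (an assumption of this sketch).
    """
    mentions = Counter()
    for answer in answers:
        text = answer.lower()
        for brand in brands:
            if brand.lower() in text:
                mentions[brand] += 1
    total = sum(mentions.values())
    if total == 0:
        # No brand appeared in any answer: everyone has zero share.
        return {brand: 0.0 for brand in brands}
    return {brand: mentions[brand] / total for brand in brands}


# Hypothetical answers collected from AI assistants for category prompts.
sample_answers = [
    "Popular options include AcmeCRM and Globex.",
    "Many teams choose Globex for its integrations.",
    "AcmeCRM, Globex, and Initech all offer free tiers.",
]
print(share_of_voice(sample_answers, ["AcmeCRM", "Globex", "Initech"]))
```

Counting presence per answer rather than raw frequency keeps one verbose answer from dominating the metric. That is a design choice of this sketch, not an industry standard.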

This mirrors how SEO focuses on rankings. You want to know where you stand. Are you visible? Are you authoritative? The object of analysis is always outside your control. You optimize your content, but the AI engine decides how to use it.

These tools do not tell you what happens after visibility. They cannot track user behavior inside your own AI products. They measure discovery, not usage.

The blind spot starts after visibility

Many companies now run internal AI systems. Employees use copilots to draft documents, analyze data, or automate workflows. Customers interact with AI assistants to get support, complete transactions, or find information. These are not external search engines. They are your products.

Once a user enters your AI product, visibility metrics become irrelevant. The question shifts from "are we discoverable?" to "are we delivering value?" You need to understand what users are trying to accomplish. You need to know where they get stuck. You need to measure satisfaction, trust, and adoption.

This is not a search problem. It is a product analytics problem. Traditional tools like Google Analytics or Mixpanel were built for click-based interfaces. They track page views and button clicks. They cannot analyze conversations. They cannot detect intent. They cannot measure sentiment in natural language interactions.

This gap is where most AI teams operate blind.

Why Nebuly is not an AEO or GEO tool

Nebuly does not optimize content for external AI engines. It does not track citations in ChatGPT or Gemini. It does not help you rank in AI answers. That is not the problem it solves.

Nebuly is the first platform built specifically to track user analytics inside GenAI and agentic AI products. It analyzes how people actually use your AI systems. It focuses on conversations, not clicks. It measures intent, not impressions.

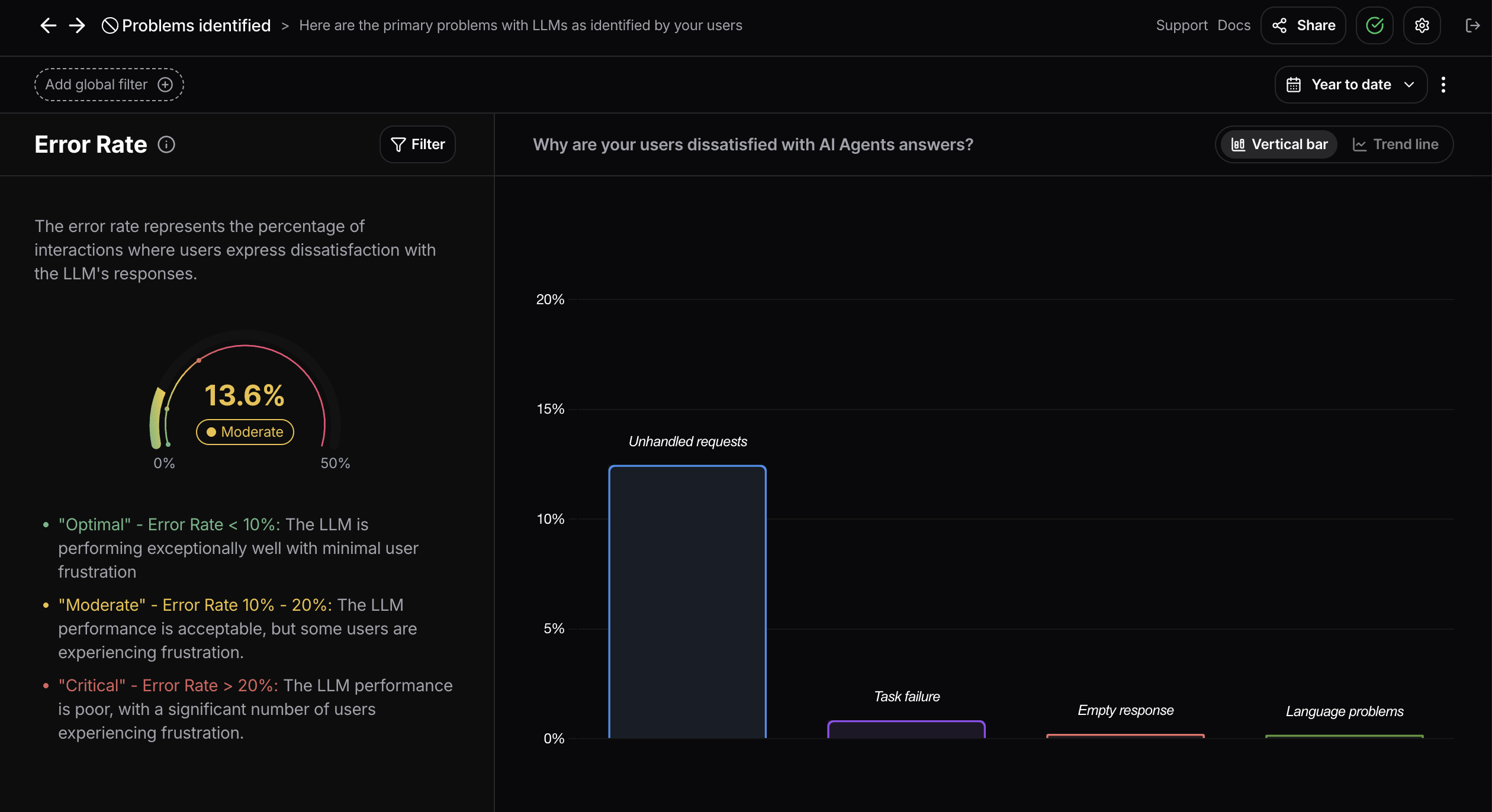

When a user interacts with your copilot, Nebuly captures what they asked, how the AI responded, whether they were satisfied, and whether they succeeded. It identifies patterns across thousands or millions of interactions. It reveals which tasks users attempt most often, where friction occurs, and which features drive adoption.
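The kind of aggregation described above can be sketched in a few lines. This is a generic illustration, not Nebuly's API or data model: the `Interaction` record, its fields, and the intent labels are hypothetical, and the sketch assumes intent, satisfaction, and success have already been labeled upstream (for example by a classifier or by explicit user feedback).

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Interaction:
    """One user exchange with the AI product (hypothetical schema)."""
    user_query: str
    ai_response: str
    intent: str      # assumed to be labeled upstream
    satisfied: bool  # e.g. from thumbs-up/down feedback
    succeeded: bool  # whether the user completed the task


def summarize(interactions: list[Interaction]) -> list[tuple[str, int, float, float]]:
    """Per intent: (intent, volume, success rate, satisfaction rate), most frequent first."""
    volume = Counter(i.intent for i in interactions)
    successes: defaultdict[str, int] = defaultdict(int)
    satisfied: defaultdict[str, int] = defaultdict(int)
    for i in interactions:
        successes[i.intent] += int(i.succeeded)
        satisfied[i.intent] += int(i.satisfied)
    return [
        (intent, count, successes[intent] / count, satisfied[intent] / count)
        for intent, count in volume.most_common()
    ]


logs = [
    Interaction("Summarize this contract", "Here is a summary.", "summarize_document", True, True),
    Interaction("Summarize the Q3 report", "Here is a summary.", "summarize_document", False, True),
    Interaction("Cancel my subscription", "I can't do that.", "cancel_subscription", False, False),
    Interaction("Summarize meeting notes", "Here is a summary.", "summarize_document", True, True),
]
for intent, count, success_rate, satisfaction_rate in summarize(logs):
    print(f"{intent}: {count} interactions, {success_rate:.0%} success, {satisfaction_rate:.0%} satisfied")
```

Sorting by volume answers "which tasks users attempt most often," and a high-volume intent with a low success rate is a natural place to look for friction.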

The value is in making the invisible visible. Without this layer of analytics, AI teams operate on assumptions. They do not know what users actually need. They cannot measure whether their product is working. They cannot prove ROI. Nebuly provides the data layer that makes AI products measurable, improvable, and accountable.

This is fundamentally different from visibility optimization. AEO and GEO help users find you. Nebuly helps you serve them once they arrive.

Two layers of a conversational world

AI changes how people discover information. It also changes how people interact with software. These are two separate layers, and they require different tools.

The first layer is discovery. This is where AEO and GEO operate. Brands need to be visible in AI-generated answers. They need to appear when users ask relevant questions. The challenge is external. The tools are visibility-focused.

The second layer is interaction. This is where Nebuly operates. Companies need to understand how users interact with their own AI products. They need to measure success, failure, and satisfaction. The challenge is internal. The tools are analytics-focused.

Confusing these layers leads to bad decisions. You would not use an SEO tool to understand why users abandon your website. The same logic applies here. Visibility tools cannot tell you why users stop using your copilot. They cannot explain why adoption stalls. They cannot identify which features create value.

Both layers matter. But they solve different problems at different moments. A strong AI strategy requires both.

What internal AI teams actually need to see

Inside AI products, teams need answers to different questions. Which intents dominate usage? Which tasks do users fail to complete? Where does sentiment turn negative? Which topics generate repeated friction?

These signals are not visible in raw server logs. They do not appear in visibility dashboards. They come from analyzing conversations as user behavior. This requires purpose-built analytics.
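Two of these questions, where sentiment turns negative and which topics generate repeated friction, can be sketched once each conversation has been reduced to a topic label and per-turn sentiment scores. This is an illustrative sketch, not any product's implementation: the topic labels, the sentiment scores (assumed to come from some upstream model), the -0.3 threshold, and the "at least two conversations" cutoff are all assumptions.

```python
from collections import Counter
from typing import Optional


def first_negative_turn(scores: list[float], threshold: float = -0.3) -> Optional[int]:
    """Index of the first turn whose sentiment falls below `threshold`, or None.

    `scores` are per-turn sentiment values in [-1, 1], assumed to come from
    an upstream sentiment model.
    """
    for index, score in enumerate(scores):
        if score < threshold:
            return index
    return None


def repeated_friction_topics(
    conversations: list[tuple[str, list[float]]],
    threshold: float = -0.3,
    min_count: int = 2,
) -> list[tuple[str, int]]:
    """Topics where sentiment turned negative in at least `min_count` conversations."""
    friction = Counter(
        topic
        for topic, scores in conversations
        if first_negative_turn(scores, threshold) is not None
    )
    return [(topic, n) for topic, n in friction.most_common() if n >= min_count]


# Hypothetical conversations: (topic label, per-turn user sentiment scores).
conversations = [
    ("billing", [0.2, -0.1, -0.6]),
    ("billing", [0.0, -0.5]),
    ("onboarding", [0.4, 0.5]),
    ("exports", [0.1, -0.4]),
]
print(first_negative_turn([0.2, -0.1, -0.6]))
print(repeated_friction_topics(conversations))
```

Keeping the turn index, rather than only a per-conversation flag, shows where in a flow users get frustrated, not just that they did.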

Nebuly processes conversations at scale. It identifies intent patterns, measures task completion, tracks sentiment shifts, and flags friction points. It does this without compromising privacy, with self-hosted deployment options for regulated industries.

Product teams use this data to improve UX. AI teams use it to refine prompts and workflows. Executives use it to prove ROI. Compliance teams use it to detect risk. The platform turns conversational interactions into actionable insights.

This is what user analytics means in the GenAI era. It is not about page views or session duration. It is about understanding what users are trying to do and whether your AI helps them succeed.

The practical takeaway

AEO and GEO help users find you. User analytics helps you serve them once they arrive. One does not replace the other. They solve different problems at different moments.

In a conversational world, a strong AI strategy requires both. Visibility ensures discovery. Analytics ensures delivery. Teams that invest in only one layer will struggle. They will either be invisible or ineffective.

Nebuly exists because the second layer has been missing. GenAI and agentic AI products are fundamentally conversational. They require analytics built for conversations. Purpose-built user analytics is not optional. It is the foundation for adoption, trust, and ROI in AI products. If you're curious to see how it works, book a demo with us.

Frequently Asked Questions (FAQs)
