The promise is always the same. GenAI will reduce support tickets. It'll streamline operations. It'll give customers instant answers, 24/7.

Then you launch. Users drop off mid-conversation. They rephrase the same question three times. They give up and call your support team anyway. 54% of GenAI projects never make it to full deployment, not because the models are broken, but because the user experience is.

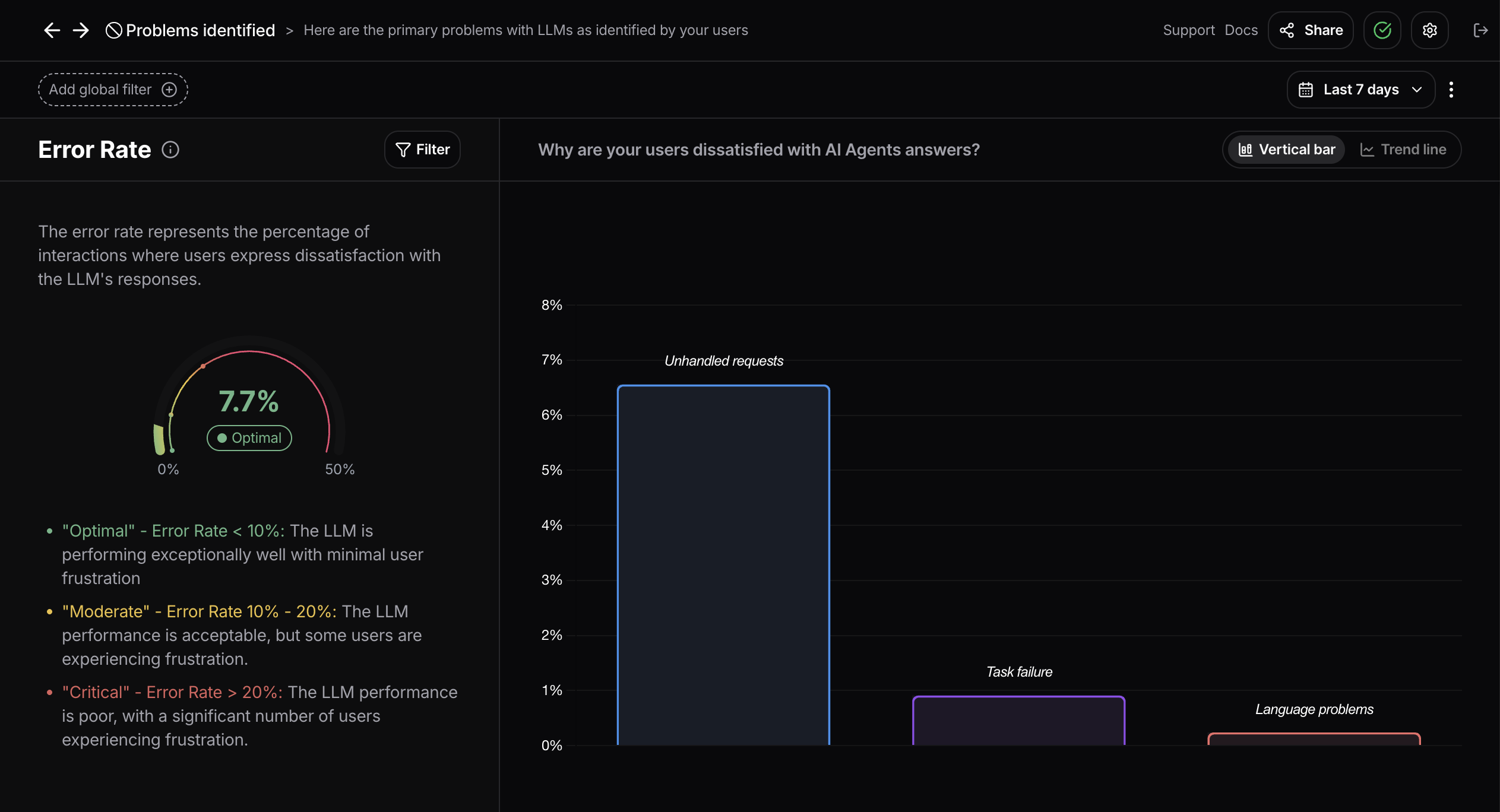

When teams look at "AI failures," they default to infrastructure metrics. Latency. Token costs. Hallucination rates. Those matter. But they miss what's actually killing ROI: the ways users experience failure in conversation. The moments they realize the AI can't help them and leave.

That's why we built Failure Intelligence: a taxonomy of six user-facing failure types. This isn't about monitoring your infrastructure. It's about understanding where your product is failing users.

The hidden failure problem in production AI

Your AI agent works. Technically, at least. It responds to queries. It doesn't crash. From an infrastructure perspective, everything looks fine.

But most AI systems fail users in ways traditional monitoring doesn't capture. A user asks a question that's slightly outside your policy. The AI refuses, but doesn't explain why. The user tries again, gets the same wall, and leaves. Zero errors logged. One lost user.

McKinsey's 2025 State of AI report shows that while 65% of organizations use generative AI regularly, only 39% report EBIT impact at the enterprise level. The gap isn't technical capability. It's that failures happen at the user experience layer, invisible to infrastructure logs.

Here is what actually matters: The user failure stack

Infrastructure monitoring tells you when your system breaks. It doesn't tell you when your users give up.

We built Failure Intelligence to track failures from the user's perspective instead. What does the user see when something goes wrong? The same technical error—say, a RAG retrieval failure—can show up as different user failures depending on context. That's why categorizing by user experience matters more than categorizing by backend error codes.

Here are the six types:

1. Unhandled requests

- What it is: The AI refuses or fails to respond to a legitimate user request, often because of policy constraints, missing tools, or guardrail triggers.

- Example conversation:

User: "Can you help me file a claim for water damage?"

AI: "I'm unable to assist with that request."

User: "Why not?"

AI: "I don't have access to that information."

- Common causes: Request falls outside defined scope. Tool or integration unavailable. Overly restrictive safety filters blocking benign queries.

- What to track: Volume of refusals per session, refusal rate by topic, user drop-off after refusal.

- How to fix: Expand policy boundaries, add tools, tune safety thresholds, provide explanatory fallback responses.
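The "what to track" list above translates fairly directly into code. Below is a minimal Python sketch, not Nebuly's implementation, that computes refusals per session, refusal rate by topic, and drop-off after a refusal. It assumes a hypothetical log in which each AI turn already carries a topic label and an `is_refusal` flag, set upstream by a classifier or a keyword rule. The `Turn` type and its fields are illustrative.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Turn:
    session_id: str
    topic: str          # hypothetical: topic label assigned upstream
    is_refusal: bool    # hypothetical: True if the AI declined the request
    is_last_turn: bool  # True if the user sent nothing after this AI turn


def refusals_per_session(turns):
    """Count refusals in each session."""
    return Counter(t.session_id for t in turns if t.is_refusal)


def refusal_rate_by_topic(turns):
    """Share of AI turns in each topic that were refusals."""
    totals, refusals = defaultdict(int), defaultdict(int)
    for t in turns:
        totals[t.topic] += 1
        refusals[t.topic] += t.is_refusal
    return {topic: refusals[topic] / totals[topic] for topic in totals}


def dropoff_after_refusal(turns):
    """Share of refusals after which the user left the conversation."""
    refused = [t for t in turns if t.is_refusal]
    if not refused:
        return 0.0
    return sum(t.is_last_turn for t in refused) / len(refused)
```

A high drop-off after refusals in one topic is a good hint about where to expand policy or add a tool first.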

2. Task failure requests

- What it is: The AI starts to help but delivers incomplete, incorrect, or unusable output. The user's task doesn't get done.

- Example conversation:

User: "What's the status of my insurance claim #3847?"

AI: "I'm unable to access claim status at the moment due to a technical issue. Please log in to your account or contact our claims team for the latest update."

- Common causes: Missing or incomplete data in the knowledge base. Context window limits truncating information. RAG retrieval returning partial matches.

- What to track: Task completion rate, follow-up clarification frequency, abandonment after partial responses.

- How to fix: Improve data coverage, optimize retrieval ranking, add structured outputs, implement task verification.
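One way to read "add structured outputs, implement task verification" is to have the model return a structured answer and check it before the user sees it. The sketch below assumes a hypothetical claim-status answer returned as a dict with `claim_id` and `status` fields. The field names and the `verify_claim_status` helper are illustrative, not part of any specific product.

```python
from dataclasses import dataclass, field

# Hypothetical schema: fields a claim-status answer must contain
# before it counts as a completed task.
REQUIRED_FIELDS = ("claim_id", "status")


@dataclass
class Verification:
    complete: bool
    missing: list = field(default_factory=list)


def verify_claim_status(output: dict) -> Verification:
    """Check a structured answer before it is shown to the user."""
    missing = [f for f in REQUIRED_FIELDS if not output.get(f)]
    return Verification(complete=not missing, missing=missing)


def task_completion_rate(outputs) -> float:
    """Share of structured answers that passed verification."""
    outputs = list(outputs)
    if not outputs:
        return 0.0
    return sum(verify_claim_status(o).complete for o in outputs) / len(outputs)
```

When verification fails, the list of missing fields gives you something concrete to act on: retry retrieval, ask the user a targeted question, or hand off, rather than presenting a partial answer as a finished one.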

3. Language problems

- What it is: Mismatch between user language or locale and AI capability. This includes wrong-language responses, poor translations, or culturally inappropriate outputs.

- Example conversation:

User: "¿Cuál es el estado de mi pedido?"

AI: [responds in English] "I can help you check your order status."

User: [repeats in Spanish]

AI: [still responds in English]

- Common causes: No multilingual support. Language detection failures. Training data skewed toward the primary language.

- What to track: Language mismatch rate, user language vs. response language, abandonment by locale.

- How to fix: Add multilingual models, implement language detection, route to language-specific agents.
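Language mismatch rate and a basic routing rule can be sketched in a few lines. This assumes that language codes for both the user message and the AI response come from some upstream language detector (not shown). `SUPPORTED` is a hypothetical set of languages your agent can answer in.

```python
from collections import defaultdict

# Hypothetical: languages the agent is configured to answer in.
SUPPORTED = {"en", "es"}


def pick_response_language(user_lang: str, default: str = "en") -> str:
    """Answer in the user's language when supported, else fall back."""
    return user_lang if user_lang in SUPPORTED else default


def mismatch_rate_by_locale(pairs):
    """Mismatch rate per user language.

    `pairs` is an iterable of (user_lang, response_lang) codes per turn,
    assumed to come from an upstream language detector.
    """
    totals, mismatches = defaultdict(int), defaultdict(int)
    for user_lang, response_lang in pairs:
        totals[user_lang] += 1
        mismatches[user_lang] += user_lang != response_lang
    return {lang: mismatches[lang] / totals[lang] for lang in totals}
```

Breaking the rate down by user language, rather than reporting one global number, is what exposes the "Spanish speakers keep getting English" pattern from the example.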

4. Empty responses

- What it is: The AI returns nothing. Blank outputs, null responses, or errors that surface as silence.

- Example conversation:

User: "Show me my account balance."

AI: [no response]

User: "Hello?"

AI: [no response]

- Common causes: Backend API failures surfacing as empty returns. Prompt engineering issues causing null outputs. Edge-case inputs breaking generation.

- What to track: Empty response rate, correlation with specific input patterns, recovery after empty response.

- How to fix: Add error handling, implement fallback responses, improve prompt robustness, surface API errors gracefully.
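The "surface API errors gracefully" fix amounts to never letting an empty or failed generation reach the user as silence. A minimal sketch, assuming `generate` is a hypothetical callable wrapping whatever model client you use; the fallback wording is illustrative. Each case is also logged, which gives you the raw data for an empty response rate.

```python
import logging
from typing import Callable, Optional

logger = logging.getLogger("assistant")

# Illustrative fallback text; adapt it to your product's tone.
FALLBACK = ("Sorry, I couldn't generate an answer just now. "
            "Please try again, or ask to talk to a person.")


def respond_with_fallback(generate: Callable[[str], Optional[str]], prompt: str) -> str:
    """Call the model and never return silence to the user."""
    try:
        text = generate(prompt)
    except Exception:
        # Broad catch on purpose: any backend failure should become a visible reply.
        logger.exception("generation failed for prompt %r", prompt)
        return FALLBACK
    if text is None or not text.strip():
        logger.warning("empty response for prompt %r", prompt)
        return FALLBACK
    return text
```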

5. User frustration signals

- What it is: Implicit feedback that the user isn't getting what they need. Repeated rephrasing, escalation language, negative sentiment, or abandonment patterns.

- Example conversation:

User: "I need to update my billing info."

AI: "I can help with account settings."

User: "No, I need to UPDATE MY BILLING."

AI: "You can manage your account in settings."

User: "This isn't working."

- Common causes: AI misunderstands intent repeatedly. Circular conversation loops. No escalation path offered.

- What to track: Rephrase frequency, sentiment decline across turns, caps/punctuation intensity, session abandonment velocity.

- How to fix: Improve intent detection, add escalation triggers, offer human handoff, shorten conversation paths.
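Several of the frustration signals above can be approximated with simple heuristics before reaching for a sentiment model. The sketch below flags three things. Rephrases are detected with the standard library's `difflib.SequenceMatcher`, shouting with an uppercase-letter ratio, and escalation language with a phrase list. The thresholds (0.6 similarity, more than 50% capitals) and `ESCALATION_PHRASES` are assumptions to tune on your own data, not established values.

```python
from difflib import SequenceMatcher

# Hypothetical phrase list; tune it for your own users and languages.
ESCALATION_PHRASES = ("isn't working", "not working", "speak to a human", "real person")


def caps_ratio(text: str) -> float:
    """Fraction of letters that are uppercase."""
    letters = [c for c in text if c.isalpha()]
    if not letters:
        return 0.0
    return sum(c.isupper() for c in letters) / len(letters)


def is_rephrase(prev: str, curr: str, threshold: float = 0.6) -> bool:
    """Treat consecutive user messages as a rephrase if they are textually similar."""
    return SequenceMatcher(None, prev.lower(), curr.lower()).ratio() >= threshold


def frustration_signals(user_messages):
    """Return (message_index, signal) pairs for one conversation's user turns."""
    signals = []
    for i, msg in enumerate(user_messages):
        if i > 0 and is_rephrase(user_messages[i - 1], msg):
            signals.append((i, "rephrase"))
        if len(msg) >= 10 and caps_ratio(msg) > 0.5:
            signals.append((i, "caps"))
        if any(p in msg.lower() for p in ESCALATION_PHRASES):
            signals.append((i, "escalation"))
    return signals
```

On the billing conversation above, this flags the second user message as both a rephrase and all-caps, and the third as escalation language.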

6. Thumbs down feedback

- What it is: Explicit negative feedback. The clearest signal, but also the rarest. Research shows roughly 80% of dissatisfied users don't leave explicit feedback, making this the tip of the iceberg.

- Example: User rates response as "not helpful" or clicks thumbs down.

- Common causes: Wrong answer. Unhelpful tone. Task not completed. Missing information.

- What to track: Thumbs down rate, feedback by conversation topic, correlation with implicit frustration signals.

- How to fix: Combine with implicit signals to find root causes. Review highly-downvoted conversations. A/B test response variations.
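Combining explicit and implicit signals can be as simple as two steps. First, measure how many frustrated sessions also received a thumbs down. Second, build a review queue from the union of both. A sketch, assuming each session already has a `thumbs_down` flag from your feedback widget and a `frustrated` flag from heuristics such as rephrase or caps detection. The `Session` type is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Session:
    session_id: str
    thumbs_down: bool  # explicit feedback from the UI
    frustrated: bool   # implicit signal, assumed computed upstream


def feedback_coverage(sessions):
    """Of the sessions showing frustration, the share that also got a thumbs down."""
    frustrated = [s for s in sessions if s.frustrated]
    if not frustrated:
        return 0.0
    return sum(s.thumbs_down for s in frustrated) / len(frustrated)


def sessions_to_review(sessions):
    """Union of explicit and implicit negatives, explicit ones first."""
    flagged = [s for s in sessions if s.thumbs_down or s.frustrated]
    return sorted(flagged, key=lambda s: (not s.thumbs_down, s.session_id))
```

A low coverage number is the "tip of the iceberg" effect measured on your own traffic.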

Beyond the six: Other patterns

These six types cover most failure patterns in conversational AI. But new issues emerge: novel failure modes, UI bugs, or unexpected AI behaviors that don't fit existing categories. Tracking these as "other" serves as an early warning system for patterns that might need their own category later.
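In code, the "other" bucket is simply the fallback for a turn that is known to have gone wrong but matches none of the defined types. A hypothetical sketch: the per-turn flag names (`refused`, `task_incomplete`, `failed`, and so on) are assumptions. A turn may land in more than one category, since a refusal, for example, often triggers frustration.

```python
from enum import Enum


class FailureType(Enum):
    UNHANDLED_REQUEST = "unhandled_request"
    TASK_FAILURE = "task_failure"
    LANGUAGE_PROBLEM = "language_problem"
    EMPTY_RESPONSE = "empty_response"
    USER_FRUSTRATION = "user_frustration"
    THUMBS_DOWN = "thumbs_down"
    OTHER = "other"


# Hypothetical mapping from per-turn flags to failure types.
RULES = [
    ("refused", FailureType.UNHANDLED_REQUEST),
    ("task_incomplete", FailureType.TASK_FAILURE),
    ("language_mismatch", FailureType.LANGUAGE_PROBLEM),
    ("empty", FailureType.EMPTY_RESPONSE),
    ("frustrated", FailureType.USER_FRUSTRATION),
    ("thumbs_down", FailureType.THUMBS_DOWN),
]


def classify(turn: dict) -> list:
    """Return every matching failure type; unexplained failures become OTHER."""
    types = [ftype for flag, ftype in RULES if turn.get(flag)]
    if not types and turn.get("failed"):
        types.append(FailureType.OTHER)
    return types
```

Reviewing what accumulates under `OTHER` is how a new category would earn its place in the taxonomy.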

Why categorizing failures matters

Carnegie Mellon research shows AI agents succeed only 30-35% of the time on multi-step tasks. The question isn't whether your AI will fail. It's whether you can see how it's failing and fix what matters most.

Different industries see different failure distributions. In financial services, task failures often dominate because users need complete, precise answers—partial information isn't just unhelpful, it's unusable. In customer support, unhandled requests tend to spike because policy boundaries are strict and users test them constantly.

Temporal patterns matter too. Right after launch, off-topic drift and user frustration tend to run high as users explore boundaries. After a few weeks, as guardrails tighten, task failures and language problems often become bigger issues.

The Failure Intelligence dashboard tracks these patterns automatically. You can see failure volume by type over time, filter by conversation topic to understand which parts of your product break most, and correlate failures with user retention to measure business impact.

One consistent finding across conversational AI: thumbs down alone doesn't give you the full picture. If you're only looking at explicit feedback, you're missing the majority of problems. The rest shows up as frustration signals, repeat queries, and silent drop-offs.

What this means for your business

McKinsey's research shows only 6% of companies see significant enterprise-wide value from AI. Part of the gap is invisible failures—your infrastructure logs say everything's fine, but users are leaving anyway.

In regulated industries, these failures carry extra weight. In finance, healthcare, or insurance, unhandled requests aren't just user experience problems—they're compliance risks. If your AI refuses to answer a covered question, that could be a regulatory issue.

Fixing these failures is also a cross-functional effort. Unhandled requests need product and policy teams. Task failures need engineering and data. User frustration needs UX. The taxonomy makes ownership clear.

What you should do about it

If you're running an AI product in production or planning to launch one:

Map your current data to the failure types.

You're likely already logging error rates, user feedback, or drop-offs. Take a week's worth of data and manually categorize a sample. You'll quickly see which types dominate your product.
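For the manual categorization step, a reproducible random sample keeps the exercise honest: you label what users actually hit, not the conversations that happen to be easiest to find. A minimal sketch, assuming conversations are dicts with a `started_at` datetime. The default sample size of 200 is arbitrary.

```python
import random
from datetime import datetime, timedelta


def sample_week(conversations, end: datetime, k: int = 200, seed: int = 0):
    """Draw up to k conversations started in the 7 days before `end`.

    A fixed seed keeps the sample reproducible for the people labeling it.
    """
    start = end - timedelta(days=7)
    in_window = [c for c in conversations if start <= c["started_at"] < end]
    rng = random.Random(seed)
    return rng.sample(in_window, min(k, len(in_window)))
```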

Build a dashboard and review it weekly.

Track failure volume by type, failure rate by conversation topic, and correlation with retention or task completion. Make this a standing agenda item in product or engineering reviews. The Nebuly dashboard does this automatically.
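If you don't have a dashboard yet, a first version of two of these views can come from a few lines over your logs. The sketch assumes failures are already labeled with a type and a date. It also assumes you know, per user, whether they hit a failure and whether they returned later. All input shapes are hypothetical, and the retention comparison is a simple split, not a causal estimate.

```python
from collections import Counter
from datetime import date
from typing import Iterable, Tuple


def weekly_volume(failures: Iterable[Tuple[date, str]]) -> Counter:
    """Count failures per (ISO year, ISO week, failure type)."""
    counts = Counter()
    for day, failure_type in failures:
        year, week, _ = day.isocalendar()
        counts[(year, week, failure_type)] += 1
    return counts


def retention_split(users: Iterable[Tuple[bool, bool]]):
    """Return (retention with a failure, retention without one).

    `users` yields (hit_failure, returned_later) pairs.
    """
    groups = {True: [0, 0], False: [0, 0]}  # [returned, total]
    for hit_failure, returned in users:
        groups[hit_failure][0] += returned
        groups[hit_failure][1] += 1

    def rate(group):
        returned, total = group
        return returned / total if total else 0.0

    return rate(groups[True]), rate(groups[False])
```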

Pick one high-volume type and run experiments.

If unhandled requests are your biggest problem, expand policy or add a tool and measure before/after. If task failures dominate, improve data coverage or RAG ranking. Fix one, measure impact, move to the next.
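To judge whether a before/after change in a failure rate is more than noise, a two-proportion z-test is a reasonable first check. A sketch using the pooled normal approximation, which assumes independent conversations and reasonably large samples. For small counts an exact test is safer, and a before/after split can still be confounded by changes in traffic over time.

```python
from math import erf, sqrt


def two_proportion_z(failures_before, n_before, failures_after, n_after):
    """Compare failure rates before and after a change.

    Returns (rate_before, rate_after, z, two_sided_p) using the pooled
    normal approximation.
    """
    p1 = failures_before / n_before
    p2 = failures_after / n_after
    pooled = (failures_before + failures_after) / (n_before + n_after)
    se = sqrt(pooled * (1 - pooled) * (1 / n_before + 1 / n_after))
    if se == 0:
        # Both rates are 0% or both are 100%: no evidence of a difference.
        return p1, p2, 0.0, 1.0
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal CDF, Phi(x) = (1 + erf(x / sqrt(2))) / 2.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return p1, p2, z, p_value
```

For example, going from 120 refusals in 1,000 conversations to 80 in 1,000 gives z ≈ 2.98 and p < 0.01.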

The shift to conversational interfaces is real. The analytics layer for understanding them is still being figured out. But if you can't see how your AI is failing users, you can't fix it.

The Nebuly Failure Intelligence dashboard automatically categorizes every conversation into these failure types, surfaces patterns by topic and time, and shows you which failures correlate with drop-off. We built it because teams had infrastructure monitoring and product analytics, but no way to understand conversational failure at scale. This is the layer in between. Try it yourself or book a demo to understand how the different failure types affect your use case.
