A top-tier bank with over 80,000 employees launched a GenAI program to boost internal productivity. What started with copilots for traders and equity research quickly expanded to HR, finance, and legal teams, each with its own assistant.

The challenge was clear: thousands of employees were interacting with AI tools across departments, but the bank had no visibility into how these conversations were actually going.

Why they needed visibility from the start

Traditional risk management wasn't built for conversational AI. The bank faced three critical blind spots.

→ First, employees were unknowingly putting personal and confidential information, including PII, into their prompts. Without monitoring, sensitive data could be exposed at scale.

→ Second, users asked questions outside policy boundaries with no system to flag risky behavior. In a regulated environment, this created compliance gaps.

→ Third, the AI sometimes returned incorrect or misleading information, especially in complex financial scenarios. These hallucinations created confusion and risk in regulated functions.

Real-time insights that changed everything

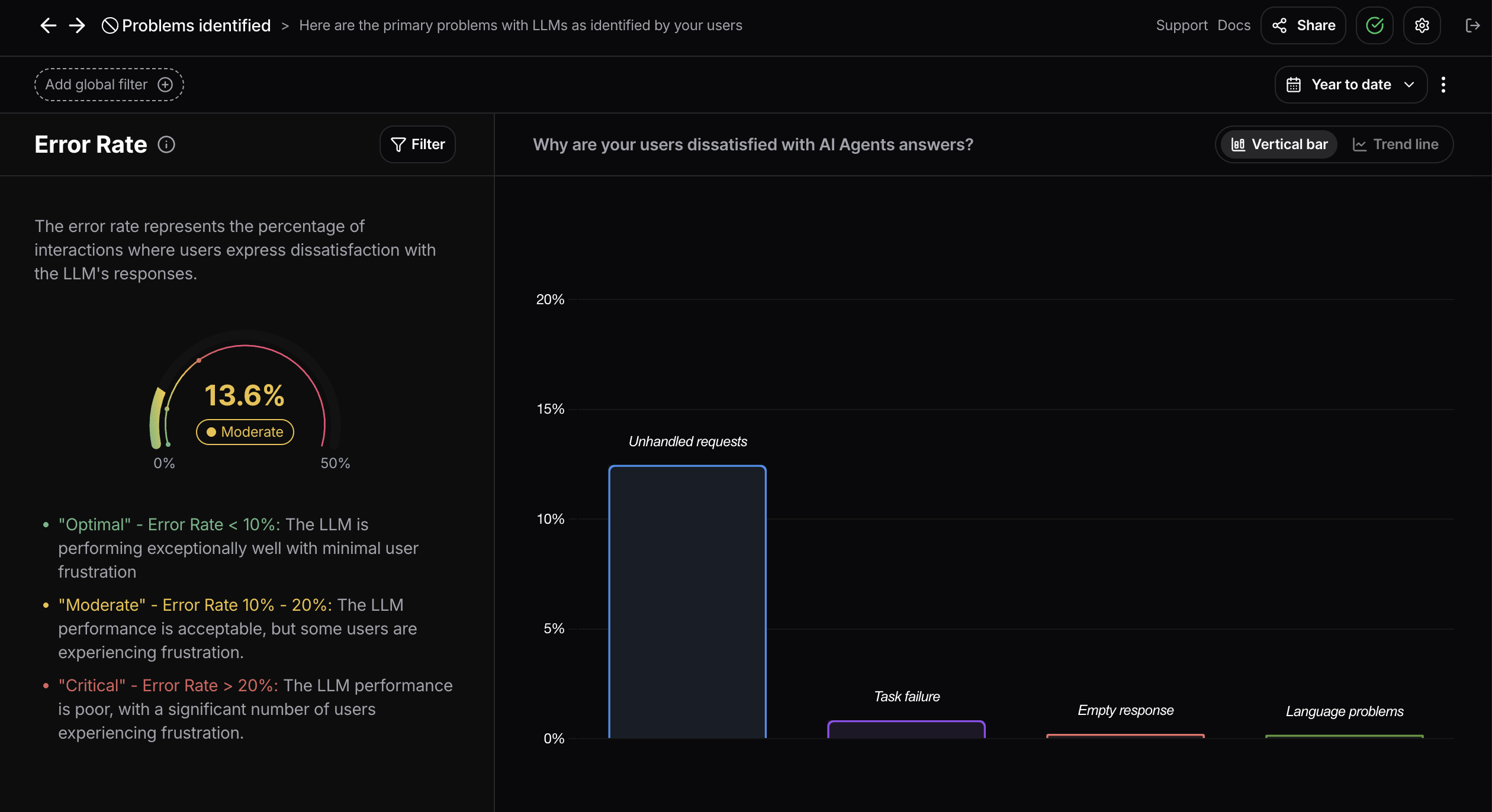

Nebuly's deployment within the bank's VPC provided immediate visibility across all departments. The analytics revealed patterns that would have taken months to surface through traditional methods.

Risk flagging identified prompts containing PII, restricted terms, and non-compliant usage in real time. The team could now spot issues before they escalated.

Failure detection surfaced wrong or hallucinated answers before user trust eroded. This became crucial for maintaining confidence in AI tools across sensitive departments.

Most importantly, the team could track how each department used its copilot, revealing distinct conversation patterns among traders, HR staff, and legal teams.

Early impact on operations

The results were immediate. Risk detection prevented dozens of potential compliance violations in the first 60 days.

User behavior analysis showed that legal teams had the highest error rates, but the cause was not technical: the AI needed better training on regulatory language and legal terminology.

HR teams showed the strongest adoption, with consistent daily usage and high satisfaction scores. This insight helped the bank expand similar tools to other people-focused departments.

What this means for other financial institutions

Start with visibility. When you deploy GenAI tools in regulated environments, monitoring isn't optional. Understanding how employees interact with AI tools helps you manage risk while enabling productivity.

The bank in this case avoided potential compliance issues by seeing the full picture of employee AI usage, not just system performance metrics.

Want to see how your organization's GenAI tools are being used? Book a demo to see how Nebuly provides real-time visibility into user conversations while maintaining strict data privacy and compliance standards.

Read more GenAI adoption stories:

- #1: What user analytics revealed about a GenAI copilot rollout