Traditional return on investment formulas were designed for straightforward automation, not for generative AI.

They struggle to capture the way a model quietly supports existing work, delivers benefits over long timeframes and improves decision making or knowledge access.

Research from McKinsey notes that generative AI can revolutionize internal knowledge management by allowing employees to ask questions in natural language, quickly retrieve stored information and make decisions faster.

These improvements are hard to quantify with a single number.

Why standard ROI misses the mark

Simple metrics such as sign-ups or total prompt volume do not confirm value.

McKinsey describes how generative AI increased issue resolution by 14% per hour in a customer operations study and reduced handling time by 9%.

These gains are only visible when you compare against pre-deployment baselines and combine multiple signals. Many benefits are qualitative. Experts advise tracking customer effort and agent satisfaction because qualitative improvements tell a powerful story.

Enterprise AI programs therefore need a broader framework that covers financial, operational and behavioral signals.

A three layer approach to GenAI ROI measurement

Financial signals

Start by converting time saved into cost savings.

In customer support, key metrics include ticket deflection rate, first contact resolution and average handle time. You can calculate agent productivity gains by multiplying the time saved per ticket by the number of tickets.

Multiply deflected tickets by the average ticket cost to estimate monthly savings.

For internal copilots, the equivalent metrics include hours saved when employees retrieve information more quickly, reduction in escalations and errors and decreases in reliance on paid tools.

Operational signals

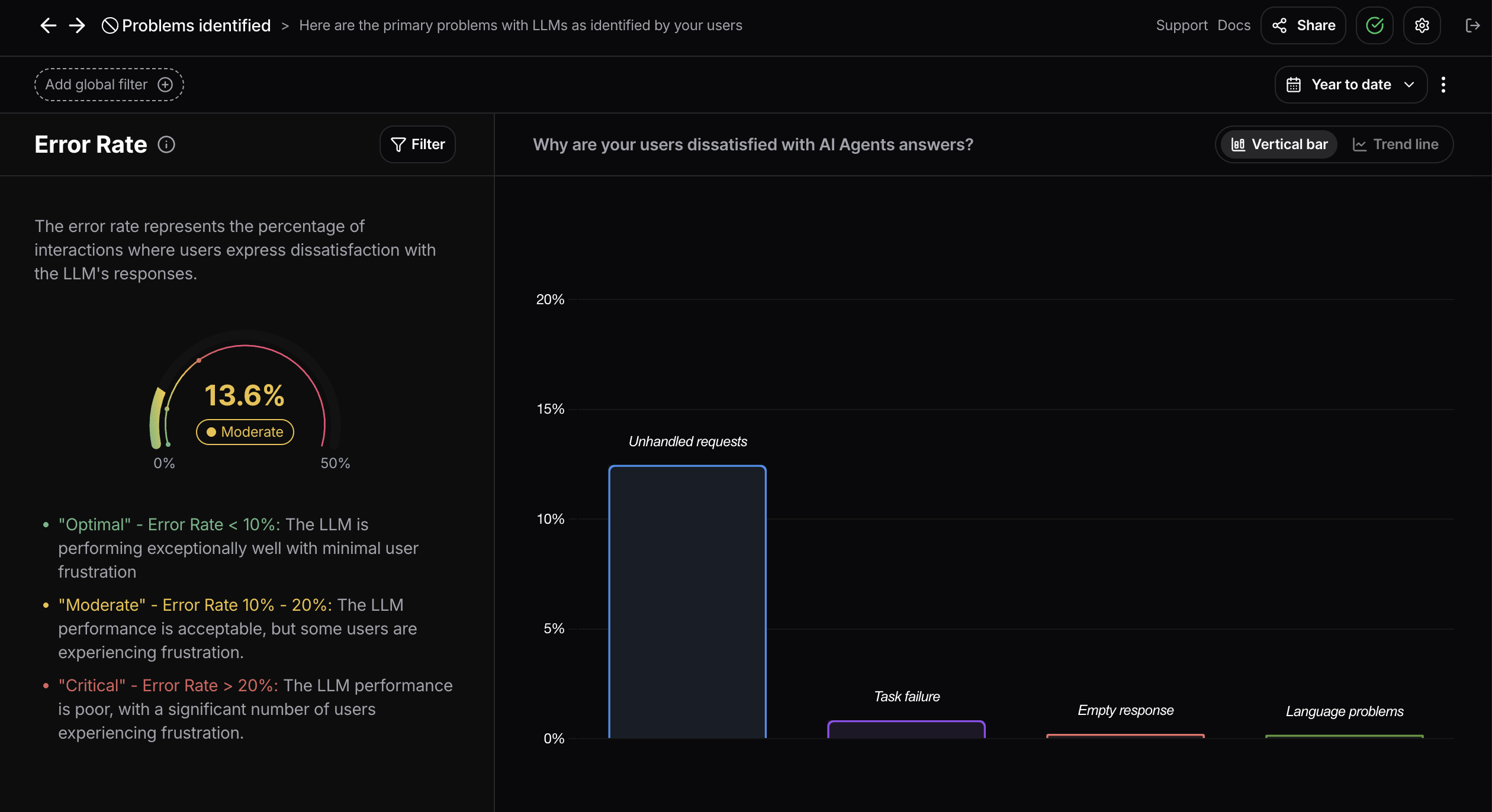

Operational metrics track the health of the model and its impact on workflows.

Nebuly’s guide to LLM monitoring emphasizes latency, throughput, accuracy and resource usage. Models that respond quickly and provide relevant answers keep employees productive; slow or off topic outputs erode trust.

User experience metrics such as sentiment, engagement and conversation flow are equally important. A model might be accurate yet still frustrate users if responses are confusing or the conversation stalls.

Product teams should therefore measure how quickly teams complete common tasks, whether adoption reduces reliance on other tools and whether the model’s suggestions improve speed or accuracy.

Behavioral signals

Generative AI products succeed when people embed them in their workflow.

Nebuly’s product usage analytics framework, for example, focuses on identifying user intent, mapping conversation flows and tracking emotional cues. It also distinguishes between engaged and frustrated users.

Behavioral metrics include retention, repeat usage and task completion rates.

→ Are users coming back to the internal copilot for help? Do they finish tasks through the assistant rather than switching back to old tools?

A deep analytics platform can analyze implicit feedback, such as follow up questions or copy and paste actions, because explicit ratings are rare.

Lessons from the field

Real examples show how a multi layered approach reveals value.

Our case studies illustrate this pattern. Iveco rolled out an internal copilot to help employees retrieve procedures and documentation. By using Nebuly to consolidate insights, it generated more than 100x more feedback data and provided a clear view of adoption, satisfaction and usage patterns (read the case study).

TextYess, a fast growing shopping assistant, used Nebuly to flag poor responses and identify common topics. The team saved 90% of their analysis time and reduced negative interactions by 63% (read the case study).

These stories show how combining financial, operational and behavioral signals drives continuous improvement.

To explore more examples across different industries, visit our case studies collection.

Making ROI visible

Before deploying an internal copilot or chatbot, establish a baseline for existing workflows by measuring how long it takes to answer a query, resolve a support ticket or complete a procedure.

Once the model is live, track behavioral signals, not just overall usage.

Build structured feedback into the interface; ask for quick ratings, but also analyze implicit feedback such as repeated questions or copy actions.

Use analytics to map friction points and successes.

Over time, compare financial metrics like hours saved and escalation rates against the baseline.

Finally, close the feedback loop by using these insights to refine the model and test improvements.

More than a single number, business value from generative AI is a pattern of financial savings, operational efficiency and behavioral adoption. By measuring all three and by creating a continuous feedback loop, enterprises can prove and improve the return on their GenAI investments.

To dive deeper into user analytics for LLMs, explore our guides on product usage analytics, LLM monitoring and LLM feedback loops. These resources explain how to map conversation flows, track sentiment and close the feedback loop for continuous improvement.

Want to see what 100x more feedback data looks like in practice? Book a demo or try the Nebuly Playground to discover how our user analytics platform can help you measure and improve the return on your GenAI initiatives.