Artificial intelligence is advancing rapidly, and the latest releases from Meta and Mistral, Llama 3.1 and Mistral Large 2, show significant progress in the field of large language models (LLMs). Each model has distinctive features and strengths. In this post, we'll explore the technical specifics of each model, discuss the companies behind them, and provide a comparative analysis to help you understand their differences and the use cases each one suits.

Technical Overview

Mistral Large 2

Release Date: July 24, 2024

Company: Mistral

Parameters: 123 billion

Licensing: Weights available under the Mistral Research License for research and non-commercial use; commercial use requires a separate commercial license from Mistral

Context Window: 128,000 tokens, up from 32,000 in the original Mistral Large

Multilingual Capabilities: Excels in English, French, German, Spanish, Italian, Portuguese, Dutch, Russian, Chinese, Japanese, Korean, Arabic, and Hindi

Performance: Reported to be competitive with top models such as GPT-4 and Claude 3.5 on benchmarks, despite having far fewer parameters than Llama 3.1 405B

Application: Suitable for tasks requiring high throughput, advanced reasoning, and code generation

Meta's Llama 3.1

Llama 3.1 is an update to Meta's Llama 3 model family.

Release Date: July 23, 2024

Company: Meta (formerly Facebook)

Parameters: 405 billion for the flagship model; 8B and 70B variants are also available

Licensing: Open weights under the Llama 3.1 Community License, which also allows developers to use the model's outputs to improve other models

Context Window: 128,000 tokens

Multilingual and Multitask Capabilities: Excels in general knowledge, steerability, tool use, and multilingual translation

Training Scale: Trained on over 15 trillion tokens, using a training stack Meta optimized to run on more than 16,000 H100 GPUs

Application: Suitable for long-form text summarization and multilingual conversational agents

The Companies Behind the Models

Meta

Meta, formerly known as Facebook, has been a major player in AI research for years. Its research lab FAIR (originally Facebook AI Research, now Fundamental AI Research) is known for producing influential, widely used models. Meta leverages its extensive compute and data resources to train large-scale models like Llama 3.1, aiming to create AI tools usable across many domains. Unlike competitors such as OpenAI and Anthropic, which keep their flagship model weights closed, Meta has chosen to release its LLM weights openly. Meta's Chief AI Scientist, Yann LeCun, is one of the best-known thought leaders in AI and frequently debates competing approaches to AI on X (formerly Twitter). Meta's CEO, Mark Zuckerberg, has said publicly that the company is investing tens of billions of dollars in GPUs to train future models. We expect Meta to remain a key player in the LLM field for a long time.

Mistral

Mistral, founded in 2023, is a young but rapidly growing AI company known for its focus on high-quality, specialized models. Its rigorous approach to training, data curation, and fine-tuning has made it a strong competitor, especially in technical and scientific applications. Headquartered in Paris, France, Mistral is one of the few major AI companies based in Europe. In June 2024, it raised 600 million euros in financing to expand. While Mistral's growth is impressive and its enterprise partnerships make it a credible player, we expect it to keep focusing on specialized and customized language models. In the LLM world, resources are key, and Mistral will find it hard to match the tens of billions of dollars that U.S. tech giants are investing in training their flagship general-purpose models.

Comparative Analysis

Model Size and Architecture

Llama 3.1, with 405 billion parameters, is significantly larger than Mistral Large 2 with 123 billion. A larger parameter count generally gives a model more capacity for complex behavior and nuanced language understanding, which suits it to a broader range of tasks, but it also makes the model more expensive to run.
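To make the size difference concrete, here is a back-of-the-envelope sketch of how much memory each model's weights alone would occupy at common numeric precisions. It assumes a fixed number of bytes per parameter and ignores the KV cache, activations, and runtime overhead, so real deployments need more. The dictionary names and the `weight_memory_gb` helper are illustrative, not part of any library.

```python
# Back-of-the-envelope estimate of the memory needed just to store model weights.
# Assumption: weights only; KV cache, activations, and runtime overhead are ignored.

# Approximate storage cost per parameter at common precisions.
BYTES_PER_PARAM = {
    "bf16": 2.0,  # 16-bit brain floating point
    "fp8": 1.0,   # 8-bit floating point
    "int4": 0.5,  # 4-bit integer quantization
}

# Parameter counts as stated in this post.
MODELS = {
    "Llama 3.1 405B": 405e9,
    "Mistral Large 2": 123e9,
    "Llama 3.1 70B": 70e9,
    "Llama 3.1 8B": 8e9,
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the approximate weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, params in MODELS.items():
        sizes = ", ".join(
            f"{dtype}: {weight_memory_gb(params, dtype):,.0f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{name}: {sizes}")
```

By this estimate, the 405B model needs roughly 810 GB in BF16, more than the 640 GB offered by a node with eight 80 GB GPUs, while Mistral Large 2 needs roughly 246 GB. Halving the bytes per parameter (for example, moving to FP8) halves the footprint, which is why quantization matters so much when serving the largest models.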

Language and Domain Proficiency

Mistral Large 2 excels at multilingual tasks and in technical domains. Mistral reports benchmark results comparable to top models like GPT-4 and Claude 3.5 at a much smaller size, which makes it particularly effective for tasks requiring advanced reasoning and technical knowledge, such as code generation.

Llama 3.1, trained on over 15 trillion tokens, delivers strong performance in general knowledge, multitasking, and multilingual translation. Meta highlights long-form text summarization and multilingual conversational agents as target use cases, making it a versatile tool for many applications.
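Long-form summarization usually starts with checking whether a document fits in the context window and, if not, splitting it into pieces that can be summarized separately and then combined. The sketch below assumes a rough heuristic of about 4 characters per token for English text (actual counts depend on each model's tokenizer) and a hypothetical 4,000-token budget reserved for the model's output. `estimate_tokens`, `fits_in_context`, and `chunk_text` are illustrative helpers, not part of any model SDK.

```python
import math

# Context window size cited for both Llama 3.1 and Mistral Large 2.
CONTEXT_WINDOW_TOKENS = 128_000
# Assumption: rough average for English text; real tokenizers vary.
CHARS_PER_TOKEN = 4.0


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the character count (heuristic only)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_000,
                    window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Check whether the prompt plus a reserved output budget fits in the window.

    `reserved_for_output` is a hypothetical budget, not a model setting.
    """
    return estimate_tokens(text) + reserved_for_output <= window


def chunk_text(text: str, max_tokens: int) -> list[str]:
    """Greedily pack paragraphs into chunks of at most ~max_tokens (estimated).

    Paragraph separators between chunks are dropped; a single paragraph longer
    than the budget is hard-split by characters.
    """
    max_chars = int(max_tokens * CHARS_PER_TOKEN)
    chunks: list[str] = []
    current = ""
    for para in text.split("\n\n"):
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate  # paragraph still fits in the current chunk
            continue
        if current:
            chunks.append(current)  # close the full chunk
        while len(para) > max_chars:
            chunks.append(para[:max_chars])  # hard-split an oversized paragraph
            para = para[max_chars:]
        current = para
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    document = "\n\n".join(f"Paragraph {i}: " + "lorem ipsum " * 50 for i in range(2_000))
    if fits_in_context(document):
        print("Send the whole document in one request.")
    else:
        chunks = chunk_text(document, max_tokens=100_000)
        print(f"Split into {len(chunks)} chunks for map-reduce summarization.")
```

Each chunk would then be summarized separately and the partial summaries combined in a final call (a map-reduce pattern). With a 128,000-token window, many long documents fit in a single request, which avoids the chunking step entirely.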

Efficiency and Accessibility

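Llama 3.1's open weights make it an accessible option for developers and researchers: the 8B and 70B variants run on far more modest hardware than the 405B model, and Meta also released an FP8-quantized version of the 405B model that can run on a single server node. Its large parameter count and extensive training allow it to perform well across a wide range of general-purpose tasks.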

Mistral positions Large 2 around cost efficiency and speed, making high-performance AI more practical for tasks requiring high throughput. Its 128,000-token context window and multilingual capabilities make it suitable for applications that demand detailed, contextually accurate outputs.

Suitable Use Cases

Llama 3.1:

- Long-form text summarization

- Multilingual conversational agents

- General knowledge and multitasking applications

- Educational tools requiring broad language support

Mistral Large 2:

- Technical documentation and academic research

- Code generation and debugging assistance

- Specialized industry applications (e.g., healthcare, engineering)

- High-throughput tasks requiring advanced reasoning

Conclusion

Both Llama 3.1 and Mistral Large 2 represent significant advancements in LLMs, each with its own strengths and ideal use cases. Llama 3.1, with its open licensing, strong multilingual capabilities, large size, and extensive training, is a versatile choice for general-purpose applications. Mistral Large 2, with its strong performance in technical domains and favorable cost/performance ratio, is better suited to specialized tasks requiring deep expertise.

Choosing between these models ultimately depends on your specific requirements. For broad, multilingual applications where you want a model that is closer to fully open source, Llama 3.1 is likely the better fit. For specialized, technical, or research-focused tasks, Mistral Large 2 may be the right choice.

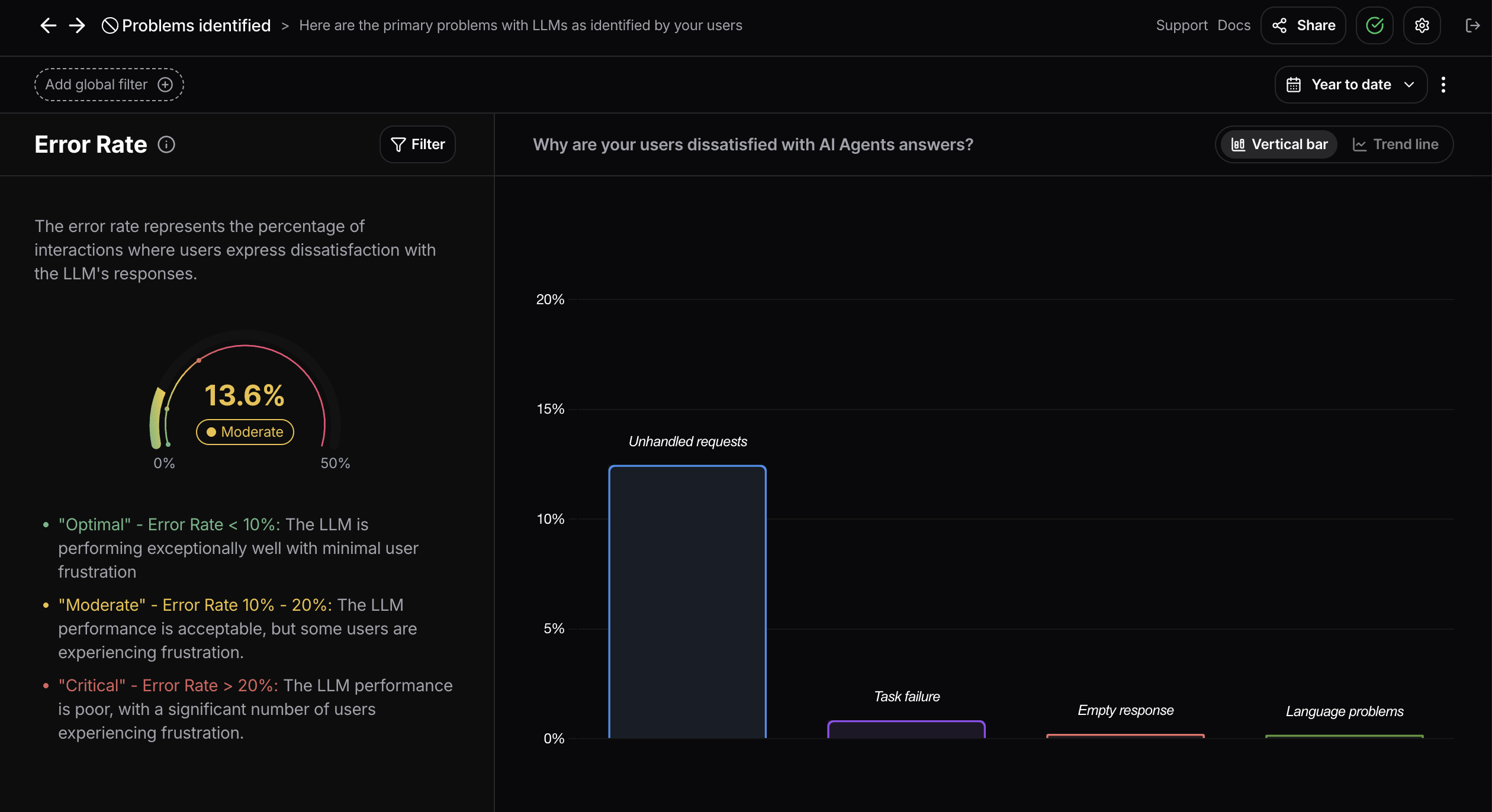

About Nebuly

Nebuly is an LLM user analytics platform. With Nebuly, companies capture valuable usage insights from LLM interactions and continuously improve their LLM-powered products. If you're interested in enhancing your LLM user experience, schedule a meeting with us today.