A comprehensive glossary of essential Large Language Model (LLM) and generative AI terminology. Includes user analytics terms, observability concepts, and the most common models in enterprise environments. Updated for September 2025.

User analytics terms

- LLM user analytics: Analytics frameworks designed specifically for conversational interfaces powered by LLMs. Includes metrics such as topics, drop-offs, risky prompts, and frustration detection.

- Topic analysis: Identifying and categorizing what users are talking about in their interactions. Comparable to “page views” in web analytics.

- Risky behavior analysis: Detecting prompts and outputs that involve sensitive data, compliance breaches, or malicious use. Important for enterprises in regulated industries.

- Intent achievement rate: Percentage of interactions where users achieve their stated goals.

- Conversation completion rate: Percentage of sessions that end with a meaningful outcome instead of abandonment.

- Rephrasing frequency: How often users need to restate or clarify prompts. High rates often indicate poor model understanding.

- Return rate: Percentage of users who return after their first session.

- Retention rate: Share of users who keep engaging over a given period rather than dropping off.

- Topic distribution: Spread of subjects users bring into GenAI conversations. Reveals actual demand patterns.

- Sentiment signals: Measurement of positive, neutral, or negative tone in user conversations.

- Abandonment points: Stages in a conversation where users drop out.

- Journey mapping: Step-by-step analysis of conversation paths to identify friction.

- Role-based success rates: Adoption and success rates segmented by user roles or functions.
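Several of the metrics above reduce to simple ratios over session logs. The sketch below assumes a hypothetical `Session` record in which each session is already flagged as completed and each rephrased prompt is already counted; how those flags are produced (heuristics, classifiers, LLM judges) is outside its scope. The function names `completion_rate`, `rephrasing_frequency`, and `return_rate` are illustrative, not from any analytics product.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Session:
    """One conversation session (hypothetical log schema)."""
    user_id: str
    completed: bool   # ended with a meaningful outcome rather than abandonment
    prompts: int      # number of user prompts in the session
    rephrases: int    # prompts flagged as restating an earlier prompt


def completion_rate(sessions):
    """Share of sessions that ended with a meaningful outcome."""
    return sum(s.completed for s in sessions) / len(sessions)


def rephrasing_frequency(sessions):
    """Rephrased prompts as a share of all user prompts."""
    total = sum(s.prompts for s in sessions)
    return sum(s.rephrases for s in sessions) / total if total else 0.0


def return_rate(sessions):
    """Share of users who came back for at least one more session."""
    per_user = Counter(s.user_id for s in sessions)
    return sum(1 for n in per_user.values() if n > 1) / len(per_user)


sessions = [
    Session("a", completed=True, prompts=4, rephrases=1),
    Session("a", completed=True, prompts=2, rephrases=0),
    Session("b", completed=False, prompts=5, rephrases=3),
    Session("c", completed=True, prompts=3, rephrases=0),
]
print(completion_rate(sessions), rephrasing_frequency(sessions), return_rate(sessions))
```

In this toy data, 3 of 4 sessions complete, 4 of 14 prompts are rephrasings, and 1 of 3 users returns.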

Observability terms

- Observability: The practice of monitoring the technical performance of AI systems. Complements user analytics by focusing on infrastructure and system health.

- Latency: Time taken by a model to return a response. A core system metric for responsiveness.

- Throughput: The number of queries a system can handle in a given period. Used to evaluate system capacity.

- Token usage: The count of tokens processed by a model. Essential for monitoring costs and efficiency.

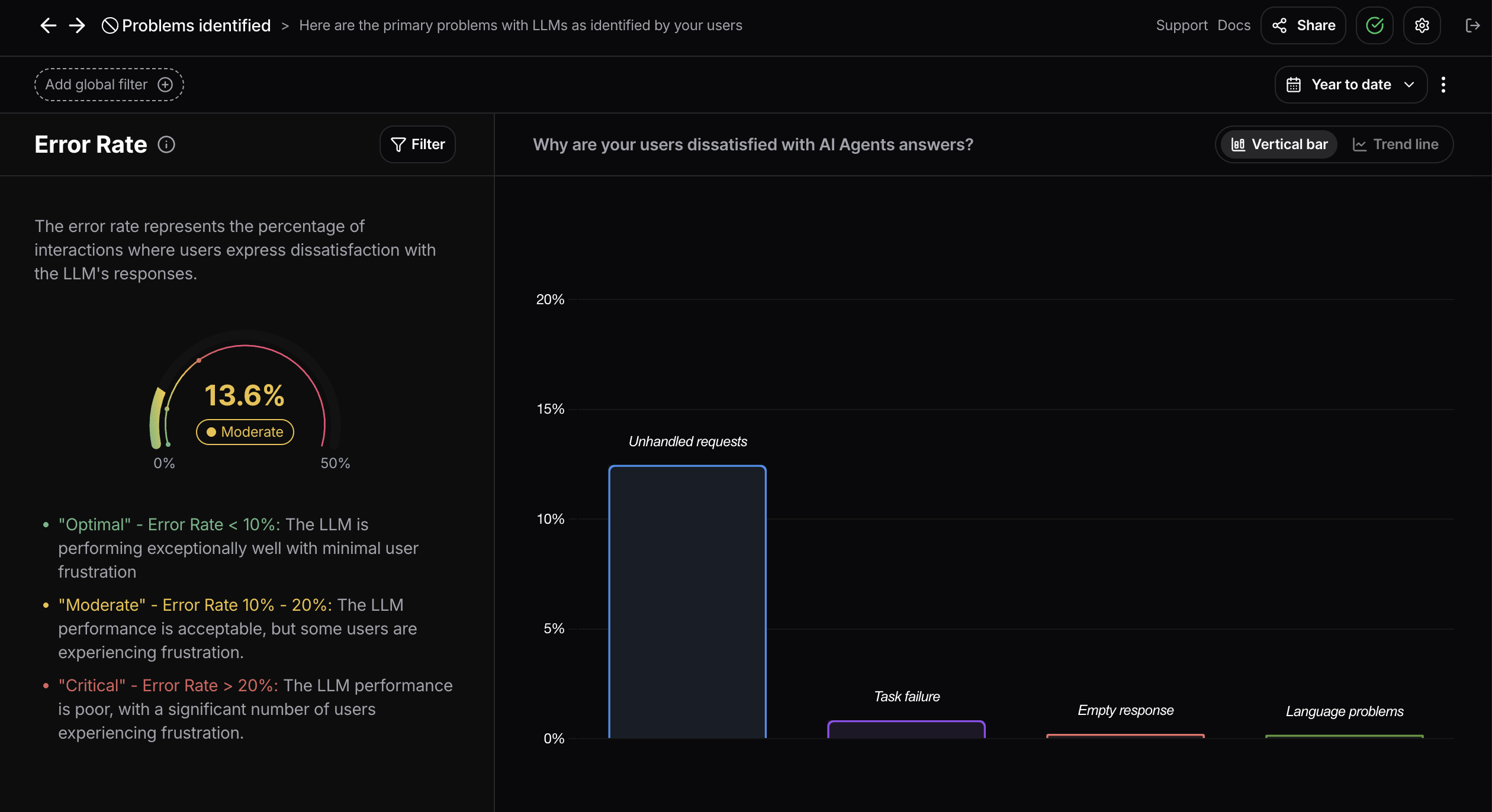

- Model error rate: Percentage of system-level failures such as timeouts, crashes, or invalid responses.

- User error rate: Percentage of wrong or misleading outputs that cause users to abandon or rephrase tasks.
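The system metrics above can be computed from a plain request log. This is a minimal sketch assuming a hypothetical `Request` record with start time, latency, token counts, and a failure flag; the per-1,000-token prices are made-up placeholders, and excluding failed calls from latency percentiles is a design choice, not a standard.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    """One model call from a request log (hypothetical schema)."""
    start_s: float      # when the request started, in seconds
    latency_s: float    # time until the response was returned (or the call failed)
    input_tokens: int
    output_tokens: int
    failed: bool        # timeout, crash, or invalid response


def percentile(values, p):
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(log, price_in_per_1k, price_out_per_1k):
    # Latency percentiles use successful calls only (assumes at least one succeeded).
    latencies = [r.latency_s for r in log if not r.failed]
    window_s = max(r.start_s + r.latency_s for r in log) - min(r.start_s for r in log)
    tokens_in = sum(r.input_tokens for r in log)
    tokens_out = sum(r.output_tokens for r in log)
    return {
        "p50_latency_s": percentile(latencies, 50),
        "p95_latency_s": percentile(latencies, 95),
        "throughput_rps": len(log) / window_s,
        "total_tokens": tokens_in + tokens_out,
        "cost": tokens_in / 1000 * price_in_per_1k + tokens_out / 1000 * price_out_per_1k,
        "model_error_rate": sum(r.failed for r in log) / len(log),
    }


request_log = [
    Request(0.0, 1.2, 500, 200, False),
    Request(0.5, 0.8, 300, 100, False),
    Request(1.0, 2.0, 800, 400, False),
    Request(2.0, 2.0, 400, 0, True),
]
# Prices per 1,000 tokens are made-up placeholders.
print(summarize(request_log, price_in_per_1k=0.5, price_out_per_1k=1.5))
```

Tail percentiles such as p95 usually matter more than averages for perceived responsiveness, which is why the sketch reports both p50 and p95.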

Agentic AI concepts

- Agentic AI: An approach where LLMs are structured as autonomous agents that can plan, act, and iterate toward a goal. Instead of only responding to prompts, agentic systems break tasks into steps, call tools or APIs, and adapt based on outcomes.

- AI agent: A modular system powered by an LLM that can perform tasks independently. Agents typically combine reasoning, memory, and external tool use.

- Agent orchestration: The process of coordinating multiple agents to work together on complex workflows, often with a central controller managing dependencies and results.

- Memory in agents: Storage of past interactions and intermediate results that lets agents recall context. Memory can be short-term (within a task) or long-term (persisted across tasks and sessions).

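- Tool use / function calling: A core capability of agentic systems where an LLM issues structured calls to external functions, APIs, or software tools to retrieve data, trigger actions, or complete tasks. The surrounding application executes each call and returns the result to the model.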

- Planning and reasoning: Techniques that let agents decompose large problems into smaller steps, execute them sequentially, and adjust plans if results are incorrect.

- Multi-agent systems: Environments where multiple agents collaborate or compete. Useful for simulations, negotiation tasks, or distributed enterprise processes.

- Agent alignment: Methods for ensuring that autonomous agents follow human-defined goals, comply with regulations, and avoid unintended behaviors.

- Evaluation of agents: Metrics beyond accuracy, including task completion rate, resource efficiency, safety, and robustness when operating independently in production.
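The agent concepts above (planning, tool use, memory) can be illustrated with a small plan-act-observe loop. In this sketch the "model" is a hypothetical scripted stub that follows a fixed two-step plan so the loop runs without any LLM API; the tools `get_weather` and `celsius_to_fahrenheit` and the message format are likewise assumptions, not any vendor's function-calling interface.

```python
import json


# Hypothetical tools; a production agent would call real APIs here.
def get_weather(city):
    return {"city": city, "forecast": "sunny", "high_c": 24}


def celsius_to_fahrenheit(celsius):
    return {"fahrenheit": celsius * 9 / 5 + 32}


TOOLS = {"get_weather": get_weather, "celsius_to_fahrenheit": celsius_to_fahrenheit}


def scripted_model(memory):
    """Stand-in for an LLM that returns either a tool call or a final answer.

    A real model would choose from the conversation; this stub follows a fixed
    two-step plan so the loop runs without any model API.
    """
    results = [json.loads(m["content"]) for m in memory if m["role"] == "tool"]
    if len(results) == 0:
        return {"tool": "get_weather", "args": {"city": "Paris"}}
    if len(results) == 1:
        return {"tool": "celsius_to_fahrenheit", "args": {"celsius": results[0]["high_c"]}}
    return {"answer": f"Sunny in Paris, high of {results[1]['fahrenheit']:.0f} F."}


def run_agent(goal, model, tools, max_steps=5):
    memory = [{"role": "user", "content": goal}]  # short-term memory for this task
    for _ in range(max_steps):
        decision = model(memory)
        if "answer" in decision:
            return decision["answer"], memory
        # The model only requests the call; the application executes it.
        result = tools[decision["tool"]](**decision["args"])
        memory.append({"role": "tool", "name": decision["tool"], "content": json.dumps(result)})
    raise RuntimeError("step limit reached without a final answer")


answer, memory = run_agent("What is the high in Paris today, in Fahrenheit?", scripted_model, TOOLS)
print(answer)
```

The `max_steps` cap is a simple guard against runaway loops; real agent frameworks typically add budgets, timeouts, and safety checks on each tool call.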

Core LLM and generative AI terms

- Large language model (LLM): A neural network trained on large datasets to understand, generate, and manipulate human language.

- Transformer architecture: The foundation of modern LLMs. Uses self-attention to capture relationships across text sequences.

- Self-attention mechanism: Process in which each token in a sequence scores its relevance to the other tokens and combines their information accordingly, enabling context understanding.

- Tokenization: Breaking text into smaller units (tokens) for processing by an LLM.

- GPT (generative pre-trained transformer): OpenAI’s family of transformer models, including GPT-4o and GPT-5.

- Pre-training: The initial training phase where a model learns general patterns from large datasets.

- Fine-tuning: Adapting a pre-trained model with task-specific or domain-specific data.

- Context window: The maximum number of tokens an LLM can process in a single request, typically covering both the input prompt and the generated output.

- BERT (bidirectional encoder representations from transformers): Google’s encoder-only transformer model, designed to understand context by reading text in both directions.

- Masked language model (MLM): Training method in which some tokens are hidden (masked) and the model predicts them from the surrounding context. Used to pre-train BERT.
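The self-attention mechanism described above can be sketched as single-head scaled dot-product attention, the form used in the original Transformer. This pure-Python version with toy 2-dimensional vectors is for illustration only; real models use tensor libraries, many heads, and batching. The optional `causal` flag shows the masking that decoder-style (GPT-like) models apply, while encoder models such as BERT attend in both directions.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def self_attention(x, w_q, w_k, w_v, causal=False):
    """Single-head scaled dot-product self-attention.

    x is a list of token vectors (seq_len x d_model); each w_* is d_model x d_k.
    Returns the output vectors and the attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    outputs, weights = [], []
    for i, q_i in enumerate(q):
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / scale for k_j in k]
        if causal:  # decoder-style masking: token i attends only to positions <= i
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        w = softmax(scores)
        weights.append(w)
        # Each output is the attention-weighted average of the value vectors.
        outputs.append([sum(w[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return outputs, weights


identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy embeddings for three tokens
out, attn = self_attention(tokens, identity, identity, identity)
```

Identity projections keep the example readable; in a trained model, `w_q`, `w_k`, and `w_v` are learned weights.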

Learning and interaction methods

- Zero-shot learning: Ability of a model to perform a task from instructions alone, without any examples of that task.

- Few-shot learning: Ability of a model to perform a task after seeing a small number of examples, typically supplied in the prompt.

- Prompt engineering: Crafting clear and effective prompts to improve model outputs.

- Natural language processing (NLP): Field of AI focused on processing and understanding human language.

- Sequence-to-sequence model: Model architecture mapping input sequences to output sequences, used in tasks like translation.
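The difference between zero-shot and few-shot prompting comes down to whether worked examples appear in the prompt; the model weights do not change. This sketch builds both variants with a hypothetical `build_prompt` helper; the "Input:/Output:" layout is an assumed convention, and other templates work as well.

```python
def build_prompt(instruction, query, examples=()):
    """Zero-shot when `examples` is empty; few-shot when it holds (input, output) pairs."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


instruction = "Classify the sentiment of the input as positive, neutral, or negative."
query = "The export keeps timing out."
zero_shot = build_prompt(instruction, query)
few_shot = build_prompt(
    instruction,
    query,
    examples=[("Love the new dashboard!", "positive"), ("It works, I guess.", "neutral")],
)
print(few_shot)
```

Ending the prompt with a bare "Output:" nudges the model to continue with just the label, a common prompt engineering pattern.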

Model architecture components

- Decoder: Transformer component that generates text output.

- Encoder: Transformer component that processes input into a structured representation.

- Attention head: One self-attention computation with its own learned query, key, and value projections. Different heads can learn to focus on different relationships in the context.

- Multi-head attention: Running several attention heads in parallel and combining their outputs, so a layer can capture diverse relationships at once.

- Position embedding: Representation of token positions to preserve word order.

- Embedding: Dense vector representation that captures the meaning of tokens, words, or phrases.
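Position embeddings and token embeddings are typically combined by element-wise addition. The sketch below uses the fixed sinusoidal scheme from the original Transformer paper because it fits in a few lines; many current LLMs use learned or rotary position embeddings instead. The helper names and the toy 4-dimensional embeddings are illustrative.

```python
import math


def sinusoidal_positions(seq_len, d_model):
    """Sinusoidal position encodings from the original Transformer paper.

    PE[pos][2i] = sin(pos / 10000^(2i / d_model)), PE[pos][2i+1] = cos(same angle).
    """
    table = []
    for pos in range(seq_len):
        row = []
        for dim in range(d_model):
            pair = dim - dim % 2  # the 2i shared by each sin/cos pair
            angle = pos / 10000 ** (pair / d_model)
            row.append(math.sin(angle) if dim % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(token_embeddings):
    """Add position encodings to token embeddings of the same width."""
    pe = sinusoidal_positions(len(token_embeddings), len(token_embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(token_embeddings, pe)]


same_token_twice = [[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]]
print(add_positions(same_token_twice))  # identical embeddings, different positions
```

Without the added position signal, the two identical token embeddings would be indistinguishable to self-attention, which is otherwise order-agnostic.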

Training and optimization

- Transfer learning: Applying a model trained on one task to another related task.

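- Backpropagation: Algorithm that computes the gradient of the loss with respect to every weight by applying the chain rule backward through the network. An optimizer such as gradient descent then uses these gradients to update the weights.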

- Gradient descent: Optimization method that minimizes prediction error (loss) by repeatedly adjusting weights in the direction opposite to the gradient.

- Overfitting: When a model performs well on training data but poorly on new data.

- Underfitting: When a model is too simple to capture patterns in data.

- Hyperparameters: Settings such as learning rate or batch size that are chosen before training rather than learned from data.

- Epoch: One full pass through the training dataset.

- Batch size: Number of samples processed in one training iteration.
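Several of the training terms above fit in one small loop. This sketch fits a single linear unit, y = w*x + b, with mini-batch gradient descent on mean squared error. Because the model is so small, the gradients are written out by hand; in a deep network, backpropagation computes the same kind of partial derivatives layer by layer. The learning rate, batch size, and epoch count are illustrative hyperparameters chosen for this toy data.

```python
import random


def train(data, lr=0.05, batch_size=4, epochs=300, seed=0):
    """Fit y = w*x + b by mini-batch gradient descent on mean squared error."""
    rng = random.Random(seed)
    data = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):  # one epoch = one full pass over the training data
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Backward pass: the chain rule gives dLoss/dw and dLoss/db for MSE.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            # Gradient descent step: move each parameter against its gradient.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


points = [(x / 10, 3 * (x / 10) + 1) for x in range(20)]  # noiseless samples of y = 3x + 1
w, b = train(points)
print(round(w, 3), round(b, 3))
```

Setting `lr` too high makes the updates overshoot and diverge, while too few epochs leaves the fit short of the target; both are typical symptoms of poorly chosen hyperparameters.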

Evaluation and performance

- Perplexity: Metric for how well a model predicts text, defined as the exponential of the average negative log-likelihood per token. Lower scores indicate better performance.

- Beam search: Text generation method that keeps the k most probable partial sequences at each step instead of committing to the single most likely next token.

- Inference: Using a trained model to generate predictions on new inputs.

- Natural language generation (NLG): Producing human-like text from structured inputs or prompts.

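- RLHF (reinforcement learning from human feedback): Training technique that uses human preference ratings to train a reward model, then fine-tunes the LLM with reinforcement learning to maximize that reward, improving alignment.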
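Perplexity and beam search can both be shown on a toy next-token model. The probability table `NEXT` below is entirely made up for illustration, as are the helper names; a real LLM conditions on the full context, not just the previous token.

```python
import math


def perplexity(token_probs):
    """exp of the mean negative log-likelihood of the tokens that actually occurred."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


# Toy next-token model with made-up probabilities: previous token -> {next token: prob}.
NEXT = {
    "<s>": {"the": 0.5, "a": 0.4, "</s>": 0.1},
    "the": {"cat": 0.4, "dog": 0.35, "</s>": 0.25},
    "a": {"cat": 0.9, "</s>": 0.1},
    "cat": {"</s>": 1.0},
    "dog": {"</s>": 1.0},
}


def beam_search(next_probs, beam_width=2, max_len=4):
    beams = [(0.0, ["<s>"])]  # (log-probability, tokens so far)
    for _ in range(max_len):
        candidates = []
        for logp, seq in beams:
            if seq[-1] == "</s>":  # finished sequences are carried over unchanged
                candidates.append((logp, seq))
                continue
            for tok, p in next_probs[seq[-1]].items():
                candidates.append((logp + math.log(p), seq + [tok]))
        # Keep only the beam_width highest-scoring sequences.
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:beam_width]
    return beams


best_logp, best_seq = beam_search(NEXT)[0]
print(best_seq, math.exp(best_logp), perplexity([0.4, 0.9, 1.0]))
```

A greedy decoder would pick "the" (0.5) at the first step and end with "the cat" at probability 0.2. With a beam width of 2, the search keeps "a" alive and finds "a cat" at probability 0.36. A model that assigned probability 0.25 to every token would have a perplexity of 4, as if choosing uniformly among four options.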

Advanced concepts

- Autoencoder: Neural network that compresses and reconstructs input data.

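- Cross-entropy loss: Function that measures how far a model’s predicted probability distribution is from the true labels; lower is better. It is the standard training objective for next-token prediction in LLMs.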

- Knowledge distillation: Training a smaller “student” model to reproduce the outputs of a larger “teacher” model, aiming to retain much of its performance at lower cost.

- Latent space: Abstract space where models represent learned features and patterns.

- Multimodal models: Models that process multiple input types such as text, images, and audio.
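Cross-entropy and knowledge distillation connect directly: a common distillation objective mixes ordinary cross-entropy on the true label with a term that pulls the student's softened output distribution toward the teacher's. This sketch follows the soft-target formulation popularized by Hinton et al.; the `temperature=2.0` and `alpha=0.5` defaults and the example logits are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target_index):
    """Negative log-probability assigned to the correct class (or next token)."""
    return -math.log(probs[target_index])


def distillation_loss(student_logits, teacher_logits, target_index, temperature=2.0, alpha=0.5):
    """Weighted sum of hard-label cross-entropy and a soft-target term.

    The soft term is KL(teacher || student) on temperature-softened distributions,
    scaled by temperature**2 so its gradient magnitude stays comparable across temperatures.
    """
    hard = cross_entropy(softmax(student_logits), target_index)
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))
    return alpha * hard + (1 - alpha) * temperature ** 2 * soft


teacher = [3.0, 1.0, 0.2]
close_student = [2.5, 1.2, 0.1]
far_student = [0.1, 1.0, 3.0]
print(distillation_loss(close_student, teacher, 0), distillation_loss(far_student, teacher, 0))
```

A higher temperature flattens the teacher's distribution, exposing how it ranks the wrong answers; that extra signal is what the student learns from beyond the hard label.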

GenAI models

- ChatGPT (GPT-5): OpenAI’s chat assistant, powered by its multimodal GPT-5 model. Widely used for support, productivity, and content tasks.

- Claude (Anthropic): Safety-focused model with large context windows and reliable outputs.

- Google Gemini: Multimodal model suite integrated across Google Workspace.

- Microsoft 365 Copilot: Productivity copilot embedded into Microsoft Office applications.

- Mistral: Mistral AI’s LLM family, including open-weight models optimized for efficiency and fine-tuning.

- Llama (Meta): Open-weight model suite widely used for enterprise on-premises deployments.