OpenAI and Anthropic have just released unprecedented reports showing how people are actually using AI.

OpenAI's study analyzed 1.5 million ChatGPT conversations from consumer users and found adoption has broadened beyond the tech-savvy, with usage creating economic value in both work and personal life.

The study revealed that about 49% of conversations involve "asking" for information, 40% involve "doing" tasks, and the remainder (roughly 11%) focus on creative expression. Anthropic's AI Economic Index revealed that AI usage, while spreading, is still concentrated in knowledge-economy hubs and specific professions.

These public insights confirm that AI is delivering real value. Anthropic's data also shows a rising tendency to fully delegate tasks to AI: "directive" automation grew from 27% to 39% of all Claude conversations over nine months, and among its API customers (primarily businesses and developers), a striking 77% of usage involves full task automation.

Those findings are eye-opening. But they also raise a crucial question for any organization building with AI: What about your users? If you've deployed an internal AI copilot or a customer-facing generative assistant, do you know how it's actually being used? Where are users finding value, and where are they getting frustrated or dropping off? In other words, how do you measure the economic index of AI usage inside your own company?

From global trends to your company's AI index

The reports from OpenAI and Anthropic offer a macro lens on AI adoption. They highlight broad patterns – like AI usage booming for everyday productivity tasks, or adoption clustering in high-tech regions. They even reveal how enterprise API users differ from consumers, preferring to delegate complete tasks to AI far more often. These insights are extremely valuable at a societal level. They show where AI is working and hint at where it isn't.

Now imagine having the same kind of insight within your organization. If AI is the new engine of productivity, you need a dashboard for that engine. User analytics for GenAI provides exactly that: a specialized analytics approach that gives you a real-time read on how people engage with your AI tools. It's essentially your own AI economic index, but focused on your users and use cases.

Why is this internal view so important?

Because without it, companies are flying blind after they deploy an AI solution. You might know how often the system is used or whether it's technically running without errors – but traditional analytics and observability tools don't show the human side. Conversational AI requires its own kind of analytics.

Typical web or app metrics miss the nuances of a dialogue: the intent behind a user's question, the context of their request, whether the AI's answer satisfied them or caused frustration. Without visibility into these dynamics, it's hard to improve the product in meaningful ways. In short, broad usage stats alone won't tell you if your AI is actually helping users achieve their goals.

- Would you like to see how user analytics tracking works in practice? Try Nebuly in our free playground. No sign-up needed.

Your own "AI economic index" for GenAI tools

User analytics platforms are built to fill this gap by analyzing how users interact with generative AI in depth. Think of it as creating an internal AI usage report for your company, similar to what OpenAI and Anthropic did externally. But instead of nationwide trends, you get insights tailored to your application, whether it's an employee-facing chatbot, a customer support assistant, or any LLM-powered feature.

What kind of insights are we talking about? Specialized user analytics captures the rich signals embedded in conversations that typical analytics would overlook:

Topic analysis and user intent: What are people trying to accomplish with your AI tool? Are they asking for policy advice, product recommendations, coding help, or something else entirely? Much like OpenAI's report categorized usage into Asking vs. Doing, user analytics can identify the intents behind user queries and automatically categorize conversation topics. For example, you might discover that 40% of interactions with your internal assistant are employees seeking procedural guidance, while 30% are attempts to offload tedious tasks. This topic analysis helps you prioritize which capabilities to improve or expand.

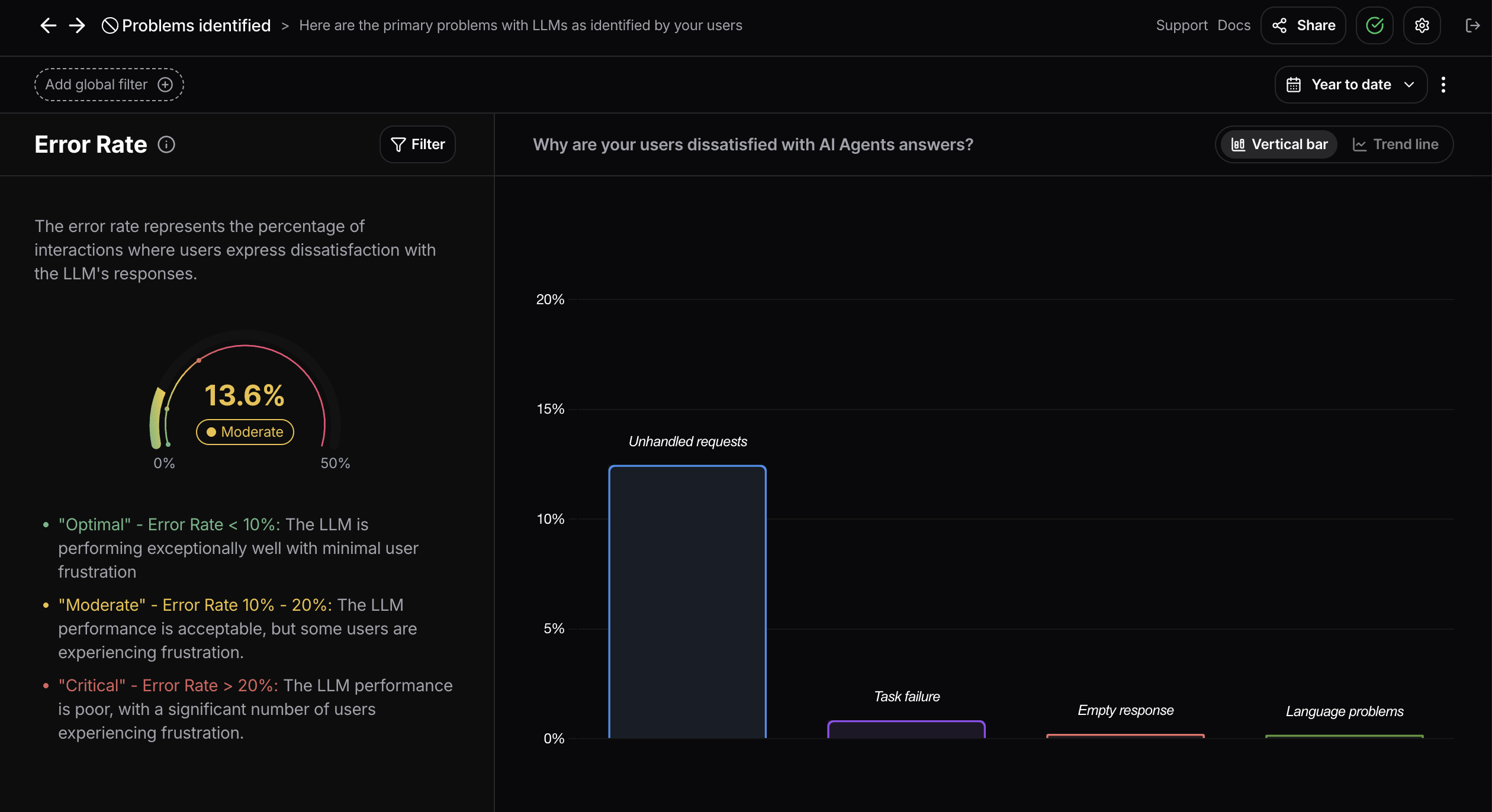

Error rates from the user perspective: Beyond technical system errors, user analytics tracks when the AI provides wrong answers, misses context, or fundamentally misunderstands what users are asking. These user-facing errors are critical because they directly impact trust and adoption. You can measure how often users receive responses that don't match their intent, get outdated information, or encounter AI responses that seem confused about the conversation context.

Retention rates and drop-offs: An "AI economic index" isn't just about volume of usage, but also about where users give up and whether they come back. User analytics tracks both immediate drop-off rates in conversational funnels and longer-term retention patterns. For instance, if many users quit the chat after a certain prompt or after the AI's lengthy response, that's valuable information. Perhaps the answer wasn't relevant, or it took too long to get to the point. You can also see if users who have poor experiences return to use the system again, helping you understand the lasting impact of AI interactions.

Risky behavior detection: User analytics can identify potentially problematic interactions such as users attempting to extract sensitive information, bypass safety guidelines, or engage in inappropriate conversations. This risky behavior rate helps organizations maintain compliance and safety standards while understanding patterns that might require additional guardrails or user education.

User satisfaction beyond explicit feedback: While explicit feedback like thumbs-up/down ratings would be ideal, the reality is that fewer than 1% of users typically provide this direct input. User analytics fills this gap by inferring satisfaction from implicit signals, such as users copying the AI's answer (indicating usefulness), asking follow-up questions that build on the response (showing engagement), or immediately switching to alternative methods after an interaction (suggesting frustration). By aggregating these signals, user analytics gives you a satisfaction score for your AI interactions that reflects the true user experience.
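To make the idea of aggregating implicit signals concrete, here is a minimal Python sketch. The signal names, the weights, and the 0 to 100 scale are all assumptions chosen for illustration; they do not describe how any particular platform computes its score.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical weights: positive signals raise the score, negative ones lower it.
SIGNAL_WEIGHTS = {
    "copied_answer": 1.0,             # user copied the AI's answer (usefulness)
    "follow_up_question": 0.5,        # user built on the response (engagement)
    "switched_to_alternative": -1.0,  # user left for another method (frustration)
}


@dataclass
class Conversation:
    conversation_id: str
    signals: List[str] = field(default_factory=list)


def conversation_score(conv: Conversation) -> float:
    """Sum the weights of the observed signals and clamp the result to [-1, 1]."""
    raw = sum(SIGNAL_WEIGHTS.get(s, 0.0) for s in conv.signals)
    return max(-1.0, min(1.0, raw))


def satisfaction_score(conversations: List[Conversation]) -> Optional[float]:
    """Average the per-conversation scores and rescale from [-1, 1] to [0, 100]."""
    if not conversations:
        return None  # no data, so no score
    mean = sum(conversation_score(c) for c in conversations) / len(conversations)
    return (mean + 1.0) / 2.0 * 100.0


# Made-up sample data, for illustration only.
sample = [
    Conversation("c1", ["copied_answer"]),
    Conversation("c2", ["copied_answer", "follow_up_question"]),
    Conversation("c3", ["switched_to_alternative"]),
    Conversation("c4", []),  # no implicit signal observed
]
print(satisfaction_score(sample))
```

Clamping each conversation keeps one very active session from dominating the average. In a real deployment the weights would need to be calibrated against whatever explicit feedback is available.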

In essence, user analytics turns the raw conversational data into actionable intelligence. It's not just that "X thousand questions were asked this week." It's what kinds of questions (topic analysis), how many led to successful outcomes (retention and satisfaction rates), what percentage resulted in poor experiences (error rates), and whether any interactions raised red flags (risky behavior rates).
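As a rough sketch of what that roll-up could look like, the Python snippet below turns labeled conversation records into the four headline figures. It assumes each record has already been labeled upstream with a topic and three boolean flags; the field names (`topic`, `successful`, `had_error`, `flagged_risky`) are hypothetical, and the sample data is invented.

```python
from collections import Counter


def usage_report(records):
    """Summarize labeled conversations: topic share plus success, error, and risk rates."""
    total = len(records)
    if total == 0:
        return {"total": 0, "topic_share": {}, "success_rate": None,
                "error_rate": None, "risky_rate": None}

    topics = Counter(r["topic"] for r in records)

    def rate(flag):
        # Fraction of conversations where the given boolean flag is set.
        return sum(1 for r in records if r[flag]) / total

    return {
        "total": total,
        "topic_share": {topic: n / total for topic, n in topics.items()},
        "success_rate": rate("successful"),
        "error_rate": rate("had_error"),
        "risky_rate": rate("flagged_risky"),
    }


# Made-up records, for illustration only.
records = [
    {"topic": "procedural_guidance", "successful": True, "had_error": False, "flagged_risky": False},
    {"topic": "procedural_guidance", "successful": True, "had_error": False, "flagged_risky": False},
    {"topic": "task_offloading", "successful": True, "had_error": False, "flagged_risky": True},
    {"topic": "other", "successful": False, "had_error": True, "flagged_risky": False},
]
report = usage_report(records)
print(report)
```

The hard part in practice is producing the labels, not adding them up; this sketch only covers the aggregation step.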

Armed with this comprehensive view, your team can continuously improve the AI assistant – just as product teams iterate on apps using user behavior data.

- Interested in seeing how it would work for your use case? Book a demo

Why GenAI user analytics is a new (and necessary) layer

It's worth emphasizing that GenAI user analytics is a new discipline, distinct from both traditional web analytics and from AI system monitoring. Many organizations initially assume that either their existing analytics stack or their model observability tools will cover AI usage. In practice, neither gives a clear picture of the user experience:

Not just observability: AI observability tools track things like model uptime, latency, or prompt failure rates. That's important for engineers, but it doesn't tell you anything about whether users are getting value. Your system could have 99.9% uptime and low error rates, yet users might still be unhappy with the answers. Observability watches the machine, whereas user analytics watches the human-machine interaction. For example, observability might ensure your copilot's API calls succeed; user analytics will tell you if the conversation succeeded or if the user walked away frustrated.

Beyond clickstreams: Traditional product analytics (web or mobile) looks at page views, clicks, and funnels. But a conversation isn't a series of page clicks; it's a nuanced, branching interaction. Key information lives in the text of messages and the tone of the exchange. Did the user say "Thanks, that answers my question" or did they ask the same thing twice in different words? These are the kinds of events user analytics captures, which Google Analytics or Mixpanel simply aren't built for.
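To illustrate one such conversational event, the Python sketch below counts how often a user's message closely repeats their previous one. It uses plain word overlap (Jaccard similarity) with an arbitrary threshold of 0.5, both of which are assumptions for illustration. Word overlap only catches near-identical rewordings; spotting the same question asked in genuinely different words would need semantic similarity, which is beyond this sketch.

```python
import re


def tokens(text):
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a, b):
    """Word-overlap similarity between two messages, from 0.0 to 1.0."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def count_rephrased_repeats(user_messages, threshold=0.5):
    """Count consecutive user messages that look like the same question asked again."""
    return sum(
        1
        for prev, cur in zip(user_messages, user_messages[1:])
        if jaccard(prev, cur) >= threshold
    )


# Made-up conversation, for illustration only.
messages = [
    "How do I reset my password?",
    "How can I reset my password?",
    "Thanks, that answers my question",
]
print(count_rephrased_repeats(messages))
```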

The creation of an "AI user intelligence" layer is quickly becoming recognized as essential. Industry analysts and forward-looking companies are already talking about the need for feedback and monitoring specifically around AI usage. Early adopters of GenAI analytics are gaining a competitive edge by iterating faster on their AI products, because they're guided by real user data rather than guesswork. It's no longer optional – if you want your AI initiative to succeed, you need to understand your users' interactions with it.

Just as every modern web service relies on user analytics to improve, AI products demand their own analytics layer. Deep user understanding is now mandatory for AI success.

Turning user insights into real outcomes

What does this look like in practice? Here are real examples of how companies have leveraged user analytics to transform their AI implementations and drive measurable improvements:

Top-tier global bank governs enterprise AI usage: A Fortune 500 global bank with over 80,000 employees launched a GenAI program that quickly expanded from traders and equity research teams to HR, finance, and legal departments. User analytics immediately revealed critical risks: employees were unknowingly including PII in prompts, asking questions outside policy boundaries, and receiving incorrect or misleading information that created confusion in regulated functions. The platform's real-time risk flagging identified prompts containing sensitive data and non-compliant usage, while failure detection surfaced wrong or hallucinated answers before user trust was lost. This visibility enabled the bank to maintain strict compliance standards while scaling AI adoption across thousands of employees, with all analytics deployed within its own secure VPC infrastructure.

Global manufacturer enhances dealer support: A Fortune 500 company rolled out a GenAI copilot to support thousands of equipment dealers worldwide with technical maintenance information. User analytics revealed striking adoption patterns: one dealer group achieved 400+ unique users in just 40 days, while other branches showed minimal engagement. More critically, the data exposed that error rates were significantly higher in Latin America and Europe. By filtering performance data by language, the team discovered that the higher failure rates were driven by non-English queries rather than by location-specific issues. This insight immediately shifted their development priorities toward multilingual performance improvements and enabled targeted outreach to underperforming branches.

European media company optimizes financial AI assistant: A large media organization building a financial AI assistant for subscribers used analytics from day one to shape development around real user behavior. Early usage patterns showed users primarily sought specific stock information, current market updates, and explanations of complex financial instruments. This behavioral data allowed the team to focus development on actual user needs rather than assumptions.

Global manufacturer scales internal productivity copilot: A Global 2000 manufacturer with 35,000+ employees deployed GenAI assistants for operational knowledge and internal documentation. User analytics provided clear insights into which use cases were emerging organically and where AI performance was falling short. This visibility significantly reduced the reporting burden on AI teams and enabled stakeholders to make data-driven decisions about scaling and improving their AI investments.

These examples demonstrate a consistent pattern: user analytics transforms AI deployments from black boxes into transparent, optimizable systems. Organizations gain immediate visibility into adoption patterns, performance gaps, and user satisfaction, enabling rapid iteration and targeted improvements that drive real business outcomes.
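The language-versus-region finding in the dealer support example comes down to segmenting the same failure data along two dimensions. A minimal Python sketch of that comparison follows. The record fields (`region`, `language`, `failed`) and all the data are invented for illustration and are not taken from the case study.

```python
from collections import defaultdict


def failure_rate_by(records, key):
    """Group records by the given field and return the failure rate of each group."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for r in records:
        totals[r[key]] += 1
        if r["failed"]:
            failures[r[key]] += 1
    return {group: failures[group] / totals[group] for group in totals}


# Made-up records, for illustration only.
records = [
    {"region": "North America", "language": "en", "failed": False},
    {"region": "North America", "language": "en", "failed": False},
    {"region": "Europe", "language": "en", "failed": False},
    {"region": "Europe", "language": "de", "failed": True},
    {"region": "Europe", "language": "de", "failed": False},
    {"region": "Latin America", "language": "es", "failed": True},
    {"region": "Latin America", "language": "es", "failed": True},
    {"region": "Latin America", "language": "en", "failed": False},
]

by_region = failure_rate_by(records, "region")
by_language = failure_rate_by(records, "language")

# Holding language fixed shows whether region still matters on its own.
english_only = [r for r in records if r["language"] == "en"]
by_region_english = failure_rate_by(english_only, "region")

print(by_region)
print(by_language)
print(by_region_english)
```

In this toy data, failures look regional at first glance, but the regional gap disappears once only English queries are considered, which points to language as the driver.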

The human side of AI success

The examples above also underscore that the human side of AI use matters just as much as the technical side. It's not enough that an AI model is powerful or that your infrastructure is scalable. What determines success is how people engage with the AI: their intentions, their frustrations, their satisfaction. User analytics platforms are built on the conviction that understanding those human signals is key to getting real value from AI.

Public reports like the Anthropic Economic Index and OpenAI's ChatGPT study have started to quantify the macro impact – showing that AI can boost productivity and is being adopted widely. User analytics brings that same kind of lens to the micro level, within the walls of your company (or within your user base). It gives you the power to answer questions like:

- Which use cases should we double down on? (Maybe most employees use the AI for brainstorming, suggesting you invest in making that experience seamless.)

- Where are we losing users? (Perhaps a significant percentage of sessions end after a particular error or confusing answer – a sign to fix that immediately.)

- How is AI changing our workflows? (You might discover that some teams have automated routine tasks entirely, while others only use the AI for suggestions. Such insights can inform training and change management in your organization.)

- Are users satisfied with the AI's help? (Tracking a metric like "conversation success rate" or user satisfaction over time shows if you're genuinely improving the experience or if tweaks are needed.)

By treating your conversational AI data as a rich source of user intelligence, specialized platforms help ensure your AI initiatives drive real, measurable outcomes. It's about making the AI-user interaction loop visible and optimizable.

Enter Nebuly: Leading the user analytics revolution

This is where Nebuly comes in. Nebuly is the leading user analytics platform specifically designed for GenAI applications. While the market has various observability and traditional analytics solutions, Nebuly focuses exclusively on understanding how humans interact with AI systems.

Nebuly captures every aspect of the user-LLM interaction, from intent detection and satisfaction measurement to friction point identification and drop-off analysis. The platform provides the comprehensive user intelligence layer that companies need to optimize their AI experiences and prove business value.

What sets Nebuly apart is its purpose-built approach to conversational analytics. Rather than retrofitting traditional web analytics for AI use cases, Nebuly was designed from the ground up to understand the nuances of human-AI conversations. This includes natural language processing capabilities that can detect user frustration, identify successful task completion, and track conversation quality over time.

- Try Nebuly today: no sign-up needed

In the end, giving companies their own "AI economic index" means empowering them with knowledge: the knowledge of how their people and AI systems collaborate. That knowledge is a powerful asset. It leads to faster iteration, higher adoption, and more value from AI investments. As conversational interfaces become ubiquitous, this kind of user-centric insight will be what separates AI projects that stagnate from those that succeed and keep improving.

Nebuly exists to make sure every company can achieve that success. With the right analytics, you're not guessing or going by gut feeling; you're learning directly from your users. Just as web analytics became indispensable for websites, GenAI user analytics is becoming indispensable for AI-powered products. Companies that embrace this will have a clear advantage: they will deliver AI experiences that truly resonate with users, driving productivity, satisfaction, and business value in the new age of AI.

Are you interested in learning more about how Nebuly can give your team actionable insights into your AI tool's usage? Book a demo today.