Until a few months ago, I had spent my entire career as a Services Architect at Dynatrace, helping some of the largest enterprises across EMEA set up their observability stack with Dynatrace. We spent a lot of time perfecting infrastructure metrics, application metrics, logging and tracing, but the end goal was always to link these signals to actual user behavior. We called it Digital Experience Monitoring (DEM).

The user interfaces, web and mobile, were deterministic. We used JavaScript tags to capture concrete actions: page loads, button clicks, scrolls, and image rendering. If a user clicked "Checkout" and the API failed, we knew exactly why the experience broke and what that meant for the business.

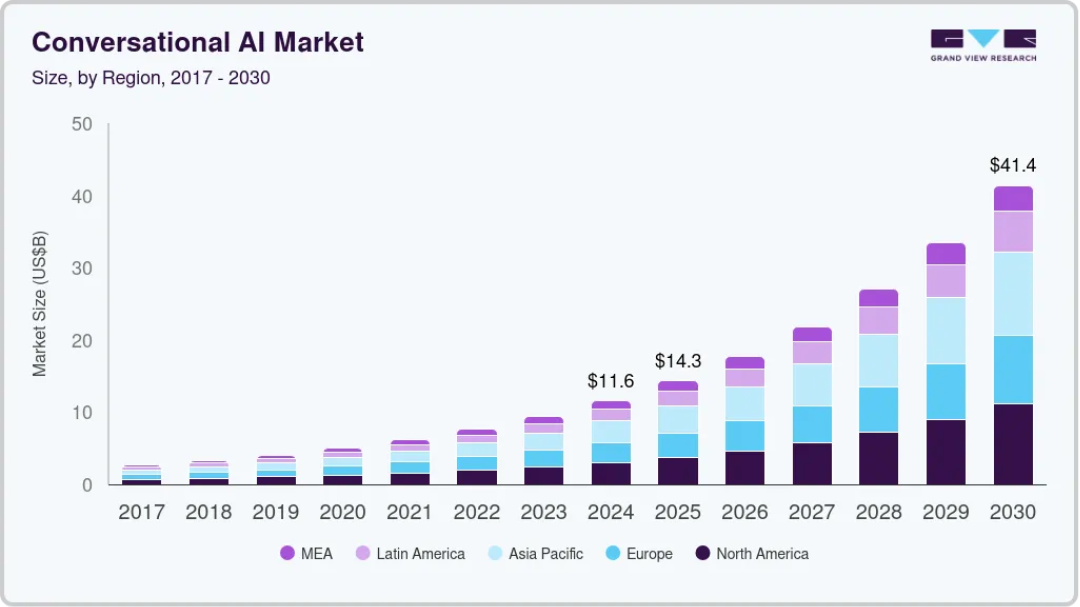

Then LLMs joined the game, and alongside the standard web and mobile interfaces, conversational interfaces are rapidly gaining traction.

Why Observability Is Indispensable, and What It’s Still Missing

When GenAI emerged, the major observability providers adapted their existing strengths to the new technology, and rightly so. They tackled the "infrastructure" of AI: performance monitoring.

Today, tools (including Dynatrace) are fantastic at visualizing token usage, tracking latency, calculating costs, and detecting and alerting on outages, using open standards such as OpenTelemetry.

This layer is indispensable: you must detect anomalies instantly, and you need these tools to keep your systems up and running.

Still, one piece is missing: user experience. Somehow we forgot about this layer for LLMs, even though we consider it the most important one for our usual customer interfaces, web and mobile.

We can answer: "Is my chatbot fast and cost-efficient?"

But we can’t answer: "How are my users interacting with the bot, and is it helpful?" And that, after all, is why we’re adding GenAI to our user experience.

Not monitoring the content of your conversations is like guaranteeing same-day delivery without checking that the right item is in the package.

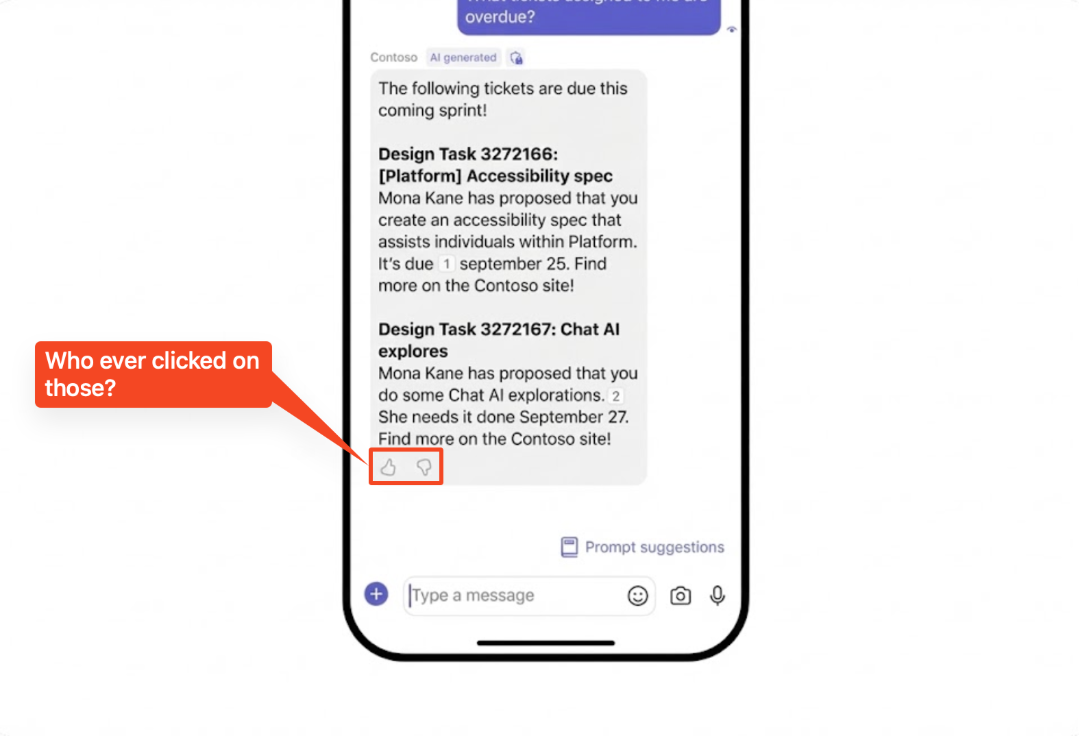

The Feedback Trap

The first instinct to close this visibility gap is usually to add thumbs up/down buttons or feedback boxes. I used to recommend sending that feedback into Dynatrace as events and tracking it on dashboards. In theory a good idea; in practice, less so. If you are waiting for your users to voluntarily tell you what is wrong with your product via a feedback box, you might as well wait forever.

As discussed, we want to monitor the user experience of our AI conversations.

So what does it actually look like to get user insights from conversations?

Common approaches

I see companies trying to fill this gap in a few ways:

1. Manual Evaluations: Reading logs one by one. This works for 10 chats. It fails for 10,000.

2. Home-Grown Tooling: Engineering teams trying to build complex NLP pipelines instead of focusing on their core product.

3. Developer-Centric Tracing Tools (e.g., Langfuse/LangSmith): These are excellent for developers debugging specific traces or managing prompt versions, but they are designed for debugging code, not analyzing user behavior at scale.

4. GenAI user analytics: Let’s look into it.

GenAI User Analytics

While working at Dynatrace, I never heard of user analytics for GenAI; I only came across it through my job at Nebuly. It’s all about extracting behavioral insights from your conversation logs.

When users talk to your GenAI, they are giving you a goldmine of data: what they want, why they want it, and when they are frustrated. They also give implicit feedback, for example by rephrasing a prompt or by plainly insulting your bot (it happens more often than you think).
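To make "implicit feedback" concrete, here is a deliberately naive sketch, not a description of how Nebuly or any other product detects these signals. It assumes that two consecutive user messages with very similar wording indicate a rephrase (the previous answer probably did not help), and it uses a small keyword list as a stand-in for frustration detection. `implicit_feedback`, `FRUSTRATION_MARKERS` and the 0.6 threshold are hypothetical names and values chosen for illustration; a real system would more likely rely on semantic similarity and trained classifiers than on character matching.

```python
from difflib import SequenceMatcher

# Hypothetical keyword list, for illustration only.
FRUSTRATION_MARKERS = {"useless", "stupid", "not what i asked", "wrong"}


def similarity(a: str, b: str) -> float:
    """Character-level similarity ratio in [0, 1] between two messages."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def implicit_feedback(user_turns: list[str], rephrase_threshold: float = 0.6) -> list[dict]:
    """Flag user turns that look like a rephrasing of the previous turn
    or that contain one of the (hypothetical) frustration markers."""
    signals = []
    for i, turn in enumerate(user_turns):
        text = turn.lower()
        # A near-duplicate of the previous question suggests the answer missed.
        if i > 0 and similarity(user_turns[i - 1], turn) >= rephrase_threshold:
            signals.append({"turn": i, "signal": "rephrase"})
        # Crude substring check standing in for a frustration classifier.
        if any(marker in text for marker in FRUSTRATION_MARKERS):
            signals.append({"turn": i, "signal": "frustration"})
    return signals


conversation = [
    "How do I reset my password?",
    "How can I reset my password?",
    "This is useless, not what I asked",
]
print(implicit_feedback(conversation))
# → [{'turn': 1, 'signal': 'rephrase'}, {'turn': 2, 'signal': 'frustration'}]
```

Neither signal needs the user to click anything, which is exactly why implicit feedback covers far more conversations than a thumbs up/down button.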

What defines GenAI user analytics?

User analytics for GenAI needs to give you the following:

- Topics & Intents: What are users actually trying to do?

- Business Risks: Which conversations reveal a risk to my business (e.g. "I can’t pay anymore", "the product I wanted is gone", "your page is slow")?

- Implicit Feedback: Analyzing retry behaviors and frustration signals.

- Sentiment & Emotion: Are users leaving the chat happy?

- Failure Intelligence: Which (sub-)topics have a high error rate (negative implicit feedback), and why? For example, was the question off-topic, does my bot struggle with specific languages, or was the prompt just bad?
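The "which (sub-)topics" half of failure intelligence boils down to an aggregation. The sketch below covers only that step and assumes each conversation has already been tagged with a topic (for instance by an intent classifier) and a flag for negative implicit feedback (for instance from rephrase or frustration detection). `Conversation` and `failure_rate_by_topic` are hypothetical names, and the harder "why" part (off-topic questions, language issues, bad prompts) is not modeled here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Conversation:
    topic: str               # assumed output of an upstream topic/intent classifier
    negative_feedback: bool  # assumed True if a negative implicit signal was detected


def failure_rate_by_topic(conversations: list[Conversation]) -> list[tuple[str, float, int]]:
    """Return (topic, share of conversations with negative feedback, count), worst first."""
    totals: dict[str, int] = defaultdict(int)
    failures: dict[str, int] = defaultdict(int)
    for conv in conversations:
        totals[conv.topic] += 1
        if conv.negative_feedback:
            failures[conv.topic] += 1
    rates = [(topic, failures[topic] / totals[topic], totals[topic]) for topic in totals]
    # Sort by failure rate, then by volume, so frequent problem topics surface first.
    return sorted(rates, key=lambda r: (r[1], r[2]), reverse=True)


sample = [
    Conversation("billing", True),
    Conversation("billing", True),
    Conversation("billing", False),
    Conversation("shipping", False),
    Conversation("shipping", True),
    Conversation("returns", False),
]
for topic, rate, count in failure_rate_by_topic(sample):
    print(f"{topic}: {rate:.0%} of {count} conversations")
# billing: 67% of 3 conversations
# shipping: 50% of 2 conversations
# returns: 0% of 1 conversations
```

Breaking the rate down by topic, rather than reporting one global number, is what points you at where the bot fails instead of merely that it fails.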

At Nebuly, we aren't trying to replace your observability stack; we are completing it. We provide the dedicated user analytics layer that translates conversational chaos into structured business insights.

If you already have infrastructure monitoring set up, great. You have the foundation. Now it’s time to turn the lights on and see what your users are actually doing.

If you’re interested in what we do, I suggest looking at our customer case studies.

But you can also see it live, in our public playground.

If you’d like a walkthrough with me or a colleague, you can book a demo right here.

My favorite blog post so far: User intent and implicit feedback in conversational AI: a complete guide
