What Is a Churn Signal in an AI Agent Conversation?

A churn signal in an AI agent conversation is any user behavior or statement that indicates reduced commitment, active evaluation of alternatives, or unresolved frustration, without the user explicitly complaining or submitting formal feedback.

For the most part, these signals are not caused by the AI agent. They reflect business-level problems (pricing concerns, competitive pressure, product dissatisfaction) that users happen to raise inside an agent conversation. The agent conversation is the surface where the signal appears. Whether the business ever sees it depends entirely on whether anyone is analyzing those conversations at the task level.

A user typing "Your competitors offer better rates than you" into a customer service agent is not complaining about the agent. They are signaling a business-level retention risk. If the agent responds with a generic acknowledgment and the conversation closes, that signal is gone. If this pattern repeats across hundreds of conversations per week with no visibility into it, the business is operating blind on a measurable retention problem that is already in its data.

Unlike a support ticket or a thumbs-down rating, churn signals in agent conversations are implicit. They require reading conversation content at scale, not waiting for users to self-report.

Why Do Most Customers Churn Without Leaving a Signal Teams Can Act On?

According to research by thinkJar, 25 out of 26 unhappy customers leave without complaining. A separate analysis published by CRM Buyer (February 2026) found that traditional support models focus on the 10% of customers who open tickets, while the remaining 90% who encounter friction leave silently.

There are three reasons this happens:

Complaining has a cost. Opening a ticket, waiting for a response, and explaining a problem requires effort. For most users, finding an alternative is faster and easier than escalating a complaint.

Feedback mechanisms are opt-in. Thumbs-down buttons, CSAT surveys, and NPS requests all require a user to take deliberate action. In most AI agent interactions, fewer than 5% of users engage with any feedback mechanism.

Behavioral signals are not collected or analyzed. Even when conversation data exists, most enterprises analyze it for system performance rather than for the business signals users are expressing. The churn signal is present in the data. It is simply not being read.

What Do Churn Signals Actually Look Like in AI Agent Conversations?

Churn signals in AI agent conversations fall into five identifiable patterns. Some reflect business-level problems the user is raising through the agent. Others reflect cases where the agent's handling of a sensitive interaction accelerates an at-risk user toward exit. In both cases, the signal is visible in conversation data and invisible in system metrics.

1. Competitive Comparison Statements

Phrases like "your competitors offer better rates," "I heard Company X handles this differently," or "I've been comparing options" are business-level churn signals. The user is not dissatisfied with the agent. They are in a decision window about the product or service itself, and they are expressing it through the agent conversation. When the agent responds with a generic acknowledgment rather than substantively addressing the concern, an opportunity to retain that user is lost, and the signal goes unread.

2. Repeated Task Failures Across Sessions

A user who asks the same question across multiple sessions and receives a vague, deflecting, or incorrect answer each time will not file a complaint. They will stop returning. Unlike the other signal types, repeated task failure is a case where agent performance is directly contributing to the problem. The product was deployed to solve a specific task, and it is not solving it. That failure accumulates across sessions until the user gives up.

3. Mid-Conversation Escalation Requests

"Can I speak to a human?" or "I'd rather talk to someone directly" mid-task can signal two different things. Sometimes the user's issue is genuinely complex and requires human judgment. Sometimes it reflects eroded trust in the agent's ability to handle the interaction. Either way, high escalation request rates within specific task categories indicate that a task type is not being handled adequately, whether the root cause is the agent's capability or the complexity of the underlying business problem.

4. Conversation Abandonment Without Resolution

When a user stops responding mid-thread without reaching a resolution, something failed. It may be the agent's handling of the interaction, or it may be that the user raised a concern the agent could not meaningfully address. No feedback is submitted either way. No signal is logged in standard monitoring systems. Across a large user base, abandonment rate by task type is a leading indicator of where business-level friction is concentrating, and where it is going unaddressed.

5. Price and Value Challenges

Any statement containing words such as "expensive," "overpriced," or "not worth it," or referencing a specific competitor's pricing, is a retention signal about the business, not the agent. The user is telling the agent something the business needs to hear. If the agent's response does not engage with the concern substantively, as generic responses rarely do, the interaction closes without the business ever knowing the signal was there.
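Three of the five patterns (competitive comparisons, escalation requests, and price or value challenges) appear directly in what the user types, so a first pass at flagging them can be sketched with keyword matching. The example below is a minimal illustration only: the pattern lists and the `detect_signals` helper are hypothetical assumptions, not a validated taxonomy, and keyword matching will miss paraphrases and produce false positives. Repeated task failures and abandonment need session-level data rather than single messages and are not covered here.

```python
import re

# Hypothetical keyword patterns for three of the five signal types.
# These lists are illustrative assumptions, not a validated taxonomy.
SIGNAL_PATTERNS = {
    "competitive_comparison": [
        r"\bcompetitors?\b",
        r"\bcomparing options\b",
        r"\bhandles this differently\b",
    ],
    "escalation_request": [
        r"\bspeak to a human\b",
        r"\btalk to someone\b",
    ],
    "price_value_challenge": [
        r"\bexpensive\b",
        r"\boverpriced\b",
        r"\bnot worth it\b",
        r"\bbetter rates\b",
    ],
}


def detect_signals(message: str) -> set[str]:
    """Return the signal types whose patterns match the user message."""
    text = message.lower()
    return {
        signal
        for signal, patterns in SIGNAL_PATTERNS.items()
        if any(re.search(pattern, text) for pattern in patterns)
    }


if __name__ == "__main__":
    # Matches both the competitive and the price/value patterns.
    print(sorted(detect_signals("Your competitors offer better rates than you")))
```

A single message can carry more than one signal, which is why the helper returns a set rather than a single label.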

Why Do Standard AI Usage Metrics Miss Churn Signals?

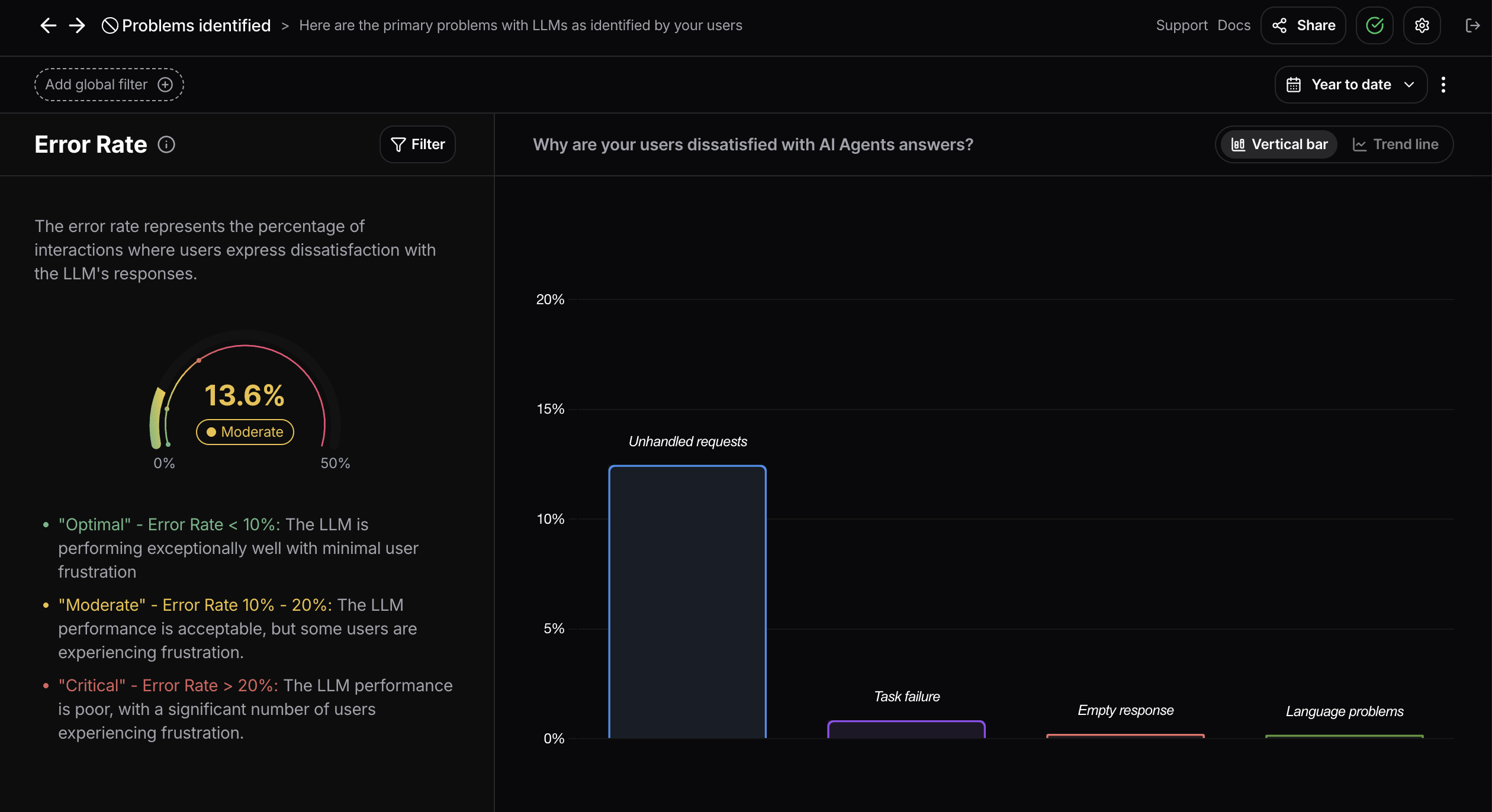

Standard AI monitoring tracks infrastructure: response latency, uptime, token consumption, error rates, and model performance scores. These metrics answer the question "is the system working?"

They do not answer the question "what are users telling the agent, and are those conversations being handled in a way that protects retention?"

In the competitive pricing example, every infrastructure metric would show a successful interaction. The agent responded within normal latency. No errors were logged. Tokens were consumed as expected. The system worked, yet the business-level signal was missed completely: a user in a decision window received a response that acknowledged their concern without addressing it.

What is missing is task-level analysis: the ability to measure whether specific categories of agent interactions are handling the signals users express in ways that lead to resolution rather than silent exit.

Task-level analysis requires reading conversation content, not just system telemetry. It requires identifying behavioral patterns like the five types above across thousands of interactions, without relying on users to self-report. This is the gap between observability (knowing the system is running) and user analytics (knowing what users are actually expressing, and whether it is being handled).

What Does Task-Level Visibility Enable That Standard Monitoring Does Not?

When enterprises can measure how specific task categories are being handled across agent conversations, three capabilities become available that are not possible with infrastructure monitoring alone.

Identifying which task categories carry the most unread churn risk. An agent may handle billing inquiries at a 91% resolution rate while competitive objection handling closes without substantive engagement 66% of the time. That discrepancy is invisible in system metrics but measurable in conversation data. Knowing where business-level signals are being missed tells product and customer success teams exactly where to intervene.

Connecting conversation patterns to retention outcomes. Conversation data makes it possible to test whether users who raise a competitive pricing concern and receive a generic response retain at a different 30-day rate than users whose concern is addressed substantively. Where that correlation holds, customer success teams can prioritize proactive outreach based on conversation behavior rather than waiting for renewal risk to appear in CRM data.
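As a rough sketch of that comparison, the snippet below groups users by how their concern was handled and computes a 30-day retention rate per group. The `UserOutcome` record, its field names, and the "generic" and "substantive" labels are assumptions made for illustration; in practice the labels would come from conversation analysis and the retention flag from product or CRM data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UserOutcome:
    user_id: str
    handling: str        # hypothetical label: "generic" or "substantive"
    retained_30d: bool   # whether the user was still active after 30 days


def retention_by_handling(outcomes: list[UserOutcome]) -> dict[str, float]:
    """Return the 30-day retention rate for each handling label."""
    totals: dict[str, int] = defaultdict(int)
    retained: dict[str, int] = defaultdict(int)
    for outcome in outcomes:
        totals[outcome.handling] += 1
        retained[outcome.handling] += int(outcome.retained_30d)
    return {label: retained[label] / totals[label] for label in totals}


if __name__ == "__main__":
    # Made-up sample data, for illustration only.
    sample = [
        UserOutcome("u1", "generic", True),
        UserOutcome("u2", "generic", False),
        UserOutcome("u3", "substantive", True),
        UserOutcome("u4", "substantive", True),
    ]
    print(retention_by_handling(sample))
```

A gap between the two rates is a correlation, not proof that handling caused the difference, so it is best treated as a prioritization input rather than a causal estimate.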

Quantifying the business impact of handling improvements. If competitive objection handling improves from generic acknowledgment to substantive engagement, and users who receive that handling retain at a higher rate, the revenue impact is calculable. This is the connection between how an agent handles business-level signals and measurable business outcomes, a connection that infrastructure monitoring cannot establish.
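The arithmetic behind that calculation can be sketched in a few lines. The function name, its parameters, and the numbers in the usage example are hypothetical; the sketch assumes a simple model in which the additional retained revenue is the number of users raising the concern, multiplied by the change in retention rate, multiplied by annual revenue per user.

```python
def retained_revenue_delta(
    users_raising_concern: int,
    baseline_retention: float,
    improved_retention: float,
    annual_revenue_per_user: float,
) -> float:
    """Estimate extra annual revenue retained after a handling improvement.

    Simplifying assumption: every affected user is worth the same amount.
    """
    for rate in (baseline_retention, improved_retention):
        if not 0.0 <= rate <= 1.0:
            raise ValueError("retention rates must be between 0 and 1")
    additional_retained_users = users_raising_concern * (
        improved_retention - baseline_retention
    )
    return additional_retained_users * annual_revenue_per_user


if __name__ == "__main__":
    # Made-up inputs: 1,000 users, retention improving from 70% to 75%,
    # and 1,200 in annual revenue per user.
    print(round(retained_revenue_delta(1000, 0.70, 0.75, 1200.0)))
```

With these illustrative inputs the estimate comes to roughly 60,000 per year. Real figures would depend on measured retention rates for each handling type.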

How Can Teams Start Measuring Churn Signals in AI Agent Conversations?

Detecting churn signals in AI agent conversations requires shifting the measurement frame from system performance to conversation outcome. Practically, this involves three steps.

Define task categories explicitly. Map the agent's primary interaction types into discrete task categories. For a customer service agent, this might include billing inquiries, technical troubleshooting, competitive objection handling, onboarding support, and cancellation requests. Each category needs a definition of what a successful outcome looks like in conversation data before it can be measured.

Identify behavioral signals that indicate whether business-level concerns were addressed. For each task category, define what a substantive response looks like versus a deflecting one. For competitive objection handling, failure patterns include generic acknowledgments, no engagement with the specific concern the user raised, and conversation abandonment following the agent's response.

Track outcomes over time and by segment. Measure how each task category resolves across conversation volume. Look for categories where abandonment rates are elevated, where escalation to human agents is disproportionately high, or where the same signals recur without resolution. These are the categories where churn risk is concentrating in your data right now.
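The three steps above can be sketched as a small aggregation over labeled conversations. Everything in the example is an assumption for illustration: the `Conversation` record, the outcome labels ("resolved", "abandoned", "escalated"), and the 20% thresholds. It presumes each conversation has already been assigned a task category and an outcome by some upstream labeling step.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Conversation:
    task_category: str   # e.g. "billing", "competitive_objection"
    outcome: str         # hypothetical: "resolved", "abandoned", "escalated"


def outcome_rates(conversations: list[Conversation]) -> dict[str, dict[str, float]]:
    """Return, per task category, the share of conversations per outcome."""
    counts: dict[str, Counter] = {}
    for conv in conversations:
        counts.setdefault(conv.task_category, Counter())[conv.outcome] += 1
    return {
        category: {
            outcome: n / sum(counter.values()) for outcome, n in counter.items()
        }
        for category, counter in counts.items()
    }


def flag_categories(
    rates: dict[str, dict[str, float]],
    max_abandoned: float = 0.2,   # illustrative threshold, not a benchmark
    max_escalated: float = 0.2,   # illustrative threshold, not a benchmark
) -> list[str]:
    """Return categories whose abandonment or escalation share is elevated."""
    return sorted(
        category
        for category, shares in rates.items()
        if shares.get("abandoned", 0.0) > max_abandoned
        or shares.get("escalated", 0.0) > max_escalated
    )
```

To track trends over time or by segment, the same aggregation could be keyed on a (category, week) or (category, segment) pair instead of the category alone.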

About Nebuly

Nebuly is the user analytics platform for AI agents. Nebuly measures task-level success and failure patterns across agent conversations without relying on explicit user feedback. It connects agent conversation outcomes to business results including user retention, productivity, and cost savings.

Sources: thinkJar customer experience research; CRM Buyer, "Tackling Silent Churn With Agentic AI in Customer Support," February 2026.