ICONIQ Capital just released their 2026 State of AI report, surveying 300 software executives on how they're building and scaling AI products. The findings confirm what we've been seeing in conversations with AI product teams: the market has shifted. The question is no longer "can we build this?" Now it's "can we prove this works?"

The gap between deployment and value is real. And it's measurable.

The Numbers Tell a Story

88% of enterprises use AI. Only 39% see measurable profit. We think that gap is largely a visibility problem.

The ICONIQ data shows where this gap lives:

On evaluation: Only 52% use automated evaluation frameworks, while 77% rely on user feedback and 69% on manual testing. When you ask teams which tasks are succeeding and which are failing, most can't answer with data.

On cost: Model inference becomes the dominant cost driver as products scale, accounting for 23% of total costs. Teams are running multi-model strategies (3.1 providers on average) to balance cost, latency, and reliability. But without task-level visibility, they can't see which tasks are expensive and which are cheap, or which models should handle which tasks.

On ROI: 83% measure AI impact through productivity gains, cost savings, or revenue. Yet the report shows 37% of companies plan to change their AI pricing in the next year. Their reasons: customer demand for consumption-based pricing (46%), margin erosion (34%), and the need to prove ROI at the task level (29%).

You can't price on outcomes if you can't measure which outcomes your AI is actually delivering.

Agentic AI: The Next Scaling Challenge

40% of companies with $500M+ revenue are actively deploying AI agents. Another 35-45% are experimenting with pilots.

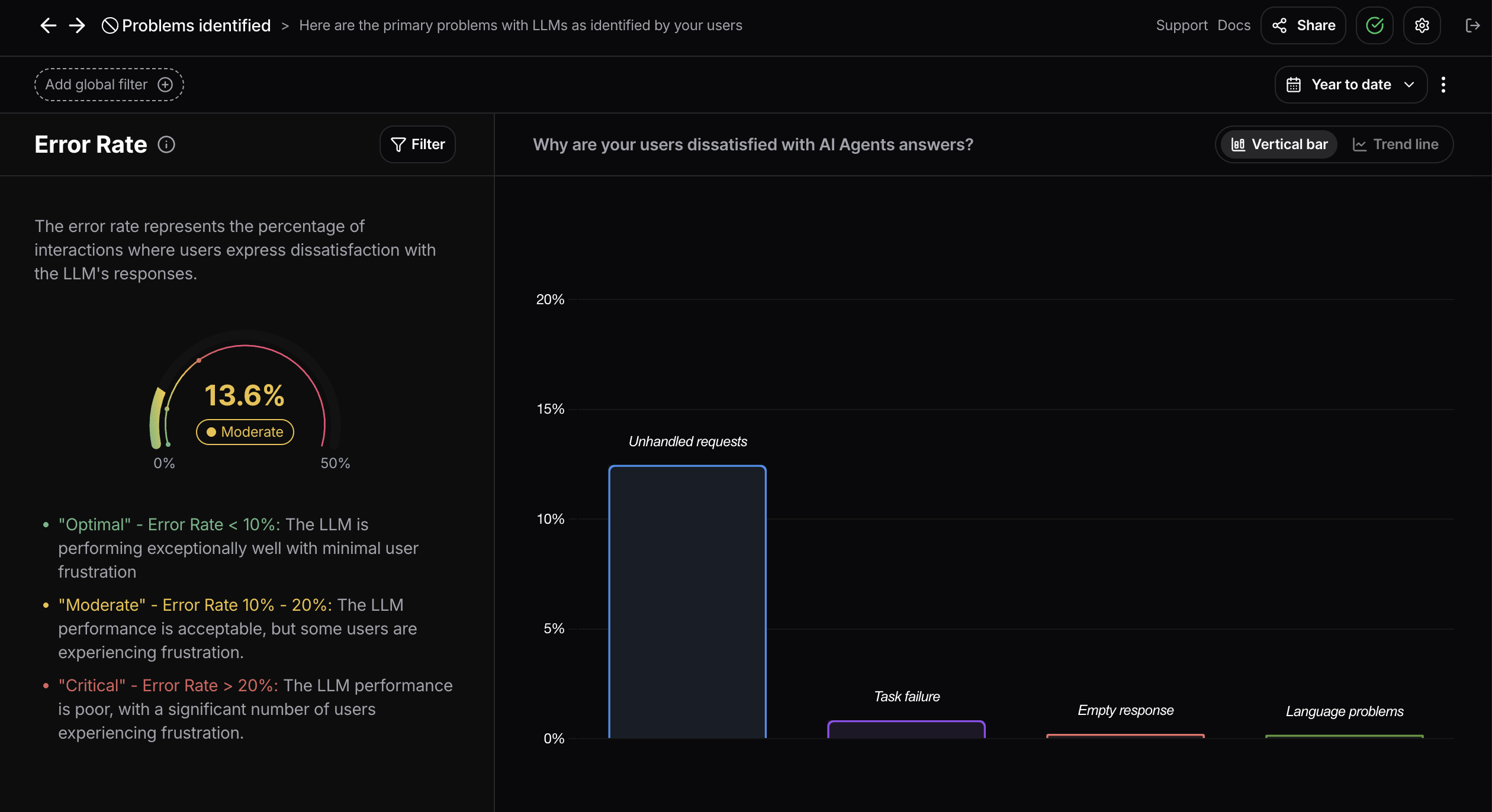

But here's the pattern we see, and ICONIQ's data is consistent with it: most of these deployments have no visibility into which agent tasks are succeeding and which are failing. They have system metrics (latency, uptime, tokens), not task-level success metrics.

The report shows agentic workflows being deployed for vertical solutions and GTM use cases, with teams granting agents read/write permissions (63% for infra/developer tools). But when something fails, most teams we talk to find out from user complaints, not from their analytics.

That's the gap. Agents make autonomous decisions. Users either trust those decisions or they don't. If you can't see which tasks users are trusting and which they're overriding, you're flying blind.

The Evaluation Problem

ICONIQ's data shows only 52% of companies use automated evaluation frameworks. The top evaluation methods are user feedback (77%) and manual testing (69%).

This works in early pilots. It doesn't scale.

When you're running 50+ agent tasks in production, you need automated visibility into which tasks succeed, which fail, and why. User feedback is reactive. Behavioral signals are predictive.
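To make "behavioral signals" concrete, here is a minimal sketch of per-task failure detection. It is not Nebuly's implementation. It assumes a hypothetical log where each interaction carries a task label and one behavioral signal recorded after the agent responded ("accepted", "overridden", "rephrased", "abandoned"). The `Interaction` type, the signal names, and the `min_volume` / `max_failure_rate` thresholds are all illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical signal names: which user behaviors count as "the task failed".
FAILURE_SIGNALS = {"overridden", "rephrased", "abandoned"}


@dataclass
class Interaction:
    task: str    # hypothetical task label, e.g. "update_crm"
    signal: str  # behavioral signal observed after the agent's output


def task_failure_report(interactions, min_volume=20, max_failure_rate=0.25):
    """Return {task: failure_rate} for tasks whose failure rate exceeds the threshold."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for it in interactions:
        totals[it.task] += 1
        if it.signal in FAILURE_SIGNALS:
            failures[it.task] += 1

    flagged = {}
    for task, n in totals.items():
        if n < min_volume:
            continue  # too few samples to judge this task yet
        rate = failures[task] / n
        if rate > max_failure_rate:
            flagged[task] = rate
    return flagged


# Illustrative data: one task users mostly accept, one they often override.
logs = (
    [Interaction("summarize_ticket", "accepted")] * 45
    + [Interaction("summarize_ticket", "rephrased")] * 5
    + [Interaction("update_crm", "accepted")] * 18
    + [Interaction("update_crm", "overridden")] * 12
)
print(task_failure_report(logs))  # {'update_crm': 0.4}
```

The `min_volume` guard keeps rarely used tasks from being flagged on a handful of samples. Which behaviors count as failure is a product decision: an override means something different for a coding agent than for a CRM agent.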

The companies that figure out how to see task-level success patterns before users complain are the ones that will scale from 23% to majority adoption.

Cost and Margins Under Pressure

The report shows gross margins improving: 41% (2024) → 45% (2025) → 52% (2026 projected). But model inference costs are climbing as products scale.

Teams need to understand:

- Which tasks are expensive to run?

- Which models should handle which tasks?

- Where are inference costs bleeding margins?

Without task-level cost visibility, teams optimize infrastructure without optimizing business outcomes. You end up with great latency metrics and eroding margins.
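One way to connect cost to outcomes is to compute spend per *successful* task for each model, instead of spend per call. The sketch below assumes a hypothetical call log with token counts and a success flag (for example, derived from behavioral signals). The per-token prices are made-up placeholders, not any provider's real pricing, and the `Call` type and model names are illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass

# Placeholder prices in USD per 1M tokens. NOT real provider pricing.
PRICE_PER_M_TOKENS = {
    "model_a": {"input": 3.00, "output": 15.00},
    "model_b": {"input": 0.50, "output": 1.50},
}


@dataclass
class Call:
    task: str
    model: str
    input_tokens: int
    output_tokens: int
    succeeded: bool  # hypothetical outcome label for the task


def call_cost(call):
    """Dollar cost of one call under the placeholder price table."""
    p = PRICE_PER_M_TOKENS[call.model]
    return (call.input_tokens * p["input"] + call.output_tokens * p["output"]) / 1_000_000


def cost_per_success(calls):
    """Map (task, model) -> total spend / successful calls, or None if nothing succeeded."""
    spend = defaultdict(float)
    wins = defaultdict(int)
    for c in calls:
        key = (c.task, c.model)
        spend[key] += call_cost(c)
        wins[key] += int(c.succeeded)
    return {k: (spend[k] / wins[k] if wins[k] else None) for k in spend}


calls = [
    Call("draft_email", "model_a", 2_000, 500, True),
    Call("draft_email", "model_b", 2_000, 500, True),
    Call("draft_email", "model_b", 2_000, 500, False),
    Call("classify", "model_b", 100, 10, False),
]
report = cost_per_success(calls)
for (task, model), cost in sorted(report.items()):
    print(task, model, cost)
```

Dividing by successes rather than calls charges failed attempts to the outcomes that did get delivered. A cheap model that fails often can cost more per useful result than its per-token price suggests, which is the comparison that matters for routing and pricing decisions.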

What This Means for AI Product Teams

The ICONIQ report validates what we're hearing from teams building AI products:

Before: "Did we ship the AI?"

Now: "Which tasks work? What's the ROI?"

The market has moved from deployment to proof. Companies that can answer these questions will scale. Companies that can't will stay stuck in pilots.

Here's what we're seeing separate scaling teams from stalled teams:

- Task-level visibility. Not just "total usage" but "which specific tasks are succeeding versus failing."

- Automated evaluation. Behavioral signals that show failure patterns before users complain.

- ROI connection. Linking task success to productivity gains, cost savings, and adoption metrics.

The companies that have this can justify consumption-based pricing, prove outcomes to customers, and iterate on what actually matters. The ones that don't are still optimizing latency while adoption plateaus.

The Shift Is Here

ICONIQ's report shows the AI market entering an "execution era." Success in 2026 means proving AI works at scale, profitably, with measurable outcomes.

Most teams have system metrics. Far fewer have task-level success metrics. That's the gap.

The companies that close it first will have a massive advantage. The ones that don't will stay stuck in the 49-point gap between deployment (88%) and profit (39%).

Want to see how Nebuly helps AI product teams measure task-level success and ROI? Get in touch or read more about our approach to user analytics for AI agents.

Full ICONIQ Capital report available here: 2026 State of AI Bi-Annual Snapshot