As the adoption of Large Language Models (LLMs) accelerates, especially in production environments, comprehensive monitoring becomes critical. Like any machine learning model, an LLM can behave unpredictably once deployed, leading to performance degradation, user dissatisfaction, cost surprises and potential security risks. Monitoring these models in real time is essential to their long-term success. In this post, we will explore what LLM monitoring is, which metrics are typically tracked, and why user experience monitoring is a crucial aspect of LLM observability.

What is LLM Monitoring?

LLM monitoring refers to the continuous tracking of key performance metrics of large language models to ensure they function as expected in production environments. It covers not only traditional machine learning performance indicators but also user interactions, satisfaction, and system-level resource usage.

In the MLOps (Machine Learning Operations) lifecycle, LLM monitoring serves as the cornerstone for identifying performance issues, detecting data drift, and ensuring the model continues to deliver accurate and relevant responses over time. Without continuous monitoring, LLMs can degrade in accuracy or relevance, leading to unsatisfactory user experiences and decreased business value.

Key Metrics for LLM Monitoring

Monitoring LLMs involves tracking several metrics that provide insights into how well the model is performing in a live environment. These metrics can be divided into two main categories: model performance and user experience.

1. Model Performance Metrics:

- Latency and Throughput: The time it takes for the model to respond to a query (latency) and the number of queries it can process per unit of time (throughput) are critical for real-time applications. Slow responses can frustrate users and hinder the overall experience.

- Accuracy and Relevance: While traditional metrics like accuracy and precision are useful, tracking the relevance of LLM-generated content is crucial. As user behavior and input data change, LLMs might produce less useful or off-topic responses, requiring timely adjustment.

- Resource Utilization & Cost: Monitoring CPU, GPU, and memory usage helps ensure the LLM operates efficiently. High resource consumption can drive up costs and cause system slowdowns or outages, which need to be addressed to maintain smooth operations. When the model is consumed through an API, token usage is typically the main cost driver.
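To make the model performance metrics above more concrete, here is a minimal Python sketch that summarizes a batch of request logs into median and 95th-percentile latency, throughput, and token cost. The `RequestLog` fields, the nearest-rank percentile method, and the per-1K-token prices passed to `summarize` are illustrative assumptions, not any particular provider's schema or pricing.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestLog:
    # Hypothetical log record: wall-clock start/end in seconds plus token counts.
    start_s: float
    end_s: float
    prompt_tokens: int
    completion_tokens: int


def percentile(values, p):
    """Nearest-rank percentile, with p in the range (0, 100]."""
    if not values:
        raise ValueError("percentile of empty list")
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(logs, price_in_per_1k, price_out_per_1k):
    """Latency percentiles, throughput and token cost for a batch of requests.

    The per-1K-token prices are placeholders; use your provider's actual rates.
    """
    latencies = [r.end_s - r.start_s for r in logs]
    # Observation window: from the first request start to the last request end.
    window_s = max(r.end_s for r in logs) - min(r.start_s for r in logs)
    cost = sum(
        r.prompt_tokens / 1000 * price_in_per_1k
        + r.completion_tokens / 1000 * price_out_per_1k
        for r in logs
    )
    return {
        "p50_latency_s": percentile(latencies, 50),
        "p95_latency_s": percentile(latencies, 95),
        "throughput_rps": len(logs) / window_s if window_s > 0 else float("inf"),
        "total_cost": cost,
    }
```

Tail percentiles such as p95 are usually more informative than averages for latency, since a small share of slow requests is often what users actually notice.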

2. User Experience Metrics:

- User Sentiment Analysis: Monitoring the tone and sentiment of user interactions can reveal valuable insights into how users perceive the LLM’s responses. Repeated negative sentiment might indicate that the model’s output is unhelpful or confusing.

- Engagement and Retention: Tracking how long users interact with the LLM and how often they return can give clues about its usefulness. High levels of user frustration or disengagement could point to a mismatch between the LLM’s outputs and user needs.

- Conversation Flow and Topic Analysis: Understanding the flow of conversation between the LLM and users helps ensure the model is addressing key user concerns and queries effectively. Poor topic transitions or unrelated responses can reduce overall satisfaction.
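Engagement and retention can be approximated from raw interaction timestamps. The sketch below assumes a hypothetical event stream of `(user_id, timestamp)` pairs and an arbitrary 30-minute inactivity gap for splitting sessions; it computes the average session length and, as a rough retention proxy, the share of users who came back for more than one session.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def sessions_per_user(events, gap=timedelta(minutes=30)):
    """Split each user's (user_id, timestamp) events into sessions.

    A new session starts whenever two consecutive events are more than
    `gap` apart. The 30-minute default is an assumption, not a standard.
    """
    by_user = defaultdict(list)
    for user_id, ts in events:
        by_user[user_id].append(ts)
    sessions = {}
    for user_id, stamps in by_user.items():
        stamps.sort()
        user_sessions = [[stamps[0]]]
        for prev, cur in zip(stamps, stamps[1:]):
            if cur - prev > gap:
                user_sessions.append([cur])
            else:
                user_sessions[-1].append(cur)
        sessions[user_id] = user_sessions
    return sessions


def engagement_report(events, gap=timedelta(minutes=30)):
    """Average session length and share of users with more than one session."""
    sessions = sessions_per_user(events, gap)
    durations = [s[-1] - s[0] for user_sessions in sessions.values() for s in user_sessions]
    avg_session = sum(durations, timedelta()) / len(durations)
    returning = sum(1 for user_sessions in sessions.values() if len(user_sessions) > 1)
    return {"avg_session": avg_session, "return_rate": returning / len(sessions)}


if __name__ == "__main__":
    # Illustrative events: user "a" returns two hours later, user "b" does not.
    t0 = datetime(2024, 1, 1, 10, 0)
    demo = [("a", t0), ("a", t0 + timedelta(minutes=10)), ("a", t0 + timedelta(hours=2)), ("b", t0)]
    print(engagement_report(demo))
```

Tracking these two numbers per day or per week makes it easier to spot a drop in engagement after a prompt or model change.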

Why LLM Monitoring is Crucial in Production Environments

Once an LLM is deployed in production, it interacts with real users in unpredictable ways. While the training environment is controlled, the live environment can introduce factors such as shifts in user behavior, changes in input data, or unexpected edge cases. In addition, when the base LLM is accessed through a third-party API, its performance can vary over time as the provider updates the model.

In production, the stakes are much higher, as poor model performance can directly affect the user experience, lead to decreased engagement, and even pose risks to brand reputation. Continuous monitoring helps to:

- Detect Model Drift: LLMs can suffer from data drift (changes in input data) and concept drift (changes in the relationship between inputs and outputs). Monitoring helps catch these issues early, enabling teams to retrain or update models as needed.

- Optimize for Real-World Performance: The LLM's theoretical performance can differ significantly from its real-world performance. Monitoring shows whether metrics like latency, throughput, and user satisfaction stay within acceptable ranges, even as the model encounters new scenarios.
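One common way to catch data drift is to compare the distribution of a simple input feature, such as prompt length, between a reference window and the current window using the Population Stability Index (PSI). This is a minimal sketch under that assumption: the choice of feature, the bin edges, and the `eps` smoothing value are illustrative. PSI only addresses data drift; concept drift generally needs labels or user feedback to detect.

```python
import math
from bisect import bisect_right


def bin_fractions(values, edges):
    """Share of values falling into each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(reference, current, edges, eps=1e-6):
    """Population Stability Index between a reference and a current sample.

    `eps` keeps empty bins from producing log(0) or division by zero.
    """
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(current, edges)
    total = 0.0
    for r, c in zip(ref, cur):
        r, c = max(r, eps), max(c, eps)
        total += (c - r) * math.log(c / r)
    return total
```

A frequently cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds are best calibrated on your own traffic.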

The Importance of User Experience Monitoring

In LLM-powered products, user experience is often the defining factor of success. While traditional model metrics like accuracy and latency are important, they don’t fully capture the human aspect of interacting with LLMs. User experience monitoring shifts the focus to how people engage with the model, providing insight into satisfaction, emotional response, and overall product value.

Key aspects of user experience monitoring include:

- Detecting Frustration: If users repeatedly ask the same questions or express dissatisfaction, it’s a signal that the LLM’s responses are not meeting expectations.

- Understanding Engagement Levels: Monitoring how long users interact with the LLM and how often they return can inform product teams about the model’s effectiveness and relevance.

- Identifying Sentiment Patterns: Real-time sentiment analysis can alert teams to negative experiences before they escalate into broader product issues.
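Repeated questions are one of the easier frustration signals to detect automatically. As a rough sketch, assuming you have a conversation's user messages in order, the code below flags turns whose word overlap (Jaccard similarity) with the previous user turn meets a threshold. The 0.6 threshold and the simple tokenizer are assumptions; an embedding-based similarity would also catch paraphrases that share few words.

```python
import re


def tokens(text):
    """Lowercased word set; a deliberately simple tokenizer."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity of two sets (1.0 when both are empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def repeated_question_turns(user_messages, threshold=0.6):
    """Indices of user turns that closely repeat the immediately preceding user turn.

    `threshold` is an illustrative cut-off and should be tuned on real conversations.
    """
    flagged = []
    for i in range(1, len(user_messages)):
        if jaccard(tokens(user_messages[i - 1]), tokens(user_messages[i])) >= threshold:
            flagged.append(i)
    return flagged
```

Counting flagged turns per conversation, or per user over a week, turns this into a frustration rate that can be tracked alongside sentiment.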

LLM Monitoring Tools

Nebuly is a unique platform designed to support LLM monitoring with a strong emphasis on user experience. While traditional LLM observability tools focus on model-level metrics like accuracy, recall, or resource utilization, Nebuly shifts the focus towards understanding user behavior and satisfaction.

Here’s why Nebuly stands out as the go-to LLM monitoring tool:

User-Centric Monitoring:

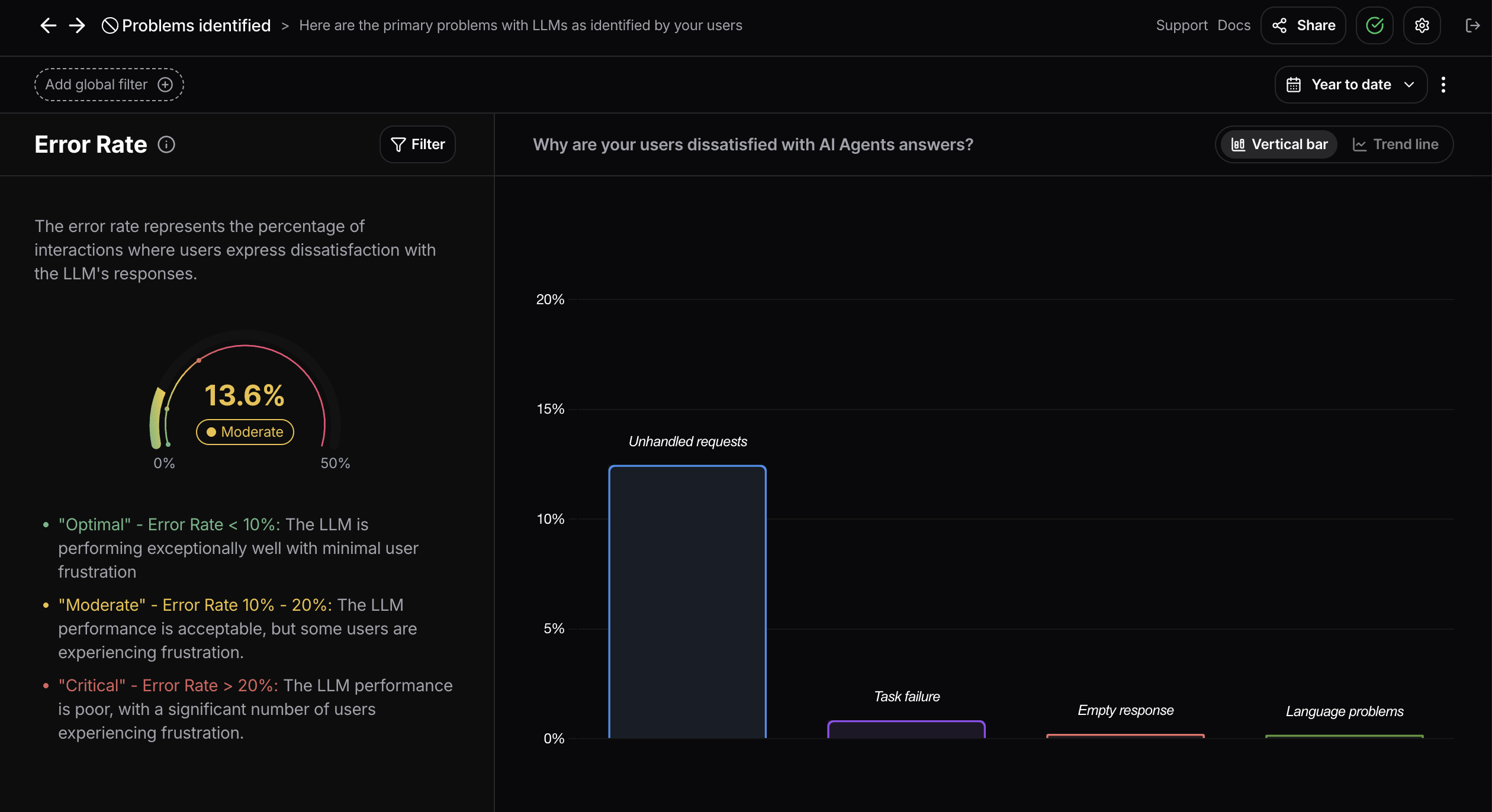

Nebuly allows developers to monitor critical aspects of user behavior, such as satisfaction, sentiment, and engagement. It provides insights into how users interact with the LLM, making it easier to identify unhelpful responses, detect interactions with malicious intent, and refine the overall user experience.

Actionable Insights:

Nebuly offers more than just metrics. It provides actionable insights into improving LLM performance by highlighting problem areas in user interactions. Whether users are facing repeated frustration or disengagement, Nebuly helps pinpoint these issues for rapid resolution.

Real-Time Feedback Loops:

With Nebuly, developers can continuously improve their LLM-powered products by running A/B tests on different prompts, adjusting model configurations, and evaluating real-time feedback. This helps the product evolve in response to changing user behavior and requirements.
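Whatever tool runs the experiment, deciding whether one prompt variant actually outperforms another comes down to a statistical comparison. For binary feedback such as thumbs-up versus thumbs-down, a standard option is the two-proportion z-test. The sketch below is a generic illustration of that test, not Nebuly's API or methodology, and the function name is hypothetical.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two success rates.

    Here a "success" could be a thumbs-up on a response generated with
    prompt variant A or B. Returns (z, p_value); a positive z means B's
    rate is higher.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # All outcomes identical in both groups: no evidence of a difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, with hypothetical counts of 400 positive ratings out of 1,000 for variant A and 460 out of 1,000 for variant B, this gives z of about 2.71 and a two-sided p-value of roughly 0.007. The test assumes independent ratings and reasonably large samples.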

Comprehensive Privacy and Integration:

Nebuly’s platform can be deployed locally, ensuring that sensitive user data remains secure and is not transmitted to external servers. Alternatively, it is available as an API/SaaS deployment. It integrates seamlessly with your existing LLM infrastructure, making it easy to incorporate into your production workflows without disrupting current operations.

Conclusion

Monitoring LLMs in production environments is essential for sustaining model performance over the long term, delivering a seamless user experience, and achieving business success. Traditional model-level metrics are important, but the true key to success lies in understanding and improving user satisfaction. Nebuly provides the perfect toolset for LLM developers, offering comprehensive user experience monitoring and actionable insights to continuously improve their AI-powered products.

If you're ready to take your LLM monitoring to the next level, request a demo with Nebuly today and see how it can transform your user experience strategy.