The 95-5 divide

Generative AI hit peak hype in 2024. By 2025, reality had started to set in.

Organizations spent hundreds of millions of dollars rolling out internal copilots, customer-facing chatbots, and AI assistants. McKinsey data shows 88% of companies now use AI in at least one business function. This sounds like success. It isn't.

The problem is what happens after the launch. Most teams have no visibility into whether anyone is actually using the tool. No way to know if it's working. No way to know why it's sitting unused. So they default to measuring what they can see: system uptime, response latency, token throughput, cost per inference.

These metrics tell you if the engine is running. They don't tell you if anyone is driving.

MIT researchers documented the scale of the divide. After all that investment, only about 5% of organizations are extracting real business value from AI. The other 95% are stuck in pilot mode or seeing only surface-level wins. A bit faster here. A task slightly automated there. But nothing that moves the needle on revenue, margin, or competitive advantage.

Gartner warns that at least 30% of GenAI projects will be abandoned after proof of concept by the end of 2025. Not because the models are bad. Because teams can't prove the investment is working.

But here's what's important: the companies that did scale AI didn't have access to better models or bigger budgets. They had something else. A different way of measuring success.

Learned 1: The metrics that actually mattered stopped being just about systems

For decades, IT teams measured software success the same way. Availability. Uptime. Performance. If the server was up and the request was fast, the job was done.

This framework broke completely with AI.

An AI system could achieve 99.9% uptime and respond in 200 milliseconds while delivering answers that nobody found useful. The engine ran. People didn't use it.

Teams that succeeded in 2025 made a fundamental shift. They stopped asking "Is the system working?" and started asking "Are people finding this useful?"

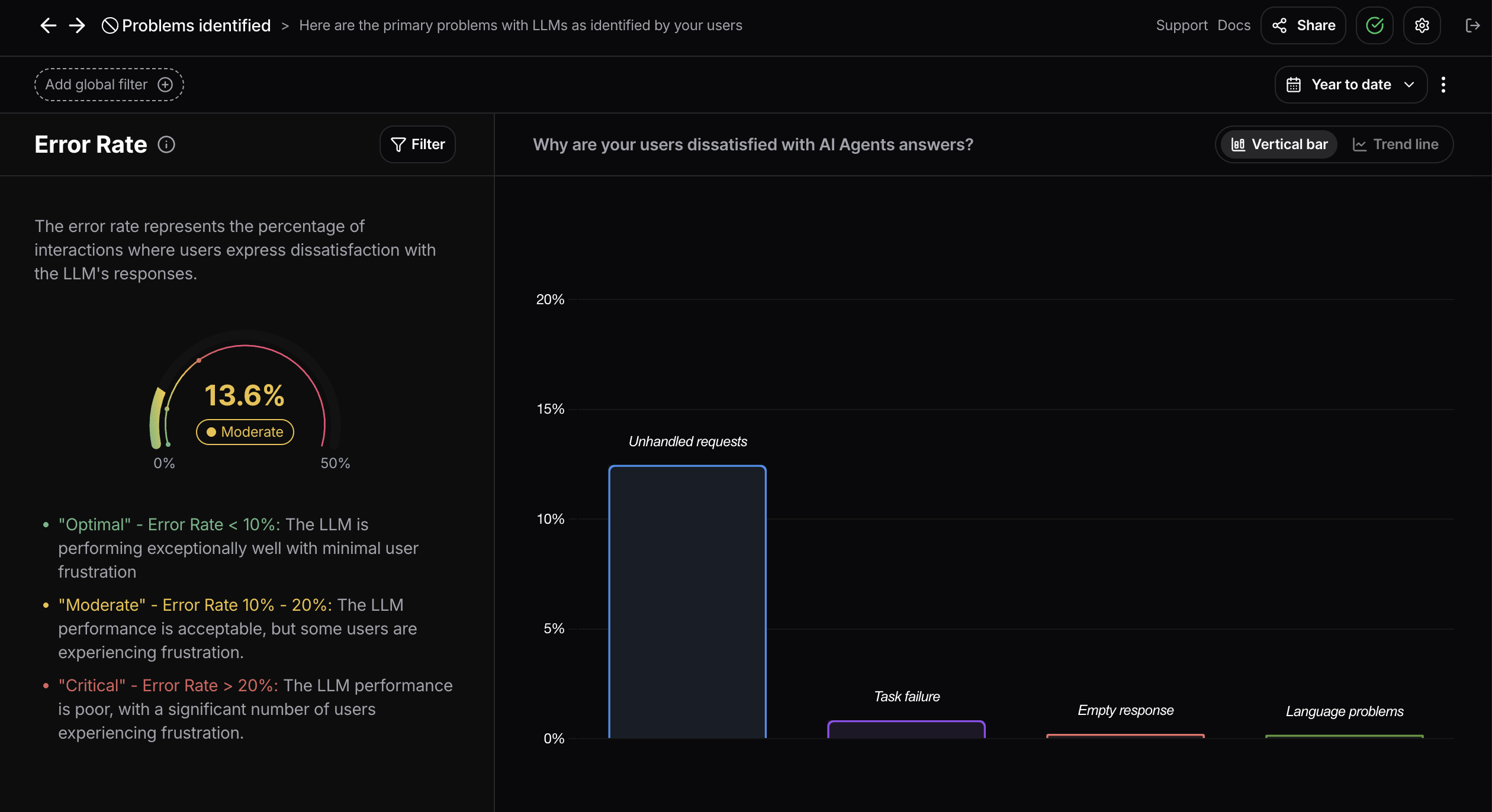

This changed what they measured. Instead of tokens per second, they tracked whether users completed what they set out to do. Instead of latency, they looked at whether the response actually answered the question. Instead of deployment metrics, they watched for where conversations fell apart.

McKinsey data points the same way. Organizations with high-performing AI initiatives report tracking signals that traditional IT metrics miss: user activation rates, session depth, whether conversations were resolved on the first attempt or needed escalation, and whether the same user comes back or abandons the tool after one try.

These are human metrics. Not system metrics.
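To make these human metrics concrete, here is a minimal Python sketch that computes them from session logs. It assumes a hypothetical `Session` record (user, day, number of turns, whether the user's goal was resolved, whether the conversation was escalated); real analytics pipelines will have their own schemas and definitions. "First-attempt resolution" is simplified here to "resolved without escalation", which is an assumption rather than a standard definition.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Session:
    """One conversation with the assistant (hypothetical schema)."""
    user_id: str
    day: date
    turns: int        # number of user messages in the session
    resolved: bool    # did the user get what they came for?
    escalated: bool   # was the conversation handed off to a human?


def human_metrics(sessions: list[Session], provisioned_users: int) -> dict[str, float]:
    """Compute user-centric adoption metrics from session logs."""
    if not sessions or provisioned_users <= 0:
        return {"activation_rate": 0.0, "session_depth": 0.0,
                "first_attempt_resolution": 0.0, "return_rate": 0.0}

    # Collect the distinct days on which each user was active.
    days_by_user: dict[str, set[date]] = {}
    for s in sessions:
        days_by_user.setdefault(s.user_id, set()).add(s.day)
    active_users = len(days_by_user)

    # Activation: share of users given access who started at least one session.
    activation_rate = active_users / provisioned_users
    # Session depth: average number of user turns per session.
    session_depth = sum(s.turns for s in sessions) / len(sessions)
    # First-attempt resolution (simplified): resolved without escalation.
    first_attempt = sum(1 for s in sessions if s.resolved and not s.escalated)
    # Return rate: share of active users who came back on a different day.
    returning = sum(1 for days in days_by_user.values() if len(days) > 1)

    return {
        "activation_rate": activation_rate,
        "session_depth": session_depth,
        "first_attempt_resolution": first_attempt / len(sessions),
        "return_rate": returning / active_users,
    }


# Made-up example: two active users out of ten provisioned.
sessions = [
    Session("alice", date(2025, 3, 3), turns=3, resolved=True, escalated=False),
    Session("alice", date(2025, 3, 6), turns=2, resolved=True, escalated=False),
    Session("bob", date(2025, 3, 3), turns=5, resolved=False, escalated=True),
]
print(human_metrics(sessions, provisioned_users=10))
```

None of these numbers comes from infrastructure logs; they all require knowing who used the tool and whether it helped them.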

One financial services team discovered this by accident. They launched an internal AI assistant and watched the system dashboard show perfect performance. Full uptime. Sub-300ms response time. Then they looked at actual usage and found that 70% of support requests for the tool weren't about crashes. They were about users not understanding how to phrase their questions to get useful answers.

That insight led to a simple fix. Prompt suggestions. Show users examples of what the AI does well. Suddenly adoption doubled.

They found this through conversation analysis, not infrastructure logs.

Learned 2: Human-plus-AI workflows beat pure automation

The companies seeing real ROI in 2025 shared something specific. They never designed their AI as a replacement. They designed it as a teammate.

This sounds like corporate jargon, but the distinction has practical consequences. It changes everything about how you build, deploy, and iterate on AI.

When teams tried to automate away human work entirely, they almost always failed. The assumption was straightforward enough. Fewer people needed on a task. Lower costs. Higher velocity.

But the failures piled up. Hallucinations went undetected because nobody was checking the AI output. Edge cases the model couldn't handle got buried in error logs instead of triggering a redesign. User distrust built up because the AI made mistakes and nobody was there to catch them.

The successful pattern looked different. A healthcare system deployed an AI assistant to help with clinical documentation. Rather than removing the physician from the loop, they had the AI draft each note and the physician review and refine it. The same pattern worked for customer service teams. Banking teams. Manufacturing quality control teams.

This design choice created two benefits simultaneously. First, humans caught mistakes and provided immediate feedback, which meant the AI got better fast. Second, users trusted the system because they understood what it was doing and had control. This trust translated directly to adoption.

McKinsey's 2025 survey found that organizations redesigning workflows around human and AI collaboration saw dramatically higher adoption than those attempting full automation. The workflows were still more efficient. The work still got done faster. But because humans stayed in the loop, the organization actually got the adoption that made ROI possible.

Learned 3: Cross-functional teams replaced siloed experiments

In 2024, AI projects often lived in engineering silos. Product had a vision. Security had compliance concerns. Business had revenue targets. These teams rarely talked until something broke.

By 2025, the companies that scaled AI had reorganized. Product, data, security, and business teams sat together from day one. Not as an afterthought. From initial design.

The reason became obvious fast. An internal copilot that looked great in a product demo created compliance violations when real employees used it. A chatbot that improved support efficiency broke apart under load once it hit real traffic. An automated workflow that worked in the lab created legal exposure nobody anticipated until launch.

These weren't technical problems. They were organizational ones. The only way to catch them early was to have the right people in the room.

Writer's 2025 research on enterprise AI adoption shows the cost of silence. At companies without formal cross-functional collaboration, 42% of executives report AI adoption is tearing their organization apart. At companies with intentional collaboration models, that number dropped to single digits. Not because they avoided problems, but because problems got solved before they became crises.

The pattern that worked: structured pods with representatives from product, data, compliance, and business. Weekly iteration cycles instead of quarterly plans. Direct user feedback built into sprint reviews. Shared metrics and shared responsibility for outcomes.

Learned 4: Early feedback loops predicted long-term success

The companies that survived 2025 had learned something that seemed obvious only after the fact. The first month determines everything.

Research from financial services deployments showed a clear pattern. If adoption rates hit 40-60% by day 30, those projects almost always went on to scale. If activation stalled in that window, recovery became nearly impossible. Users who didn't engage early almost never came back.
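The day-30 check can be sketched as simple arithmetic. In this hypothetical Python sketch, the function names, the definition of adoption (users whose first session fell within 30 days of launch, divided by users given access), and the 0.40 default threshold, taken from the low end of the 40-60% band above, are all illustrative assumptions rather than an established standard.

```python
from datetime import date, timedelta


def day30_adoption(launch: date, first_use: dict[str, date], provisioned_users: int) -> float:
    """Share of provisioned users whose first session fell within 30 days of launch."""
    if provisioned_users <= 0:
        return 0.0
    cutoff = launch + timedelta(days=30)
    early = sum(1 for d in first_use.values() if launch <= d <= cutoff)
    return early / provisioned_users


def adoption_signal(rate: float, threshold: float = 0.40) -> str:
    """Classify a day-30 adoption rate against an assumed threshold."""
    # 0.40 is the low end of the 40-60% band; a heuristic, not a guarantee.
    if rate >= threshold:
        return "on track"
    return "at risk: investigate where users get stuck"


# Made-up example: five users provisioned, first-use dates keyed by user.
launch = date(2025, 1, 6)
first_use = {
    "u1": date(2025, 1, 7),
    "u2": date(2025, 1, 20),
    "u3": date(2025, 2, 20),  # after the 30-day window, so not counted
}
rate = day30_adoption(launch, first_use, provisioned_users=5)
print(rate, adoption_signal(rate))
```

The point of computing this early is timing: a rate below the threshold at day 30 is a prompt to look for friction while users can still be won back.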

The difference between success and failure in that first month came down to one thing: whether teams had visibility into what users were actually doing.

Successful deployments didn't wait for quarterly surveys. They tracked every conversation. They noticed where users got stuck. They caught confusion signals in real time. They fixed problems within days instead of waiting for feedback cycles.

One manufacturing company deploying an AI assistant for equipment maintenance noticed, on day eight, that 40% of queries were about machine types not covered in the initial training data. They identified which machines, added the missing knowledge, and updated the system by day twelve. By the week-two check, the friction had disappeared and adoption was accelerating.

The companies without user analytics didn't see this signal until week six, when it was already too late. By then, users had given up and gone back to calling the hotline.
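The manufacturing team's day-eight signal amounts to a coverage-gap count. This hypothetical Python sketch assumes each query has already been tagged with the machine type it concerns (the story does not say how; a classifier or a form field are possibilities) and that you know which machine types the assistant's knowledge covers. The machine names are invented.

```python
from collections import Counter


def coverage_gaps(query_topics: list[str], covered: set[str]) -> tuple[float, list[tuple[str, int]]]:
    """Return the share of queries about uncovered topics, plus those topics ranked by volume."""
    if not query_topics:
        return 0.0, []
    # Count only the queries whose topic the assistant's knowledge does not cover.
    gaps = Counter(t for t in query_topics if t not in covered)
    share = sum(gaps.values()) / len(query_topics)
    return share, gaps.most_common()


# Made-up example: queries already tagged with a (hypothetical) machine type.
queries = ["press-A", "lathe-B", "press-A", "mill-C", "lathe-B",
           "press-A", "press-A", "lathe-B", "press-A", "press-A"]
share, ranked = coverage_gaps(queries, covered={"press-A"})
print(f"{share:.0%} of queries hit uncovered machines: {ranked}")
```

Ranking the uncovered types by query volume tells the team which knowledge to add first.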

This feedback loop became the competitive moat. Teams that could iterate weekly instead of quarterly started winning. They acted on actual behavior, not assumptions. They built better products faster because they were listening.

Gartner data from 2025 supports this. Organizations that established rapid feedback loops during the first 30 days of deployment reported 2-3x faster time to sustained adoption. They also reported better ROI, higher user satisfaction, and lower implementation risk.

What changes as we move into 2026

2025 was the year enterprises stopped experimenting with AI and started executing. It was also the year they stopped measuring execution the wrong way.

The companies that won didn't have fundamentally better AI. They had better ways of understanding whether people actually used it. They moved faster because they iterated on real signals, not system metrics. They scaled because they built feedback loops instead of hoping for the best.

For teams entering 2026, the implication is clear. The gap between winners and everyone else isn't technical anymore. It's organizational. It's about whether you have visibility into how real people interact with real AI in real workflows. It's about whether you can move fast enough to act on what you learn.

The tools exist now. The frameworks are documented. The patterns are proven. The companies that didn't move toward user-centric measurement in 2025 are starting to understand why their pilots aren't scaling. They see the problem clearly now. They just need to do something about it.

You cannot manage what you cannot measure. If you are ready to see what your users are actually telling you, we are here to help. Book a demo to see your data clearly.