Large language models have become powerful assistants in search, support and knowledge work. They can generate fluent responses that are often factually correct. Yet even a correct answer can be dangerous or useless when the assistant does not understand the user’s context.

When correct answers fail the user

Sometimes people ask for information in one context and the assistant responds as if they were in another. Here are a few everyday examples:

- A musician asks for tips on bass because they want to practice the instrument. Someone at a lake asks about bass because they want to catch fish. If the assistant lists fishing lures when the person wants chord progressions, or music theory when the person wants gear for angling, it misses the context.

- A traveler asks for the best apple to eat on a picnic. A software developer asks for the best Apple to buy because they need a new laptop. A reply listing MacBooks does not help the person planning a meal.

- A gardener asks how to get rid of bugs on tomato plants. A programmer in a work channel asks how to get rid of bugs in a script. Without context, the assistant might suggest pesticides or code fixes in the wrong situation.

In each case the answer could be factually correct, yet it fails because the product has not captured the user’s intent or emotional state. The problem is not hallucination. It is a gap in understanding the conversation.
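The examples above come down to choosing the right meaning of a word from the surrounding conversation. The sketch below is a minimal illustration, assuming a hand-written table of cue words per meaning; `SENSE_CUES`, `infer_sense` and the cue lists are hypothetical and not taken from any particular product. It scores each meaning by how often its cue words appear in the conversation so far and returns `None` when there is no evidence or a tie, which is a reasonable moment to ask a clarifying question instead of guessing.

```python
from __future__ import annotations

import re
from collections import Counter

# Hypothetical cue words that hint at each meaning of an ambiguous term.
SENSE_CUES: dict[str, dict[str, set[str]]] = {
    "bass": {
        "music": {"guitar", "chord", "chords", "practice", "scale", "band", "amp", "instrument"},
        "fishing": {"lake", "lure", "lures", "catch", "fish", "rod", "boat", "bait"},
    },
    "bugs": {
        "gardening": {"tomato", "tomatoes", "plants", "leaves", "garden", "soil"},
        "software": {"script", "code", "function", "stack", "trace", "deploy", "error"},
    },
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def infer_sense(term: str, conversation: list[str]) -> str | None:
    """Pick the meaning of `term` best supported by the conversation so far.

    Returns None when the term is unknown, no cue words appear, or two
    meanings tie; callers can treat None as "ask a clarifying question".
    """
    senses = SENSE_CUES.get(term.lower())
    if not senses:
        return None
    counts = Counter(word for turn in conversation for word in tokenize(turn))
    scores = {sense: sum(counts[cue] for cue in cues) for sense, cues in senses.items()}
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    best_sense, best_score = ranked[0]
    if best_score == 0 or (len(ranked) > 1 and ranked[1][1] == best_score):
        return None
    return best_sense


print(infer_sense("bass", ["I'm at the lake this weekend", "Any tips for bass?"]))  # prints "fishing"
```

A keyword table like this is brittle; it is shown only to make the idea concrete, and a real assistant would more likely rely on the model itself or a trained classifier, with the same "ask when unsure" fallback.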

Why this happens

Generative AI systems produce output based on patterns in the data they have seen. They do not automatically know why a person asked a question or what they plan to do with the information.

LLM outputs can shift with small changes in wording or context, and sampling can make them vary even for an identical prompt. This unpredictability can erode trust.

Users approach AI systems with different goals and expectations. When a response does not match their intent, the result can be confusion or harm.

How user analytics help

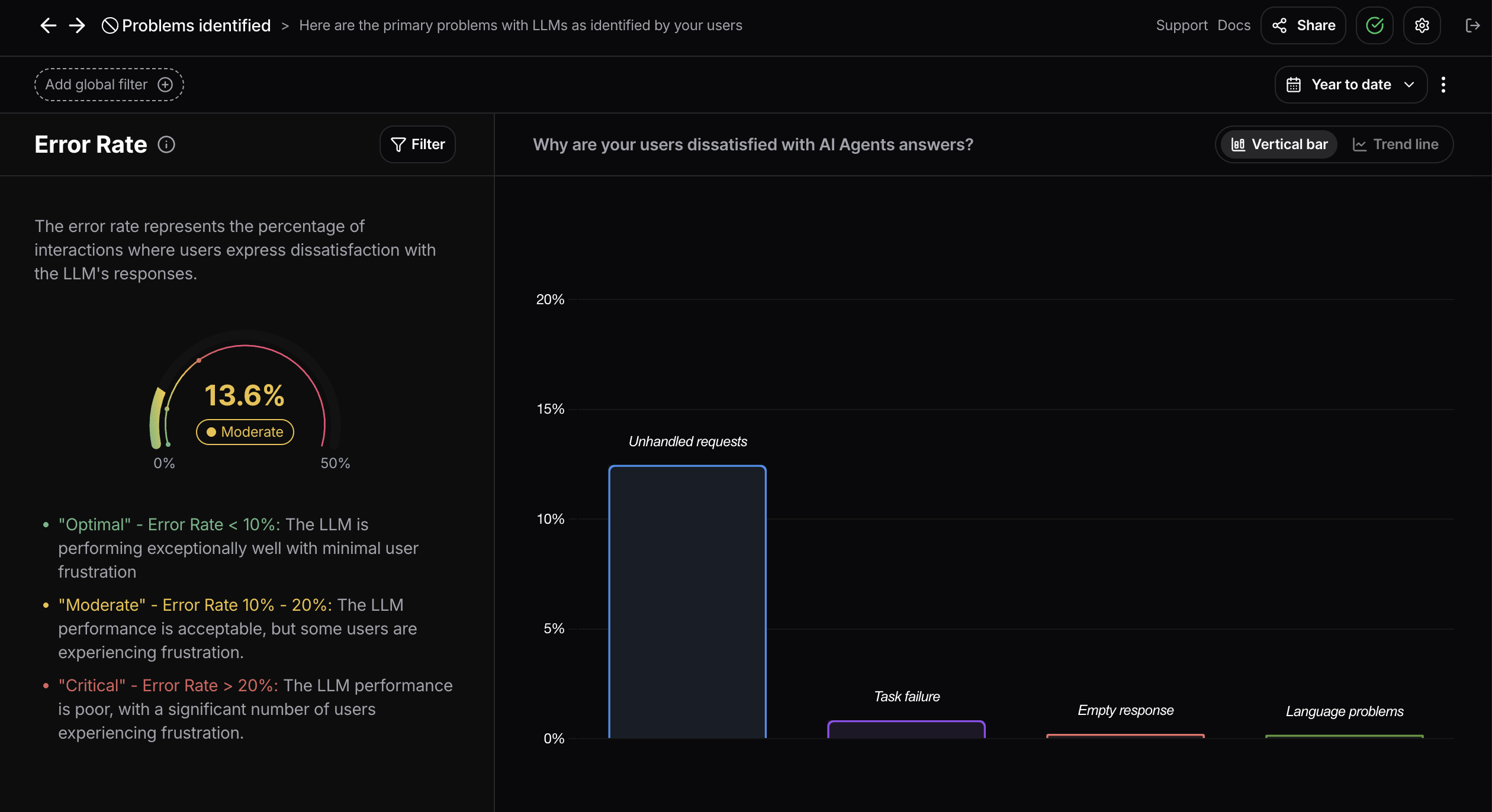

User analytics reveal the human side of every conversation. They go beyond system metrics like latency and error rates to capture intent, sentiment and friction.

For instance, an analytics tool can map the conversation flow to see if a user is seeking instructions or expressing frustration. It can detect emotional cues that suggest distress or urgency. It can track the topics that users discuss and determine whether the model’s responses are relevant.

These signals help teams design safeguards and tailor responses to different contexts.
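One way to start extracting these signals is to tag each user turn. The following is a minimal sketch, assuming simple phrase lexicons; `INSTRUCTION_CUES`, `FRUSTRATION_CUES`, `URGENCY_CUES` and the function names are hypothetical, and a production analytics tool would more likely use trained intent and sentiment classifiers. Joining the per-turn labels gives a rough map of the conversation flow, for example a user who starts by seeking instructions and ends up frustrated.

```python
from __future__ import annotations

import re

# Hypothetical phrase lexicons; real systems would usually use trained classifiers.
INSTRUCTION_CUES = ("how do i", "how to", "steps to", "can you show", "what should i")
FRUSTRATION_CUES = ("not what i asked", "that's not what", "wrong", "useless", "still doesn't")
URGENCY_CUES = ("urgent", "asap", "right now", "emergency", "help me")


def has_cue(text: str, cues: tuple[str, ...]) -> bool:
    """True if any cue phrase appears in `text` as whole words."""
    return any(re.search(rf"\b{re.escape(cue)}\b", text) for cue in cues)


def tag_turn(text: str) -> list[str]:
    """Attach coarse intent and emotion labels to a single user message."""
    lowered = text.lower()
    labels = []
    if has_cue(lowered, INSTRUCTION_CUES):
        labels.append("seeking_instructions")
    if has_cue(lowered, FRUSTRATION_CUES):
        labels.append("frustration")
    # Repeated exclamation marks are treated as a weak urgency signal.
    if has_cue(lowered, URGENCY_CUES) or text.count("!") >= 2:
        labels.append("urgency")
    return labels


def conversation_flow(user_turns: list[str]) -> list[str]:
    """Summarize a conversation as one label string per user turn."""
    return ["+".join(tag_turn(turn)) or "other" for turn in user_turns]


print(conversation_flow([
    "How do I reset my password?",
    "That's not what I asked, it still doesn't work!!",
    "Thanks",
]))
# prints ['seeking_instructions', 'frustration+urgency', 'other']
```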

Designing context aware AI products

To protect users and provide value, AI products must consider context. Teams can achieve this by focusing on a few practices.

1. Collecting implicit feedback, such as how often users rephrase a request or abandon the conversation.

2. Monitoring sentiment to identify when a user is upset, excited or distressed.

3. Mapping conversation flows to understand the task the user is trying to complete.

4. Providing clear instructions for sensitive queries, and routing users to human support when needed.

5. Incorporating human review for high-risk topics.

These practices turn raw model output into a user experience that is safe, clear and supportive.
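Several of the practices above can start as simple metrics. The sketch below assumes a hypothetical `Session` record holding the user's messages and a `resolved` flag (for example, set when the user confirms the task is done); the names, the 0.5 thresholds and the `HIGH_RISK_TERMS` list are illustrative assumptions. It treats consecutive user messages with high word overlap (Jaccard similarity) as rephrases, counts unresolved sessions as abandoned, and flags a session for human support when the user keeps rephrasing or mentions a high-risk term.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical terms that should trigger human review.
HIGH_RISK_TERMS = {"dosage", "overdose", "self-harm", "lawsuit"}


@dataclass
class Session:
    user_turns: list[str]
    resolved: bool  # hypothetical flag, e.g. set when the user confirms success


def tokens(text: str) -> set[str]:
    """Lowercased word set of a message (letters, digits, apostrophes, hyphens)."""
    return set(re.findall(r"[a-z0-9'-]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity between two messages, from 0.0 to 1.0."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def rephrase_rate(user_turns: list[str], similarity: float = 0.5) -> float:
    """Share of consecutive user-message pairs that look like rephrasings."""
    pairs = list(zip(user_turns, user_turns[1:]))
    if not pairs:
        return 0.0
    return sum(jaccard(prev, cur) >= similarity for prev, cur in pairs) / len(pairs)


def abandonment_rate(sessions: list[Session]) -> float:
    """Share of sessions that ended without the task being resolved."""
    if not sessions:
        return 0.0
    return sum(not s.resolved for s in sessions) / len(sessions)


def needs_human(session: Session, max_rephrase_rate: float = 0.5) -> bool:
    """Route to human support on repeated rephrasing or a high-risk term."""
    words = set().union(*(tokens(turn) for turn in session.user_turns))
    return (rephrase_rate(session.user_turns) >= max_rephrase_rate
            or bool(words & HIGH_RISK_TERMS))


stuck = Session(["How do I export my data?", "How can I export my data?"], resolved=False)
print(rephrase_rate(stuck.user_turns), needs_human(stuck))  # prints "1.0 True"
```

Word overlap is a crude proxy for "the user is asking the same thing again"; the thresholds here are placeholders that a team would tune against reviewed conversations.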

Internal copilots need context too

The importance of context extends to enterprise copilots.

If an engineer asks to “restart the system” during a maintenance conversation, they likely mean a software reboot. If a factory worker asks the same thing on a safety channel, they may mean a physical machine.

A generic answer could slow down production or create safety risks.

User analytics help internal teams understand intent and design workflows that respect domain knowledge and safety requirements.
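The restart example can be handled by attaching channel context to each request before it reaches the model. This is a minimal sketch assuming a hypothetical mapping from channel names to domains; `CHANNEL_DOMAINS`, the channel names, `AMBIGUOUS_PHRASES` and `build_context` are illustrative, not a real API. It asks a clarifying question when an ambiguous command arrives from a channel with no known domain, and flags every request from a physical-equipment channel for confirmation by a person.

```python
from __future__ import annotations

# Hypothetical channel-to-domain mapping maintained by the internal team.
CHANNEL_DOMAINS = {
    "#it-maintenance": "software",
    "#plant-safety": "physical_equipment",
}

# Phrases whose meaning depends on where they are said.
AMBIGUOUS_PHRASES = ("restart the system", "shut it down", "reset everything")


def build_context(text: str, channel: str) -> dict[str, object]:
    """Derive routing hints for a request from the channel it was sent in."""
    domain = CHANNEL_DOMAINS.get(channel)
    ambiguous = any(phrase in text.lower() for phrase in AMBIGUOUS_PHRASES)
    return {
        "domain": domain,
        # Without a known domain, ask rather than guess what "the system" is.
        "ask_clarifying_question": ambiguous and domain is None,
        # Requests from a physical-equipment channel are flagged for a person to confirm.
        "require_human_confirmation": domain == "physical_equipment",
    }


print(build_context("Please restart the system", "#plant-safety"))
```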

Build AI you can trust

Correct answers are only part of the equation. Context determines whether an answer helps or harms.

User analytics capture the subtle signals that reveal what users need and how they feel. By combining intent analysis, conversation mapping and sentiment tracking, teams can create AI products that respond with care and clarity.

To learn more about these techniques, explore our guide on product usage analytics. If you want to see how user analytics can make your AI safer and more effective, book a demo today.
