Once your assistant is in production, usage builds up fast. It spreads across teams, countries, and use cases. At that point, surface-level metrics stop being helpful.

Latency and uptime confirm the system is running. But they won’t show whether someone gave up halfway through a query. They won’t explain what users are actually trying to do, or why the assistant did or didn’t help.

What teams need now is a different kind of signal: one that shows which tasks are being completed, where users get stuck, and how satisfied they are with the answers.

Feedback tools don’t give enough insight

Most teams try to collect feedback with surveys or thumbs up/down buttons. But response rates are low. We’ve seen assistants handle more than 100,000 chats and collect fewer than 200 feedback signals, a response rate below 0.2%.

That’s not enough to guide decisions or find friction points.

User analytics gives teams a better way to learn. It turns what users are already doing (prompts, behavior, drop-offs) into real signals.

No need to wait for feedback forms.
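To make the idea concrete, here is a minimal sketch of turning session logs into completion, drop-off, and feedback rates. The `Session` fields are hypothetical, in particular `task_completed`, which assumes you already have some heuristic or classifier that labels a session as completed. Treat this as an illustration under those assumptions, not a description of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One assistant conversation. All fields are illustrative assumptions."""
    session_id: str
    turns: int             # number of user prompts in the session
    task_completed: bool   # hypothetical label from a heuristic or classifier
    feedback_given: bool   # user clicked thumbs up/down or answered a survey


def summarize(sessions):
    """Return completion, drop-off, and feedback rates for a list of sessions."""
    total = len(sessions)
    if total == 0:
        return {"completion_rate": 0.0, "drop_off_rate": 0.0, "feedback_rate": 0.0}
    completed = sum(s.task_completed for s in sessions)
    feedback = sum(s.feedback_given for s in sessions)
    return {
        "completion_rate": completed / total,
        # Here a "drop-off" is simply any session not labeled as completed.
        "drop_off_rate": (total - completed) / total,
        "feedback_rate": feedback / total,
    }
```

Even when the feedback rate is tiny, the completion and drop-off rates are computed from every session, which is why behavioral signals scale where feedback forms do not.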

You don’t need millions of users to start learning

As soon as an assistant is being used by real people, whether employees or customers, patterns begin to emerge.

We’ve seen companies use user analytics to uncover:

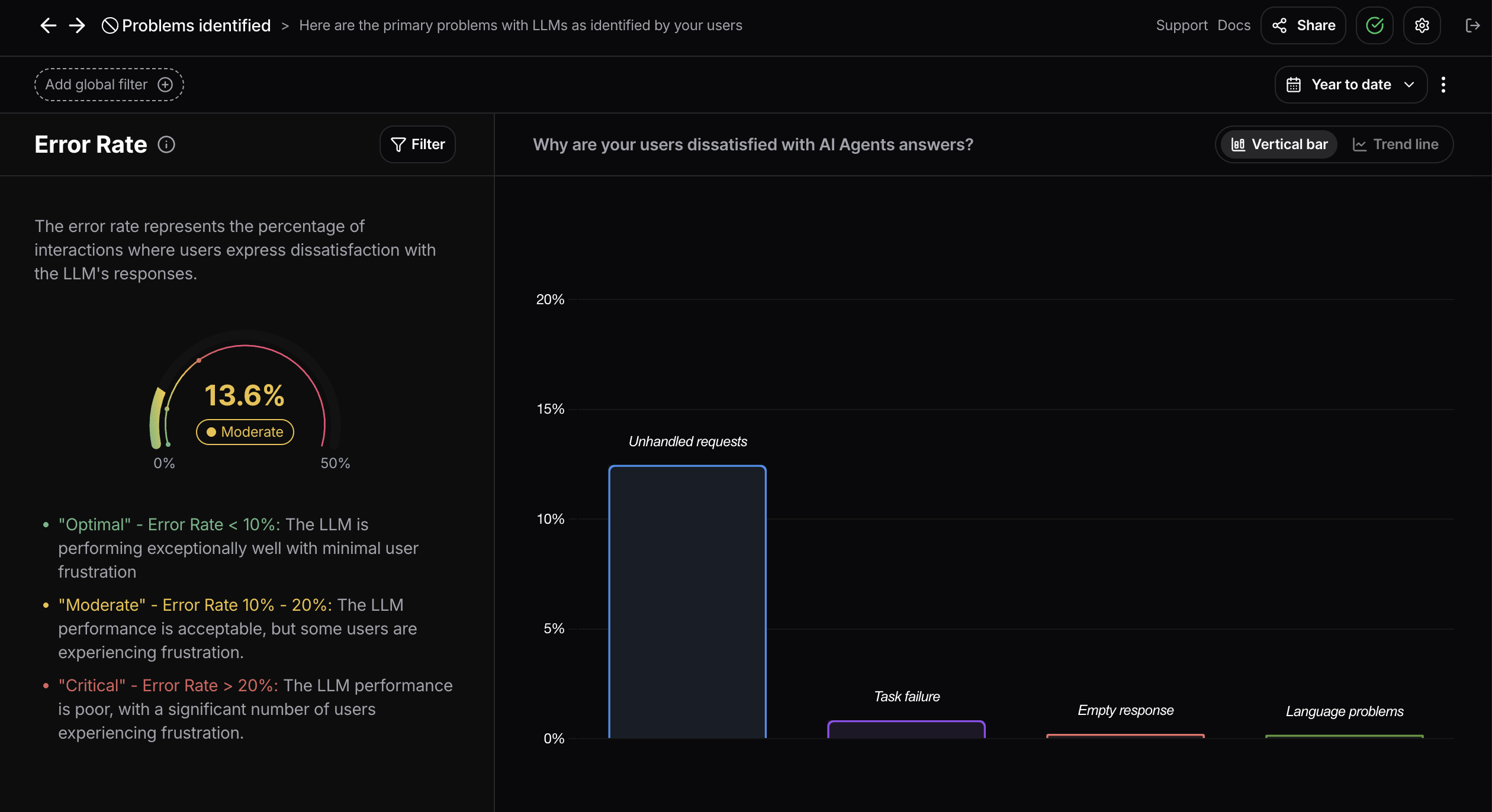

- High error rates tied to specific languages

- Prompting issues that led to targeted prompt training

- Groups with near-full adoption and others with almost none, within the same project

These patterns help teams focus on what needs attention instead of guessing.
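As a rough sketch of how two of the patterns above could be surfaced from chat logs, the snippet below computes error rate per language and adoption per team. The record keys (`language`, `error`, `team`, `user_id`) and the `team_sizes` mapping are assumptions made for illustration; real logs will have a different shape.

```python
from collections import defaultdict


def error_rate_by_language(chats):
    """Share of chats flagged as errors, grouped by language."""
    counts = defaultdict(lambda: [0, 0])  # language -> [errors, total]
    for chat in chats:
        counts[chat["language"]][1] += 1
        if chat["error"]:
            counts[chat["language"]][0] += 1
    return {lang: errors / total for lang, (errors, total) in counts.items()}


def adoption_by_team(chats, team_sizes):
    """Share of each team's members who used the assistant at least once."""
    active = defaultdict(set)  # team -> set of distinct user ids
    for chat in chats:
        active[chat["team"]].add(chat["user_id"])
    return {
        team: len(active[team]) / size
        for team, size in team_sizes.items()
        if size > 0
    }
```

Iterating over `team_sizes` rather than over the chats means teams with no usage at all still appear in the result, with an adoption of 0.0, which is exactly the gap you want to see.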

This shows up in three common areas

We see this pattern most often in:

- Internal AI copilots: Assistants that help employees search documents, automate tasks, or answer policy questions

- Customer support bots: Assistants that deflect tickets, resolve common issues, or triage handoffs to humans

- End-user product features: GenAI-powered tools that help customers get personalized answers, generate content, or make decisions

In all three, usage data helps teams measure what’s actually happening and what needs attention.

User analytics isn’t just for product teams

Many teams benefit from these insights, depending on how the assistant is used.

- Product and AI teams use them to improve the assistant experience

- HR teams see what employees are asking about time off, policies, or internal tools

- Internal comms teams spot areas where company messages aren’t clear

- Ops and IT track which departments are adopting the assistant

- Customer support identifies when chatbots succeed and when humans need to step in

- Marketing learns from customer-facing assistants what users care about most

Each team sees how the assistant performs in their context and where to take action.

When the assistant is live, user analytics brings clarity

If your GenAI tools are in production, usage is already generating insights. You don’t need to guess where adoption is happening or what users are struggling with.

User analytics turns that activity into clear, actionable data. It helps teams understand what’s working, what needs attention, and how to improve across the board.

If that’s where you are, it’s time to make the most of it.

Book a demo to see how it works in practice.