A simple truth: usage alone is not success

An internal copilot gets deployed. Users log in. The system runs flawlessly. But six months later, adoption has stalled at 25% and the budget moves to the next project.

The system worked perfectly. The business didn't.

This is the gap that 2025 exposed and that 2026 will force teams to close. Usage numbers look good on a dashboard. But usage without value is just noise. The difference between a successful AI product and an abandoned one is not whether people click it. It is whether they find it worth clicking, whether they come back, and whether their work actually improves.

User signals reveal all of this. They show not what the AI produces. They show what real people do with what the AI produces.

MIT's latest research into enterprise AI deployment calls this the GenAI Divide. The top 5% of organizations see real ROI. The bottom 95% see adoption that goes nowhere. The divide is not about model quality or infrastructure. It is about visibility into human behavior.

User signals vs system metrics: the distinction that matters now

System metrics tell you the engine is running. User signals tell you if anyone cares that it is running.

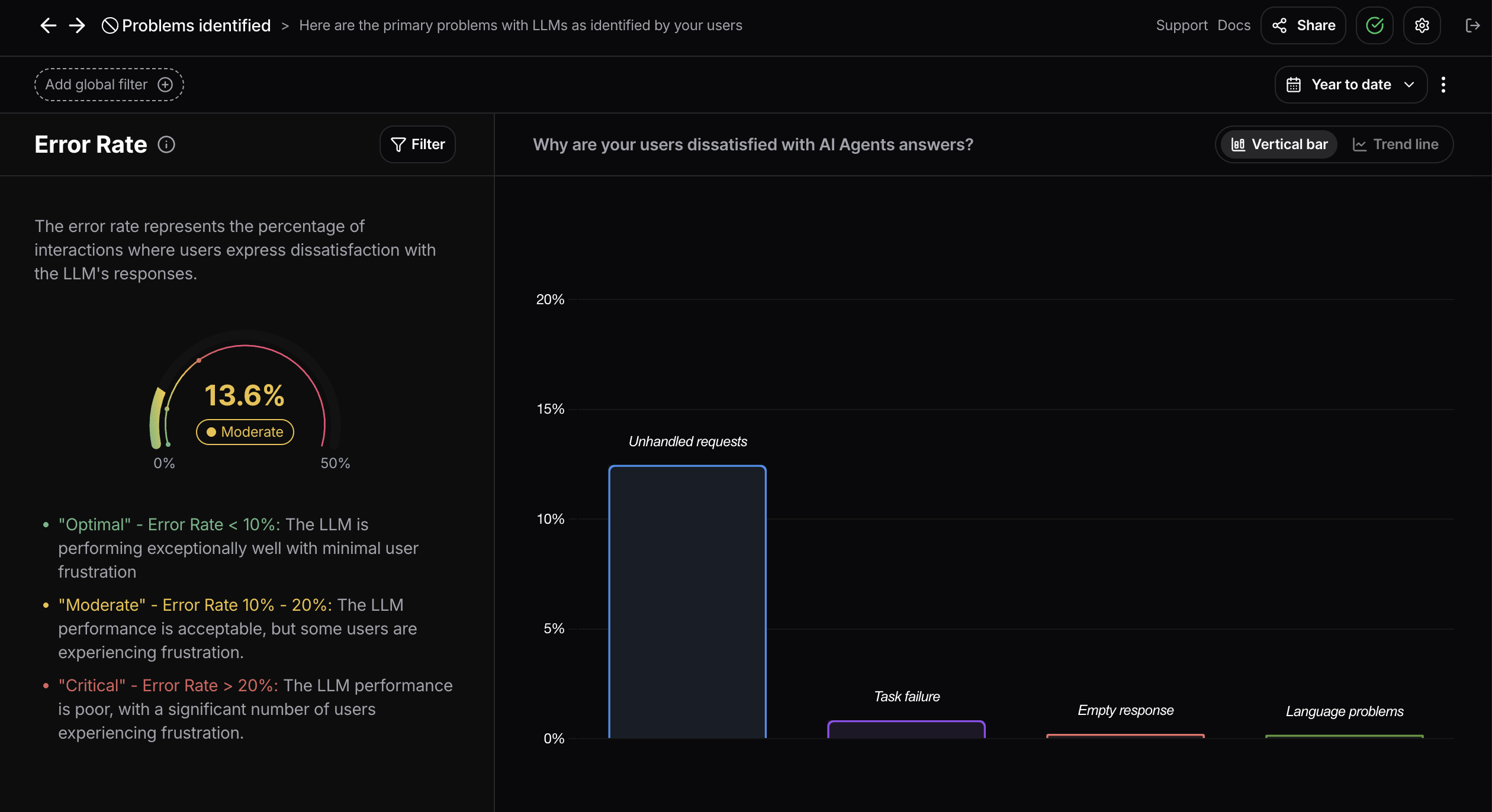

System metrics are clean. Response time under 300ms. Throughput at 1,000 requests per minute. Error rate at 0.02%. These sit on the infrastructure dashboards engineers watch. They answer the question "Is the system working?" Very often, the answer is yes.

User signals are messier. They live in conversations, behavior patterns, and moments of friction. They answer different questions. Is anyone using this? Do they understand how to use it? When they get stuck, what is the actual problem? Do they come back or abandon after the first try? Did this actually help them accomplish something?

A team at a global bank deployed an AI assistant for trading-floor analysis. System metrics were flawless. One-second response times. 99.99% uptime. Sub-20ms latency. But user signals told a different story. Traders were asking clarifying questions after every response. They were rephrasing queries multiple times. Many were abandoning conversations midway.

The infrastructure dashboard showed green lights everywhere. The product was failing.

User signals captured what the system could not see about itself.

McKinsey's 2025 State of AI report found that 80% of organizations using AI have seen zero increase in bottom-line impact. Not because their systems were broken. Because they had no visibility into whether people were actually succeeding.

What teams miss when they ignore behavior

Organizations that rely on system metrics alone tend to make the same costly mistakes.

First, they optimize for the wrong things. Speed without accuracy. Throughput without relevance. They prioritize response time when the real blocker is user comprehension. Suppose the AI can answer in 200ms with a response the user does not understand, or in 800ms with one that solves the problem. The faster response wins on dashboards. The slower one wins in reality.

Second, they miss adoption blockers until it is too late. Small friction points that seem trivial in week one become reasons people abandon the tool in week three. Rephrasing a question twice is a minor annoyance. Rephrasing five times in a week is a reason to stop trying. By the time quarterly feedback arrives, the decision is already made. User signals catch this early enough to fix.

Third, they build compliance problems they do not see until auditors do. An AI tool handles customer data that should be encrypted. The system does not crash. Logs do not flag it. But user behavior analysis shows sensitive information entering conversations where it does not belong. Early detection prevents disasters. Late detection becomes a breach.

Fourth, they miss where users actually need help. Product teams plan use cases. Users invent different ones. An AI assistant built for customer support gets used internally for employee training instead. Usage data would show this. Behavior signals explain why this matters. The tool works better for training than support. The roadmap should follow the users, not the plan.

Gartner research on AI adoption shows that organizations tracking user behavior signals catch adoption problems in days. Organizations without them discover issues 4 to 6 weeks later, when momentum is already lost.

Three user signal categories that predict success

Not all signals matter equally. Three categories predict whether an AI product will scale or stall.

Intent accuracy and patterns

Intent is what the user actually came to do. It is the difference between "Can you help me?" and "Help me draft an email to my manager about next quarter's budget that sounds confident but open to feedback."

Most AI systems do not capture intent well. They capture queries. Intent is deeper. It sits in the context, the follow-up questions, the moment when a user rephrases because they were not understood.

Organizations tracking user intent see adoption rates 2.5x higher than those measuring only feature usage. Intent tracking reveals whether the AI is actually solving the right problem or just responding to words on a screen.

A manufacturing company deployed an AI assistant for equipment maintenance. System metrics showed 500 queries per day. Behavior analysis revealed that 40% of queries clustered around a specific machine type that had only been added to the training data two weeks prior. The pattern pointed to an obvious gap in the training data. The company fixed it within days because they knew exactly what to fix.
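A pattern like this can surface automatically. The sketch below is minimal and rests on two assumptions. First, each logged query has already been tagged with a topic, such as a machine type, by some upstream classifier. Second, the documents the assistant was built from are tagged the same way. The sketch compares each topic's share of queries with its share of those documents. A topic that is asked about far more often than it is documented is a likely coverage gap. The function name `coverage_gaps`, the 2.0 ratio cutoff and the sample data are all hypothetical illustrations, not part of the system described above.

```python
from collections import Counter


def coverage_gaps(query_topics: list[str], doc_topics: list[str],
                  min_ratio: float = 2.0) -> dict[str, float]:
    """Return topics whose share of queries is at least `min_ratio` times
    their share of the documents the assistant was built from.

    Topics that appear in queries but in no document get ratio inf.
    """
    queries, docs = Counter(query_topics), Counter(doc_topics)
    q_total, d_total = len(query_topics), len(doc_topics)
    gaps: dict[str, float] = {}
    for topic, n in queries.items():
        q_share = n / q_total
        d_share = docs[topic] / d_total if d_total else 0.0
        ratio = float("inf") if d_share == 0 else q_share / d_share
        if ratio >= min_ratio:
            gaps[topic] = ratio
    return gaps


# Hypothetical tagged data: press-7 draws 40% of questions
# but makes up only 10% of the documentation.
query_topics = ["press-7"] * 4 + ["lathe-2"] * 3 + ["mill-1"] * 3
doc_topics = ["press-7"] * 1 + ["lathe-2"] * 5 + ["mill-1"] * 4
print(coverage_gaps(query_topics, doc_topics))
```

A ratio measures the mismatch more directly than raw volume does. A popular topic that is well documented does not get flagged. A modest topic with almost no documentation does.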

Without intent patterns, this data stays invisible. The team thinks the system is working. Usage numbers look normal. Adoption plateaus anyway.

Friction and abandonment points

Friction is everywhere in AI products. Users who do not understand the output. Responses that sound confident but are wrong. Edge cases the model cannot handle. Knowledge gaps the system does not admit.

When friction builds, users do not complain. They leave quietly. This is silent churn. In B2B internal tools, the cost is slower work and lower adoption. In customer-facing AI, the cost is lost revenue and eroded trust.

When users encounter friction, they exhibit detectable patterns. Rephrasing their question more than once. Sending shorter, more frustrated messages. Asking for human help. Abandoning mid-task. These are signals, not errors.
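The patterns above can be approximated with simple heuristics over a conversation log. This sketch is illustrative only, and several of its pieces are assumptions: the `Turn` structure, the escalation phrases, and the 0.6 similarity cutoff for counting a message as a rephrase. Message length is only a crude stand-in for frustrated tone, which would really need a sentiment model.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher

# Hypothetical phrases suggesting the user wants a person instead of the AI.
ESCALATION_PHRASES = ("talk to a human", "speak to someone", "real person", "live agent")


@dataclass
class Turn:
    role: str  # "user" or "assistant"
    text: str


def friction_signals(turns: list[Turn], completed: bool,
                     rephrase_cutoff: float = 0.6) -> dict:
    """Heuristic friction flags for one conversation."""
    user_msgs = [t.text.strip().lower() for t in turns if t.role == "user"]
    pairs = list(zip(user_msgs, user_msgs[1:]))
    # Rephrase: a user message textually similar to the user message before it.
    rephrases = sum(1 for a, b in pairs
                    if SequenceMatcher(None, a, b).ratio() >= rephrase_cutoff)
    # Crude frustration proxy: at least three user messages, each shorter
    # than the one before.
    shrinking = len(user_msgs) >= 3 and all(len(b) < len(a) for a, b in pairs)
    asked_for_human = any(p in m for m in user_msgs for p in ESCALATION_PHRASES)
    return {
        "rephrases": rephrases,
        "repeated_rephrasing": rephrases >= 2,  # "more than once"
        "shrinking_messages": shrinking,
        "asked_for_human": asked_for_human,
        "abandoned": not completed,
    }
```

Each flag is cheap to compute for a single conversation. Aggregated across users and weeks, the counts show where friction concentrates.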

A financial services chatbot tracked abandonment points and discovered that 70% of dropped conversations happened at the same place in the workflow. The AI was being asked for compliance advice, and users lost trust in the answer. The fix was not a better model. It was a simple disclaimer and a way to escalate to a human. Adoption doubled because the blocker was removed.
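Finding a hotspot like that mostly comes down to recording where each conversation stopped. Here is a minimal sketch. It assumes every conversation record carries two hypothetical fields: a `last_step` label for the workflow stage it reached, and a `completed` flag. The sample records are invented for illustration.

```python
from collections import Counter


def abandonment_by_step(conversations: list[dict]) -> list[tuple[str, float]]:
    """Share of abandoned conversations that ended at each workflow step,
    largest first. Assumes hypothetical 'last_step' and 'completed' fields."""
    last_steps = Counter(c["last_step"] for c in conversations if not c["completed"])
    dropped = sum(last_steps.values())
    if dropped == 0:
        return []
    return [(step, n / dropped) for step, n in last_steps.most_common()]


# Hypothetical records: completed conversations are excluded from the shares.
conversations = (
    [{"last_step": "compliance_question", "completed": False}] * 7
    + [{"last_step": "data_lookup", "completed": False}] * 3
    + [{"last_step": "summary", "completed": True}] * 5
)
for step, share in abandonment_by_step(conversations):
    print(f"{step}: {share:.0%} of dropped conversations")
```

Sometimes a single step accounts for most of the drops, as in the compliance example. That result points at one fixable blocker rather than general disinterest.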

Teams without friction signal visibility assume people just are not trying hard enough. The real issue sits in their data, waiting to be seen.

Gartner found that autonomous agents that fail at their tasks fail in predictable ways. By tracking where agents get stuck or loop on the same action, teams can identify and fix root causes. Carnegie Mellon research showed that the best AI agents complete only 24% of real work tasks. Understanding why is where user signals become critical.

Satisfaction proxies

Explicit satisfaction (thumbs up, surveys, NPS) captures maybe 1% of real experience. The other 99% sits in behavior.

If users return to the tool, satisfaction is happening. If they abandon, something is wrong. If they accept the AI's suggestions, they trust it. If they override everything, they do not. These behavioral proxies are more honest than any survey response.

Research from enterprise AI deployments shows that satisfaction proxies predict 30-day retention with 85% accuracy. If you see acceptance rate above 70%, return rate above 40%, and conversation completion rate above 60% in week one, the product will scale. If these signals are weak, adoption will stall regardless of system performance.

These are not vanity metrics. They are leading indicators that predict whether three months from now, users will be relying on the AI or remembering why they do not.
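The three week-one thresholds quoted above can be checked mechanically. The sketch below uses hypothetical definitions for each rate:

- Acceptance rate: accepted suggestions divided by suggestions shown.
- Return rate: the share of week-one users who were active on at least two distinct days.
- Completion rate: completed conversations divided by started ones.

The sketch only flags which proxies fall at or below the stated thresholds. It does not reproduce the retention prediction itself.

```python
from dataclasses import dataclass
from datetime import date

# Week-one thresholds quoted in the text; a proxy must exceed its threshold.
THRESHOLDS = {"acceptance": 0.70, "return": 0.40, "completion": 0.60}


@dataclass
class WeekOneStats:
    suggestions_shown: int
    suggestions_accepted: int
    conversations_started: int
    conversations_completed: int
    active_days_by_user: dict[str, set[date]]  # user id -> days with activity


def _rate(part: int, whole: int) -> float:
    return part / whole if whole else 0.0


def satisfaction_proxies(s: WeekOneStats) -> dict[str, float]:
    users = s.active_days_by_user
    returning = sum(1 for days in users.values() if len(days) >= 2)
    return {
        "acceptance": _rate(s.suggestions_accepted, s.suggestions_shown),
        "return": _rate(returning, len(users)),
        "completion": _rate(s.conversations_completed, s.conversations_started),
    }


def weak_signals(s: WeekOneStats) -> list[str]:
    """Names of proxies at or below their threshold."""
    proxies = satisfaction_proxies(s)
    return [name for name, value in proxies.items() if value <= THRESHOLDS[name]]


# Hypothetical week-one numbers for illustration.
stats = WeekOneStats(
    suggestions_shown=100, suggestions_accepted=75,
    conversations_started=50, conversations_completed=28,
    active_days_by_user={
        "u1": {date(2025, 1, 6), date(2025, 1, 8)},
        "u2": {date(2025, 1, 6)},
        "u3": {date(2025, 1, 7)},
    },
)
print(weak_signals(stats))  # ['return', 'completion']
```

Here acceptance clears its bar at 75%. Return, at one in three users, and completion, at 56%, do not. The check therefore names the two signals worth investigating first.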

What this means for product decisions in 2026

The teams that win in 2026 will not be building better models. They will be building better signal systems.

Better visibility into intent means better prioritization. You will know what users actually need because you can see what they actually ask for. Better friction detection means faster iteration. You will fix the real problems, not the ones you assumed existed. Better satisfaction tracking means you will catch adoption issues early, when they take days to fix instead of weeks.

This changes when and how teams make decisions. Quarterly planning becomes weekly optimization. Assumption-driven development becomes behavior-driven development. Launch and hope becomes launch and listen.

The infrastructure side will keep mattering. Latency, uptime, reliability. These do not go away. But they become table stakes, not differentiators. Differentiation moves to the human layer. Do users understand the AI? Do they trust it? Do they keep using it? Can you see why or why not?

Every product roadmap that ignores user signals will stall. Every product roadmap built on them will have a clear path forward. That is the lesson of 2025 that 2026 will make obvious.

Understanding user signals is not about surveillance. It is about survival. If you are ready to see what your users are actually telling you through their behavior, we are here to help. Book a demo to turn conversations into insight.